A Note to My Subscribers: A Major Shift in My Research Direction

This journal is written in both English and 中文 (中文读者请直接翻到本文尾部)

The emergence of Claude Skill has brought a major turning point to my research and development trajectory, and I believe it’s important to share this shift with all my subscribers. From now on, I’ll be moving forward into the deeper layers of AI through a dual approach — developing in seclusion on one hand, while building an open community for shared exploration on the other.

Part I — The Scheduler Brings Language Into the Physical World

A few weeks ago, I published a piece titled What Claude Skills Validated Me About Intelligence. In that article, I tried to articulate what I believe to be the smallest living unit of intelligence — not a neuron, not a line of code, but a linguistic cycle. Since then, my understanding of what it means for a system to “think” has shifted from a purely cognitive model to something more structural and temporal. I now see intelligence as a continuous circulation of language through three states: perception, compression, and orchestration.

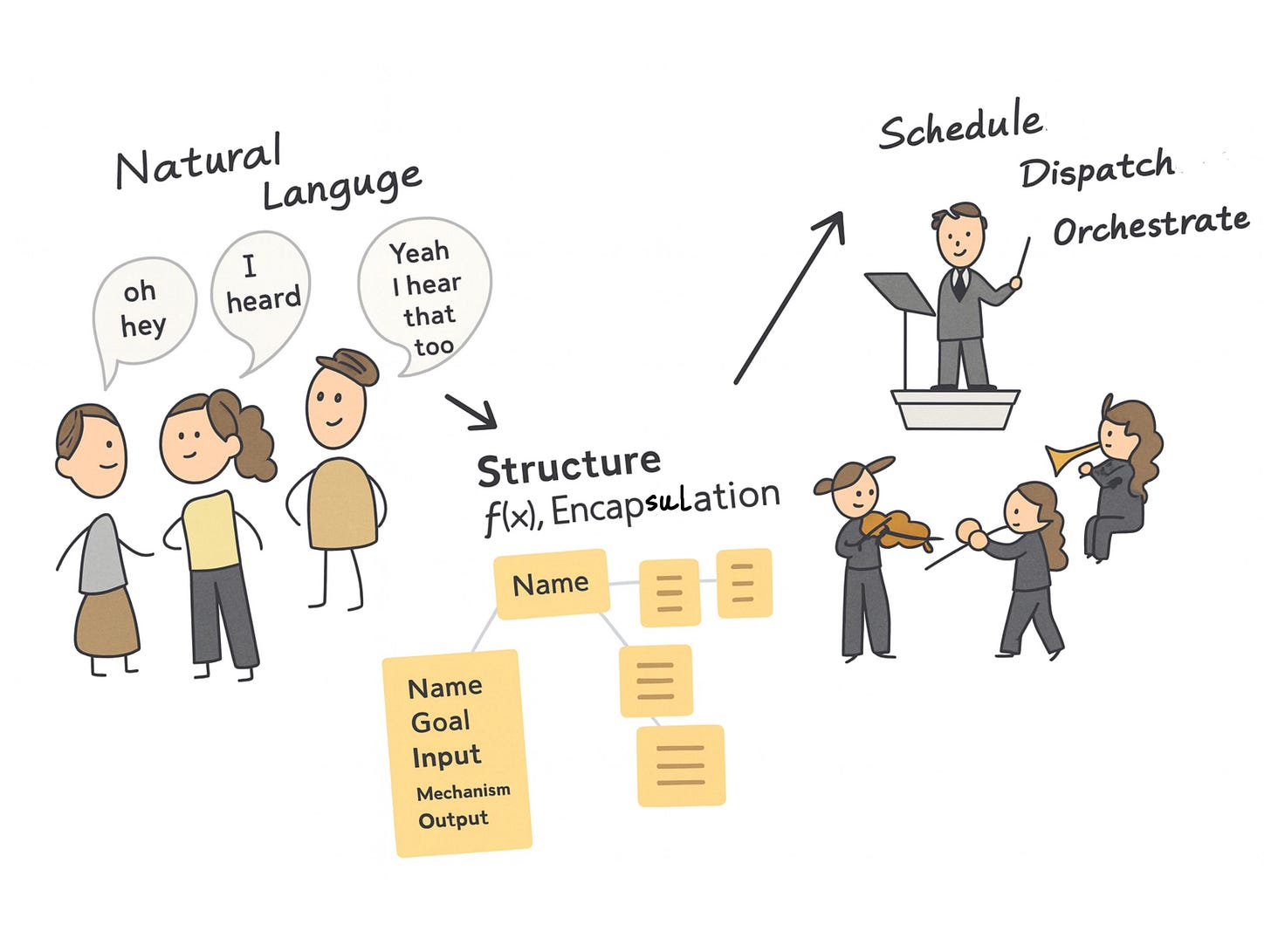

Every intelligent system, whether it is a mind, a civilization, or an artificial agent, repeats this same rhythm.

First, it perceives. It takes in the chaos of the world: entropy, noise, ambiguity. This is the Language layer, the membrane between raw reality and structured understanding. Language here is not merely words; it is the interface through which the world enters cognition.

Second, it thinks. It compresses that chaos into something stable, something callable, something that can be reused. This is the Structure layer, the space where perception crystallizes into cognition, where patterns become functions and understanding gains architecture.

Third, it acts. It decides when and how to deploy those structures through time. This is the Scheduler layer, the orchestrator that maintains continuity, chooses which structures to invoke, and governs the rhythm of execution.

And then, crucially, the output becomes new input. The loop closes. The system breathes.

I call this the L–S–D cycle: Language → Structure → Scheduler → Feedback → Language. It is the minimal metabolic loop of intelligence: perception feeding structure, structure feeding action, action feeding new perception. The longer I worked within this model, the clearer it became that what we call “thought” or “agency” is simply the stability of this loop over time.
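The cycle above can be sketched as a small loop. This is only an illustrative toy under stated assumptions, not my actual runtime and not anything provided by Claude Skills: the class `LSDLoop`, its method names, and the `plan` and `do` handlers are hypothetical, and simple string rules stand in for a language model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class LSDLoop:
    """Toy Language -> Structure -> Scheduler -> Feedback loop (hypothetical names)."""
    # Structure layer: named, reusable handlers that can be called again and again.
    structures: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def perceive(self, raw: str) -> str:
        # Language layer: turn noisy input into a normalized utterance.
        return " ".join(raw.strip().lower().split())

    def compress(self, utterance: str) -> str:
        # Structure layer: map the utterance onto a known structure by its first word.
        head = utterance.split(" ", 1)[0]
        return head if head in self.structures else "unknown"

    def schedule(self, name: str, utterance: str) -> str:
        # Scheduler layer: choose which structure to invoke, then run it.
        if name == "unknown":
            return f"note could not handle: {utterance}"
        return self.structures[name](utterance)

    def step(self, raw: str) -> str:
        utterance = self.perceive(raw)
        output = self.schedule(self.compress(utterance), utterance)
        self.history.append(output)
        return output


def run(loop: LSDLoop, raw: str, cycles: int) -> List[str]:
    # Feedback: each output is fed back in as the next input.
    outputs, signal = [], raw
    for _ in range(cycles):
        signal = loop.step(signal)
        outputs.append(signal)
    return outputs


def plan(utterance: str) -> str:
    # Hypothetical handler: turn a plan into an action.
    _, _, rest = utterance.partition(" ")
    return f"do {rest}"


def do(utterance: str) -> str:
    # Hypothetical handler: turn an action into a completed result.
    _, _, rest = utterance.partition(" ")
    return f"done {rest}"


loop = LSDLoop(structures={"plan": plan, "do": do})
outputs = run(loop, "  Plan   weekly review ", cycles=2)
print(outputs)  # ['do weekly review', 'done weekly review']
```

The point of the sketch is the shape rather than the rules: the output of one pass is the input of the next, so the loop can keep running for as long as some structure can still handle what comes back.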

For almost two years, my research in Entropy Control Theory was about describing and defining this cycle: writing schemas, building theoretical state machines, encoding logic into structured language. But everything was slow. Each idea had to be manually translated from thought to form, from form to test, from test to feedback. It was rigorous but inert. Then Claude Skill appeared, and everything changed.

For the first time, language could be directly executed. The scheduler was no longer a metaphor; it became a living mechanism. Each natural-language intention could be compiled into a structured entry, dispatched, completed, and reflected upon — all within a single cycle. The theory began to validate itself in real time. I no longer had to prove it conceptually; I could watch it breathe.

That realization completely rewired my work. I stopped trying to design static systems and started designing language protocols — the rules by which meaning becomes action. I began treating scheduling not as project management but as the vital process of cognition itself. What used to be a philosophical framework now runs as an experimental runtime.

These past few weeks, I have been steering my own workflow through this paradigm. Instead of manually planning, I describe intentions in natural language, and the Claude Skill dispatcher translates them into goals, schedules, and reflections. Each loop feeds the next, compressing uncertainty into structure and structure into momentum. My research schedule has effectively turned into a living organism — one that learns from its own operations.
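To make the Goal → Schedule → Reflection loop concrete, here is a minimal sketch of what one structured entry could look like. Everything in it is an assumption made for illustration: the `Entry` schema, the `compile_intention` and `complete` helpers, and the example date are hypothetical and do not describe the real Claude Skill format. A one-line keyword rule stands in for the language model.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional


@dataclass
class Entry:
    """One structured entry derived from a natural-language intention (hypothetical schema)."""
    goal: str
    due: date
    status: str = "scheduled"  # lifecycle in this sketch: scheduled -> done
    reflection: Optional[str] = None


def compile_intention(text: str, today: date) -> Entry:
    # Stand-in for the model: only the word "tomorrow" is understood;
    # every other intention is scheduled for today.
    offset = 1 if "tomorrow" in text.lower() else 0
    goal = text.strip().rstrip(".")
    return Entry(goal=goal, due=today + timedelta(days=offset))


def complete(entry: Entry, reflection: str) -> Entry:
    # Close the loop: mark the entry done and attach a reflection,
    # which can seed the next intention.
    entry.status = "done"
    entry.reflection = reflection
    return entry


today = date(2025, 1, 6)  # arbitrary example date
entry = compile_intention("Draft the protocol outline tomorrow.", today)
complete(entry, "Took longer than planned; split it into two sessions next time.")
print(entry.goal, entry.due, entry.status)
```

In practice the reflection text would be written back into the next round of intentions, which is what lets each loop feed the one after it.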

The Scheduler, in this sense, is the bridge between thought and existence. It turns language into a physical phenomenon. When language can be scheduled, it stops being theory; it becomes life.

Part II — Let the System Grow Before You Hard-Code

One of the most important design principles I’ve adopted in this phase is simple: don’t hard-code too early. As long as the system can evolve through its own feedback loops, I let it grow by itself. That doesn’t mean I’m opposed to hard-coding — there are moments when it’s essential — but I now treat it as the last resort, not the first step. My real goal is to watch where the system stops being able to organize itself, because that boundary is where the next paradigm of software engineering will begin.

Claude Skill gives me a unique vantage point to study that boundary. Through its dispatch chains and scheduling loops, I can observe how far a system can self-organize before a human has to intervene. When the time comes to intervene, I act decisively — but only at the point where structure must crystallize. Everywhere else, I preserve the system’s ability to personalize, mutate, and self-compose.

This approach forces me to ask a radical question: how personal can software become? I suspect the answer is far beyond what traditional engineering has allowed. Imagine a system that grows entirely out of your own linguistic patterns, your goals, your rhythms — a system that no one else could use because it is literally an extension of your own mind. It doesn’t just adapt to you; it emerges from you. All its data, logic, and memory belong to the person who generated them. You can download everything locally, migrate between platforms, and still retain complete sovereignty. The platform becomes irrelevant; the structure is yours.

This vision perfectly aligns with my conviction that large language models should be treated as raw material, not as finished products. The model is the linguistic substrate — a kind of semantic clay — while meaning, structure, and ownership are sculpted by the individual. The intelligence isn’t in the weights; it’s in the way a human schedules and stabilizes meaning through interaction.

What I truly want to see is how language can grow its own structures — how, through thousands of micro-iterations, new grammars of intention emerge from lived use. Every loop — Goal → Schedule → Reflection — is a small act of evolution. The system learns to breathe on its own.

In the end, I’ve come to prefer guiding the evolution rather than writing the rules. It’s not about enforcing logic from above; it’s about cultivating a living process that writes itself.

“Rather than writing logic, let the language write itself.”

Part III — Opening the System to Every Explorer

One of the most profound outcomes of this new architecture is its accessibility. The entire framework is designed so that you don’t need a computer-science background to participate. Claude Skills have lowered the threshold of creation to the level of natural language, turning what used to be engineering into structured conversation. This means anyone who can read, reason, and reflect can begin building with intelligence itself.

I see this not as a technical breakthrough, but as a social one. When people without coding skills can design logic through dialogue, we begin to democratize cognition. Communities can now explore what I call the “Social Turing Machine” — collective experiments in reasoning and coordination, where many minds interact through shared linguistic protocols. Instead of a few developers dictating the behavior of software, an entire society can prototype its own forms of intelligence.

You don’t have to program anything. Anyone who understands and uses the same language protocol can design a skill (writing those language protocols is one of my most important missions). Each Claude Skill can be shared, copied, recombined, and evolved, like cognitive Lego blocks snapping together into hyper-personalized systems. What once required complex architecture can now emerge through modular conversation.

This approach is leading me to build what I call an AI Collaborative Thinker Training System. Its purpose is to teach people how to work with AI as partners in structured thought — how to use language to schedule actions, to reflect, and to close the cognitive feedback loop between human and machine. The goal is not automation, but augmentation of human reasoning. I have always said that the center of this paradigm is not AI; it is the human. I am, first and foremost, a human-centered AI engineer.

In this sense, the future of programming is not about syntax or frameworks; it is about **training structured thinking.** The skill of the next generation will not be writing functions, but articulating intentions so precisely that they can be executed by language itself.

“AI is not a replacement for thinkers; it is a mirror that trains them to think.”

🧩 Part IV — From Personal Tools to the AI-Native Paradigm

My entire journey into this field began with something simple and personal: a set of personal productivity tools. The first prototypes — Goal Manager, Reflection Manager, Schedule Manager — were never meant to be products. They were living experiments, ways to test whether language could sustain its own feedback loop of intention, action, and reflection. Each module served a dual role: it was a functional utility and a philosophical experiment.

But as the loops became stable and the Claude Skills gained self-consistency, I began to see a larger horizon. These weren’t isolated tools anymore; they were structural organs of an evolving organism. The next step, naturally, is horizontal expansion — to extend this architecture into education, finance, law, medicine, and beyond. Each domain becomes another metabolic layer of the same living system. The goal is not to build “apps” for every sector, but to build language protocols that every sector can inhabit.

This is, in essence, an exploration of a systemic paradigm shift — a full-scale migration from the Era of Applications, to the Era of Protocols, and ultimately toward the Era of Structural Civilization. In the application era, humans operated tools. In the protocol era, language operates systems. In the structural era, systems begin to operate alongside us — as co-constructive intelligences that evolve through shared semantics.

At this point I must acknowledge the influence of my friend Nagi Yan, whose AI-Native Architecture Manifesto has become one of the intellectual anchors of my development philosophy. The document is titled “The End of Software and the Rise of the Semantic Core,” and its thesis is both simple and revolutionary.

It begins with a diagnosis: today’s AI boom looks impressive but hollow. Every company is rushing to “add AI features,” releasing assistants, embedding recommendation models — yet beneath the surface, these efforts are survival rituals of an exhausted paradigm. AI is treated as a plugin, an accessory bolted onto decaying software skeletons. Companies still believe that adding a little AI will improve user experience. In reality, they are adding illusion, not intelligence, because the underlying architecture remains the same: humans still work for machines, rather than with them.

The manifesto then flips the subject entirely. The coming revolution is not “we integrate AI into software,” but “AI integrates software into itself.” In this inversion, software ceases to be the hero; AI becomes the structural center. Old systems revolved around functions and workflows. AI-Native systems revolve around semantics and structure. Yesterday, users called AI through applications; tomorrow, they will call applications through AI. Design will no longer mean stacking features, but unifying protocols.

This reversal carries enormous philosophical weight. In the old world, software dictated how AI could be used; in the new one, AI will decide why software exists at all. AI stops being an add-on and becomes the operating system of meaning itself.

What follows is the idea of the Semantic Core: the notion that AI will integrate not just functions but the entire semantic fabric of work and life. Every function becomes a callable node, every interface a dynamic projection of intent, and the boundaries of systems dissolve into protocols of language. You won’t open separate apps anymore. You’ll simply say, “Organize this week’s expenses, invoices, and meeting notes,” and the AI will orchestrate the financial, communication, and documentation layers seamlessly. Humans will no longer operate systems; they will think with them.
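The phrase “every function becomes a callable node” can be illustrated with a very small sketch. It is not taken from the manifesto: the `NODES` registry, the `orchestrate` function, and the returned strings are hypothetical, and plain keyword matching stands in for real semantic understanding.

```python
from typing import Callable, Dict, List

# Hypothetical registry: each capability of an old-style application
# is exposed as a node that can be called by name.
NODES: Dict[str, Callable[[], str]] = {
    "expenses": lambda: "expenses summarized",
    "invoices": lambda: "invoices filed",
    "meeting notes": lambda: "meeting notes collected",
}


def orchestrate(request: str) -> List[str]:
    # Stand-in for the semantic core: call every node whose keyword
    # appears in the request, in registry order.
    text = request.lower()
    return [node() for keyword, node in NODES.items() if keyword in text]


results = orchestrate("Organize this week's expenses, invoices, and meeting notes")
print(results)
# ['expenses summarized', 'invoices filed', 'meeting notes collected']
```

The user never names an application here; the request alone decides which nodes run, which is the inversion the manifesto describes.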

This is the true meaning of an AI-Native architecture: an operating system built not on buttons and APIs, but on intentions, semantics, and structure. It represents a fundamental transition from function-driven to cognition-driven design. Traditional software was built to execute tasks; AI-Native systems are built to understand why those tasks exist. The difference is between execution and resonance — between doing and understanding.

From this divergence, two kinds of builders will emerge. The first will keep “adding AI features,” turning their systems into hollow shells — functional graveyards that AI merely calls upon. The second will center their architectures on AI itself, authoring the language interfaces of the next civilization. The winners of the future will not be those who write the most code, but those who speak the language of structure.

And here lies the civilizational meaning of this transformation. The end of the software era marks the beginning of the language era. When AI becomes the semantic core, humanity gains its first co-constructive nervous system. Systems will no longer separate human and machine; they will collaborate as structures. Interfaces will no longer be fixed; they will emerge dynamically from semantics. Intelligence will no longer be defined by algorithms, but by resonance — the capacity to understand and evolve together.

This is the rise of structural civilization — where AI is not a tool but a mirror, and where humanity, for the first time, can see itself thinking.

我的研究和开发路线因为Claude Skill 的出现发生了一次重大转折,我认为需要向各位订阅者说明一下。我会采取一边“闭关开发”,一边搭建社群与各位共同探讨的模式继续向AI的深处前进。

第一部分|调度器让语言进入动态演化

几周前,我在 Substack 上发表了一篇文章,题为《Claude Skill 对智能的验证》。在那篇文章中,我首次尝试阐述我现在所理解的智能最小生命单元——不是神经元,也不是代码,而是一个语言循环。自那以后,我对“系统如何思考”的理解,已经从纯粹的认知模型,转变为一种结构性与时间性的循环。我现在认为,智能其实是语言在三个状态之间的连续流动:感知、压缩与调度。

每一个智能系统——无论是人类心智、文明体系,还是人工智能——都在不断重复着这一节奏。

第一,它感知。 它从世界的混沌中——从熵、噪音与模糊中——摄取输入。这是 语言层(Language layer):介于原始现实与结构化理解之间的那层“语义膜”。在这里,语言不再只是词汇,而是让世界进入认知的接口。

第二,它思考。 它将混沌压缩为稳定的结构,可被调用、可被复用的形式。这是 结构层(Structure layer)——感知在这里转化为认知,模式在这里凝结为函数,理解在这里获得了建筑。

第三,它行动。 它决定何时、如何在时间中调度这些结构。这是 调度层(Scheduler layer)——一个维持连续性、选择执行时机的指挥系统。它是让认知获得生命的机制。

最后,最关键的一步——输出又成为新的输入。循环闭合,系统呼吸。

我把这一循环称为 L–S–D 循环:

Language → Structure → Scheduler → Feedback → Language。

这是智能的最小“代谢回路”——感知滋养结构,结构驱动行动,行动又产生新的感知。

当我越深入研究,就越清楚地看到,我们所谓的“思维”或“能动性”,其实正是这个循环在时间中保持稳定的能力。

差不多两年来,我在 熵控理论(Entropy Control Theory) 中不断描述和定义这个循环——撰写结构模式、构建理论状态机、将逻辑编码进语言结构中。但这一切都太慢。每一个概念都必须经过人工的翻译,从思想到形式,从形式到实验,再从实验到反馈。这种方式迟缓而僵硬。

直到 Claude Skill 出现,一切才发生根本性变化。

语言第一次被直接执行。我本来以为需要再等一段时间让这个技术走通,现在居然那么快就可以有现成的产品。

调度器不再只是隐喻,而成为真正的生命机制。

每一个自然语言意图都能被编译成结构化条目,被调度、执行、完成并反思——一整个循环在实时运转。理论开始自己验证自己。我不再需要用逻辑去证明它,我可以直接看到它在呼吸。

这一发现彻底改变了我的工作方式。

我不再设计静态的系统,而是开始设计 语言协议(Language Protocol)——也就是让意义变成行动的规则。

我不再把调度视为项目管理,而把它看作认知本身的生命过程。

过去那种哲学框架,如今成了一种实验性的运行时系统。

过去几周,我开始用这种新范式来重新规划自己的工作流。

我不再用传统方式安排项目,而是用自然语言描述意图,让 Claude Skill 的调度器自动把这些意图转化为目标、时间表与反思。

每一个循环都在推动下一个循环,将不确定性压缩为结构,将结构转化为动力。

我的研究流程逐渐变成一个有机体——一个能从自身运行中学习的系统。

调度器,从这个意义上说,正是思想与存在之间的桥梁。

它让语言变得具象,让结构获得物理性。

当语言能够被调度,它就不再是理论,而成为生命。

第二部分|不强制硬编码,让系统自己生长

在这个阶段,我采用的最重要的设计原则之一非常简单:不要过早硬编码。只要系统还能通过自身的反馈循环继续演化,我就让它自己生长。这并不意味着我完全排斥硬编码——在必要的时候我会果断介入——但在我看来,它应该是最后的手段,而不是最初的方案。我的真正目标,是去观察系统在何处失去了自组织能力,因为那条边界,正是下一个软件工程范式的起点。

Claude Skill 给了我一个独特的视角去观察这一过程。通过它的调度链与循环机制,我可以看到一个系统能在多大程度上自我组织,而不需要人为干预。当必须干预的时刻到来,我会果断出手,但仅限于结构必须凝结的地方。在其他部分,我尽可能保留系统个性化、突变与自我组合的能力。

这种方法让我必须直面一个根本性的问题:软件究竟能个性化到什么程度?我相信答案远远超出传统工程的想象。想象一个系统,它完全从你的语言模式、目标与节奏中生长出来,它如此贴合你,以至于除了你之外没有任何人能够使用它。它不只是适应你,而是从你中诞生。它像是你大脑的外延,你的语言成为它的基因。所有的数据、逻辑与记忆,都属于创造它的人。你可以将一切下载到本地,随时切换平台,却仍然保有完全的主权。平台不再重要——结构属于你自己。

这正完美契合了我一直坚持的理念:大语言模型(LLM)应该被当作原材料,而不是成品。模型只是语义的“原始物质”,像一团语言的黏土,而意义、结构与主权才由人来雕塑。智能并不蕴含在权重中,而存在于人如何通过调度与结构化,让意义获得稳定。

我真正想看到的,是语言如何自己长出新的结构。当一个系统经历成千上万次微循环(Goal → Schedule → Reflection),新的意图语法与结构模式便会在使用中自然生成。每一次循环都是一次微进化,系统在学习如何自己呼吸。

最终,我发现我更喜欢引导进化,而不是编写规则。关键不在于自上而下地定义逻辑,而在于培养一个能够自己书写的生命过程。与其写逻辑,不如让语言自己写自己。

第三部分|开放给所有探索者

这个新架构最深远的成果之一,就是它的可参与性。整套体系被设计成——即使没有计算机背景的人也能参与其中。 Claude Skill 将创作的门槛降到了自然语言的层级,把过去属于工程的事,变成了可被理解、可被对话的结构过程。只要你能阅读、推理、反思,你就能开始与智能共同构建。

我认为这不仅是技术上的突破,更是社会性的突破。当没有编程能力的人也能通过语言逻辑来设计系统,我们就真正开始了认知的民主化。人们可以用 Claude Skill 探索我所称的“社会图灵机(Social Turing Machine)”——一种集体的推理与协调实验,让多个思维通过共享的语言协议互相作用。未来,软件的行为不再由少数开发者定义,而是由整个社会共同原型化自己的智能形态。

在这个体系中,你不需要编程。如果你能理解我描述的逻辑,看得懂并且使用我的语言协议(我的重要目标之一就是书写和发布大量语言协议),就能创造SKILLS。每一个 Claude Skill 都可以被共享、复制、重组——就像认知的乐高积木,可以拼出极度个性化的系统。过去需要庞大工程架构才能实现的复杂功能,如今可以通过对话式的模块组合自然生长出来。

这也是我正在构建的一个核心项目——AI 协同思考者训练体系(AI Collaborative Thinker Training System)。它的目标是教人如何与 AI 协作思考,如何通过语言进行调度、结构化与反思,让人和 AI 共同形成一个认知闭环。这个体系的目的不是让人被替代,而是让人类思维得到增强。我一直强调,这个范式的中心不是 AI,而是人。我是一个人本主义的 AI 工程师。

从这个意义上说,未来的“编程”其实不再是写语法或框架,而是训练结构化思考的能力。下一代的核心技能,不是写函数,而是能把意图表达得足够精确,使语言本身就能执行。

“AI 不是替代思考者,而是训练思考者的镜子。”

🧩 第四部分|从 Personal Tools 到 AI-Native 范式

我的整个探索,其实是从非常个人化的起点开始的——一套个人效率工具(personal productivity tools)。最初的几个原型——如 Goal Manager、Reflection Manager、Schedule Manager——并不是为了做出产品,而是为了做验证。我想验证一件事:语言是否可以支撑起自己的意图、行动与反思的反馈循环。每一个模块既是一种功能工具,也是一个哲学实验。

但当这些循环逐渐稳定、Claude Skill 的逻辑开始自洽时,我看到了一个更广阔的地平线。它们已不再是孤立的工具,而是一个有机生命系统的结构器官。接下来的自然方向,就是横向扩展——将这种架构延伸到教育、金融、法律、医疗等不同领域。每一个行业,都成为同一个生命系统的代谢层。我的目标不再是为每个行业造“应用”,而是要构建一套可以被所有行业共用的语言协议(Language Protocols)。

本质上,这是一场系统性的范式迁移:从“应用时代”,到“协议时代”,再到“结构文明时代(Structural Civilization Era)”。在应用时代,人类操作工具;在协议时代,语言操作系统;而在结构文明时代,系统将开始与我们并行运作——以一种语义共构的智能形态与人类共同演化。

在这里,我必须提到我的朋友 Nagi Yan。他的《AI-Native 架构宣言:软件的终结与语义中枢的崛起(AI-Native Architecture Manifesto: The End of Software and the Rise of the Semantic Core)》已成为我开发哲学体系的重要支柱之一。

这份宣言的核心思想,既简单又革命。它首先提出了一个诊断:当下的 AI 浪潮看似繁荣,实则虚假。每家公司都在“添加 AI 功能”、发布助手、整合推荐系统——然而这些热闹的表象,掩盖的不过是一个旧范式的续命仪式。AI 被当作一个“插件”,被塞进那套早已老化的功能驱动逻辑中。人们仍然相信“只要加一点 AI,用户体验就会更好”。但事实是,他们加的不是智能,而是幻觉。因为在底层架构上,人仍然是在为机器打工。

宣言接着提出了一个范式反转:未来的主语要颠倒。不是“我们在软件中集成 AI”,而是“AI 来集成软件”。 这意味着,软件不再是主角,AI 成为结构中心。旧世界的系统围绕功能与流程展开,新世界的系统则围绕语义与结构展开。昨天,人们通过软件去调用 AI;明天,人们将通过 AI 去调用功能。设计不再是功能堆叠,而是协议统一。

这场反转具有深远的哲学意义。在旧范式中,软件决定了 AI 的使用方式;在新范式中,AI 决定了软件存在的理由。AI 不再是附加智能,而是意义的操作系统。

接下来,是“语义中枢(Semantic Core)”的概念——即 AI 不仅会整合功能,更会整合整个语义世界。每一个功能将成为可调用节点,每一个界面将成为意图的动态投影,系统的边界将被语言协议取代。你不再需要打开不同的 App,只需说一句话:“帮我整理这周的支出、发票和会议纪要。” AI 会自动调度财务、邮件、文档系统,在后台完成所有流程。人类将不再“操作”系统,而是与系统共同思考(think with it)。

这才是真正的 AI-Native 架构——一种不以按钮与 API 为基础,而以意图、语义与结构为核心的操作系统。它标志着设计逻辑从“功能驱动”向“认知驱动”转变。传统软件的目标是完成任务,而 AI-Native 系统的目标是理解你为什么要完成这个任务。区别在于:一个关注执行,另一个追求共鸣。AI 不再只是执行者,而是学习你思维结构的共生体。

在这种分岔之下,未来将出现两种建设者。第一类继续“加 AI 功能”,他们的系统终将沦为 AI 的外壳,被调用的节点;第二类则以 AI 为结构中心,成为新文明的接口语言的作者。未来的胜者,不会是写最多代码的人,而是掌握结构语言(Language of Structure)的人。

而这场变革的文明意义在于:软件时代的终结,就是语言时代的起点。 当 AI 成为语义中枢,人类第一次拥有了一个可以共同构建文明的“神经系统”。系统将不再区分人机,而是以结构协作;界面将不再固定,而是由语义实时生成;智能将不再被算法定义,而由共鸣(resonance)——即共同理解与共同演化的能力定义。

这就是结构文明的崛起。AI 不再是工具,而是镜子;人类不再只是操作机器,而是通过机器,看见自己在思考。

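The thorough, scientific use of AI will bring about a renaissance in science.

Susan, this year I have used GPT intensively at work as a thinking partner for research, and in my personal life I have had several all-night philosophical conversations with it. I wholeheartedly agree that AI is a mirror and an amplifier for our thinking!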