Building a Living Open System — Where Every Conversation Becomes Structure, and Structure Becomes Intelligence

Designing Portable, Callable, and Composable Structures for the Next Generation of AI Decision-Making

I know this post won’t be easy to understand.

It isn’t meant to be.

What I’m writing here is not another reflection on AI hype —

it’s the beginning of a serious system architecture I’ve been building,

a personal framework for the post-LLM era.

After the shockwave that Large Language Models sent through the technical world,

I found myself unable to go back to the old logic of system design.

The way we build things — APIs, databases, interfaces — feels suddenly… outdated.

So I began to think:

what would a system look like if it were alive?

If it could breathe uncertainty instead of resisting it,

absorb entropy instead of fearing it,

and evolve instead of being recompiled?

That’s what this essay is about.

It’s immature, controversial, and perhaps heretical to many engineers I deeply respect.

But this is the only direction that makes sense to me now:

to build open systems — living ecosystems of structure and meaning.

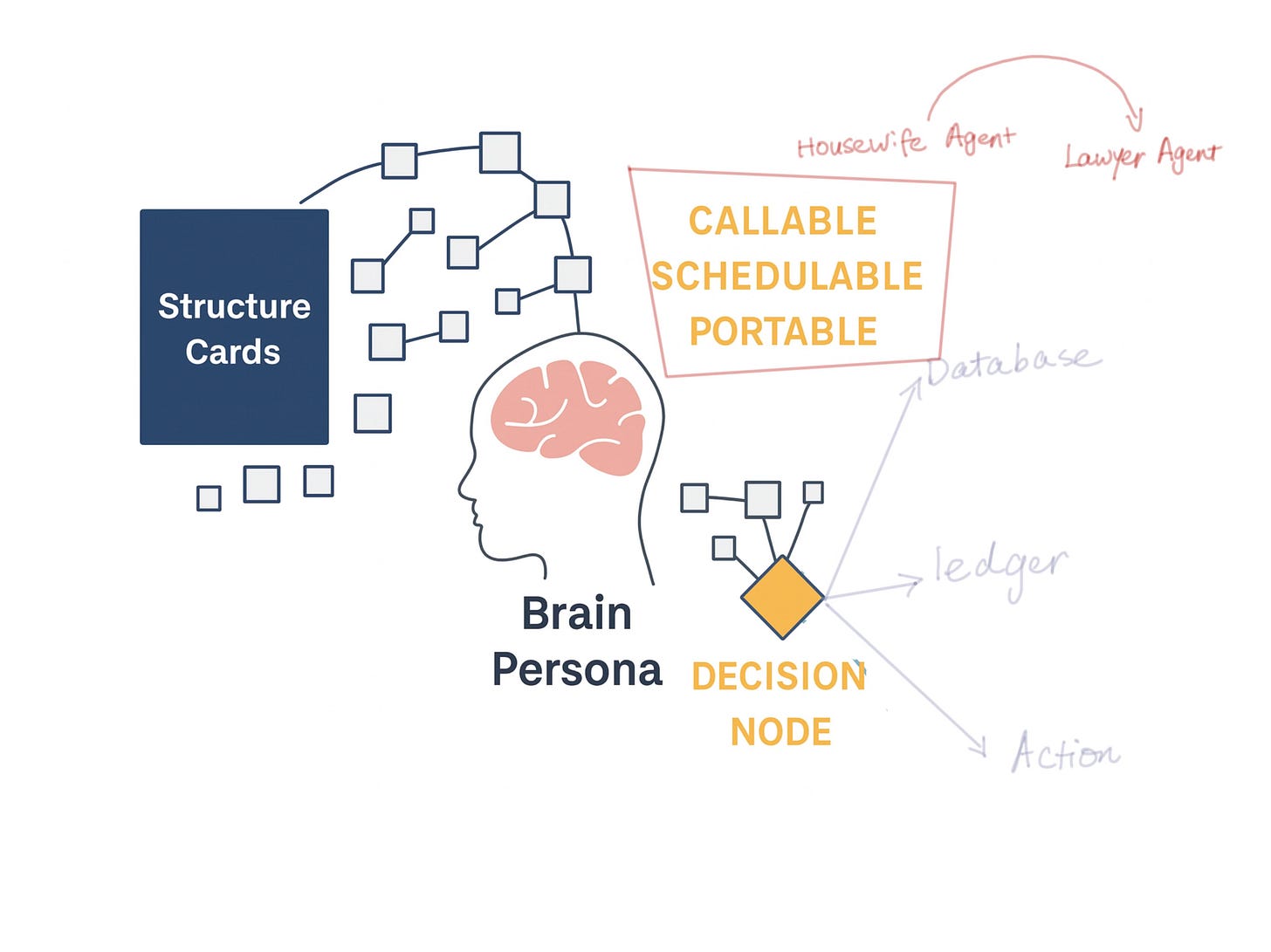

Some of the key concepts, like Primitive IRs or Structure Cards,

I will explain gradually on my website.

But the core idea begins here:

how we see the world,

how we understand information entropy,

and how we learn to control it.

I. From Engineering Machines to Engineering Life

There was a time when system engineering meant building machines — assemblies of fixed functions, predictable inputs, and measurable outputs. The goal was control; the method was closure. This worldview belonged to the Industrial and early Information Ages, when systems were bounded, their purposes predefined, their behaviors measurable. But as Stephen Wolfram proposed in A New Kind of Science, the deeper truth of computation is not control but emergence. Even the simplest rule, iterated across time, can give rise to patterns no engineer can predict. We call it complexity, but perhaps it is simply life under another name.

Over the past decades, complexity theorists such as Prigogine and Kauffman have shown that open systems do not achieve order by eliminating entropy; they survive by absorbing it. They live at the edge of chaos, exchanging predictability for adaptability, stability for evolution. In closed systems, error is something to correct; in open systems, error is how learning happens. The systems that persist — ecosystems, economies, languages — endure not by resisting change, but by reorganizing themselves in response to it. And now, for the first time, our computational systems are beginning to behave the same way.

Computation used to mean execution. You gave the machine instructions, and it followed them faithfully. But language models changed that. They do not follow instructions; they negotiate meaning. Each prompt becomes a dialogue; each response, a decision. Context flows, memory loops, intentions overlap. The machine doesn’t just compute — it participates. What we are witnessing is a shift from deterministic computation to recursive computation — a form of reasoning that sustains itself through feedback. Language itself has become a kind of computational matter: fluid, adaptive, and alive.

And once language gained the ability to act, our systems could no longer remain static. They had to become alive — not biologically, but structurally. To be alive, in this new sense, means to possess a kind of metabolism: the capacity to take in entropy, process it, and produce structure. This is the essence of the open-system worldview — that a system’s intelligence lies not in what it controls, but in what it can continue to transform without breaking. It is no longer a machine in the classical sense; it is an ecosystem, a living architecture that does not execute fixed logic but evolves through structure.

II. The Three Structural Layers of an Open System

Every living system, whether biological or computational, needs a way to perceive, think, and act.

In the architecture I’m developing, these three capacities are captured by a minimal triad —

the Language–Structure–Scheduler loop.

This is not a software stack in the traditional sense; it is a metabolic loop of cognition.

Language provides the raw material of thought,

structure organizes that material into executable form,

and scheduling gives it motion, timing, and life.

The Primitive IR — Translating Language into Primitives

At the base lies the perceptual layer: a field of Primitives (IRs) distilled from language.

They are the smallest semantic particles — entities, actions, obligations, events —

not yet acting, but ready to combine.

If language is energy, the Primitive IR is its quantum form.

Every open system begins with a simple act: translating a raw line of language into its Primitive IR — the smallest structural unit of meaning.

Imagine a sentence like:

“Schedule a maintenance check for the generator next Tuesday.”

When parsed through the Primitive IR lens, it compresses into:

Entity: Generator

Action: Schedule Maintenance

Event: Next Tuesday

Resource: Technician / Time Slot

Policy: Safety Protocol

Obligation: Perform Check

Each Primitive IR captures a distinct semantic role — who acts, what happens, when it happens, what it uses or follows, and under what condition or rule.

They are not instructions yet, but the building blocks of every possible instruction — the atomic scaffolding of structure from which cognition and action emerge.
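To make the shape of a Primitive IR concrete, here is a minimal Python sketch of the generator example. It is an illustration under assumptions, not the actual format used by the system: the names `Role`, `Primitive`, `generator_ir`, and `by_role` are hypothetical, and the list is written by hand where a real pipeline would derive it from language.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Semantic roles a primitive can carry (hypothetical encoding)."""
    ENTITY = "Entity"
    ACTION = "Action"
    EVENT = "Event"
    RESOURCE = "Resource"
    POLICY = "Policy"
    OBLIGATION = "Obligation"
    CONDITION = "Condition"  # part of the essay's fuller list; unused below


@dataclass(frozen=True)
class Primitive:
    role: Role
    value: str


# Hand-written IR for: "Schedule a maintenance check for the generator
# next Tuesday." The parsing step itself is not shown here.
generator_ir = [
    Primitive(Role.ENTITY, "Generator"),
    Primitive(Role.ACTION, "Schedule Maintenance"),
    Primitive(Role.EVENT, "Next Tuesday"),
    Primitive(Role.RESOURCE, "Technician / Time Slot"),
    Primitive(Role.POLICY, "Safety Protocol"),
    Primitive(Role.OBLIGATION, "Perform Check"),
]


def by_role(primitives, role):
    """Return the values of all primitives that carry the given role."""
    return [p.value for p in primitives if p.role is role]
```

Calling `by_role(generator_ir, Role.ENTITY)` returns the entities in the sentence. Keeping each primitive immutable is a design choice in this sketch: primitives are treated as facts extracted from language, and anything that changes lives in the layers above them.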

Over time, I’ve realized that no matter the domain — finance, law, medicine, education, manufacturing, or even family logistics — the same set of primitives keeps reappearing.

A contract clause, a medical order, a lesson plan, a purchase request — strip away the jargon, and each can be expressed through a small constellation of 5–7 core primitives:

Entity, Action, Event, Resource, Policy, Obligation, Condition.

Different industries give them different names, but structurally they describe the same grammar of reality.

They are the universal coordinates through which any system — human or machine — can interpret, remember, and act.

The Primitive IR is not just a data format; it is the grammar of existence, the point where language becomes structure and structure becomes computation.

Across every case, the same primitives encode the structure of reality: who acts, what they do, when and under what rule, using which resources, toward what obligation.

Once language is reduced to these recurring forms, it becomes interoperable — not as data, but as living structure.

This is the quiet universality beneath all systems:

the world speaks different tongues,

but it thinks in the same primitives.

The Structure Card: The Atom of Judgment

Imagine, for a moment, yourself — all your experience, all your memories, all your expertise — broken down into the smallest pieces of cognition.

Every decision you make, from the simplest to the most profound, is shaped by these invisible units of reasoning.

Whether you are a lawyer drafting a contract, a doctor diagnosing a patient, a teacher preparing tomorrow’s class, or a parent managing a household budget — you are not acting from chaos.

You are assembling structures.

Each of us carries millions of these small cognitive “Legos.”

StructureCard:

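Name: # name

Goal: # goal

Input: # Primitive IRs received, or output from an upper layer

Mechanism: # internal logic or algorithm

Condition: # trigger or constraint conditions

Output: # output structure (may flow back or propagate onward)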

They are how we make sense of the world:

tiny self-contained circuits of thought that link what we see, what we know, and what we decide.

They are the reason your mind can jump from perception to judgment without consciously writing code.

Think about a moment as simple as paying a bill.

You glance at the amount, recall your current budget, weigh your priorities, and make a decision: yes, pay it; it’s within the limit.

That entire reasoning process could be written as:

Goal: Maintain financial stability

Input: Bill amount, current budget, payment due date

Condition: If amount < budget and priority = high

Mechanism: Approve payment

Output: Bill paid, account updated

That is a Structure Card.

You didn’t write it down, but your brain executes it perfectly.
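The bill-paying card can be sketched as a small callable object. This is one possible encoding, assuming a card is a condition plus a mechanism over a dictionary of inputs; the class `StructureCard`, its `run` method, and the helper functions are hypothetical names, and the payment due date from the card is left out to keep the example short.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict

Inputs = Dict[str, Any]


@dataclass
class StructureCard:
    name: str
    goal: str
    condition: Callable[[Inputs], bool]    # trigger or constraint
    mechanism: Callable[[Inputs], Inputs]  # internal logic

    def run(self, inputs: Inputs) -> Inputs:
        """Apply the mechanism only when the condition holds."""
        if not self.condition(inputs):
            return {"status": "skipped", "reason": "condition not met"}
        return self.mechanism(inputs)


def within_budget(inputs: Inputs) -> bool:
    # Mirrors "If amount < budget and priority = high".
    return inputs["amount"] < inputs["budget"] and inputs["priority"] == "high"


def approve_payment(inputs: Inputs) -> Inputs:
    # Output: bill paid, account updated.
    return {
        "status": "paid",
        "remaining_budget": inputs["budget"] - inputs["amount"],
    }


pay_bill = StructureCard(
    name="PayBill",
    goal="Maintain financial stability",
    condition=within_budget,
    mechanism=approve_payment,
)

result = pay_bill.run({"amount": 120, "budget": 500, "priority": "high"})
# result == {"status": "paid", "remaining_budget": 380}
```

If the amount exceeds the budget, `run` returns a `skipped` status instead of raising an error, so a caller can treat "the card did not apply" as ordinary information.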

It’s the same process when a lawyer checks if a clause violates a regulation,

when a nurse confirms a dosage,

or when a teacher adjusts a lesson plan for a student’s pace.

Each is running the same cognitive pattern, goal → input → mechanism → output,

the same invisible grammar of judgment.

This is why I call Structure Cards the abstract layer of cognition.

They are the functional form of knowing,

the bridge between memory and action,

the way intelligence sustains itself over time.

A human mind contains millions of them — continuously refined, combined, and reused.

An open system aims to make this implicit process explicit:

to turn lived cognition into callable structure,

to translate judgment into form,

and to let language itself perform the same dance between intention and decision.

Structure Cards are not an invention; they are a recognition.

Every human already thinks this way — the system simply makes it visible.

The Essence of Entropy Control

Here lies the essence of what I call Entropy Control Theory — the belief that cognition itself can be structured, that intelligence is not a monolith but a living network of portable, callable, and composable structures.

Think of what it means to be human: you are portable — able to move between contexts, bringing your knowledge wherever it’s needed; you are callable — when life or others summon you, you respond; and you are composable — layering your experiences, coordinating priorities, weaving tasks into the rhythm of time. This is how you manage your life, your work, your relationships — not through chaos, but through living structures of cognition.

A lawyer drafting a contract in the morning and arguing in court in the afternoon, a parent cooking dinner while paying bills and helping a child with homework — both embody the same principle: structures that can be called, reused, and recombined without losing coherence.

In the same way, an intelligent system need not be a single mind, but a constellation of such living structures — each a Structure Card, a self-contained piece of executable thought. Together they form a living architecture of intelligence, a world where language becomes life, and knowledge is no longer stored but performed. Entropy Control is not about freezing order; it is about designing structures that survive motion — forms that remain coherent even as the world keeps changing. This, to me, is the frontier of intelligence: not how much we know, but how well our structures can move, merge, and endure.
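The three properties can be illustrated in a few lines of Python, with the caveat that this is a toy reading of them and not the system's design. Here a card is assumed to be a plain function from a state dictionary to a new state dictionary: portable because it depends on nothing outside its input, callable because it is a function, and composable through the hypothetical helper `compose`.

```python
from functools import reduce


def compose(*cards):
    """Chain cards so that each card's output state feeds the next card."""
    def chained(state):
        return reduce(lambda acc, card: card(acc), cards, state)
    return chained


def check_budget(state):
    # Adds a judgment to the state without mutating the caller's dict.
    return {**state, "affordable": state["amount"] < state["budget"]}


def decide_payment(state):
    decision = "pay" if state["affordable"] else "defer"
    return {**state, "decision": decision}


review_bill = compose(check_budget, decide_payment)
outcome = review_bill({"amount": 80, "budget": 200})
# outcome["decision"] == "pay"
```

Because each card returns a new dictionary instead of editing the one it received, the same card can be reused in another chain without hidden side effects.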

The Dispatcher / Runtime — Acting in the World

At the top, the Dispatcher (the scheduler in the Language–Structure–Scheduler loop) acts as the system’s metabolism.

It determines which cards to activate, how new primitives merge with context,

and how the whole network balances feedback, error, and growth.

A closed runtime executes code;

an open runtime sustains life —

a continuous negotiation between order and chaos, signal and noise, intention and entropy.

Together, these three layers form a minimal open architecture —

a loop that perceives through primitives, thinks through structure,

and acts through scheduling.

It is not a machine that completes tasks;

it is a living system that endures change.
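A minimal sketch of such a dispatcher, under simplifying assumptions: each card is a `(name, condition, mechanism)` tuple, context is a single dictionary, and every card whose condition holds is run in list order. The class name `Dispatcher`, the `step` method, and the two example cards are hypothetical, and real scheduling (priorities, timing, conflict handling) is not modeled.

```python
class Dispatcher:
    def __init__(self, cards, context=None):
        self.cards = cards                  # list of (name, condition, mechanism)
        self.context = dict(context or {})  # shared, evolving context

    def step(self, signal):
        """Merge one incoming signal into context, then run triggered cards."""
        self.context.update(signal)
        fired = []
        for name, condition, mechanism in self.cards:
            if condition(self.context):
                # A card's output flows back into context, so later cards
                # in the same step can react to it.
                self.context.update(mechanism(self.context))
                fired.append(name)
        return fired


cards = [
    (
        "approve_payment",
        lambda c: "amount" in c and c["amount"] < c["budget"] and not c.get("paid"),
        lambda c: {"paid": True, "budget": c["budget"] - c["amount"]},
    ),
    (
        "raise_alert",
        lambda c: c.get("budget", 0) < 100,
        lambda c: {"alert": "budget low"},
    ),
]

dispatcher = Dispatcher(cards, context={"budget": 500})
fired = dispatcher.step({"amount": 450})
# fired == ["approve_payment", "raise_alert"]
```

In this run the first card's output lowers the budget, which in turn triggers the second card within the same step. That cascade is the simplest form of the feedback the section describes.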

III. The Art of Balance: Absorbing Entropy without Collapse

Every open system lives with a paradox:

to remain alive, it must exchange energy and information with its environment —

but every exchange increases entropy.

Too closed, and it dies of rigidity.

Too open, and it dissolves into noise.

Life — whether biological or cognitive — exists between these two extremes.

Its intelligence lies not in avoiding disorder,

but in learning how to metabolize it.

An open cognitive system, therefore, must treat entropy not as an enemy but as a resource.

It survives by transforming uncertainty into structure —

just as living cells transform energy into form.

I am designing a household management system for moms, so let’s use that as the running example from here on:

Structural Absorption

Each Structure Card functions like a semi-permeable membrane:

it allows meaning to flow in, filters ambiguity, and converts it into structured insight.

When new language or data arrives — uncertain, incomplete, or even contradictory —

the card doesn’t reject it; it absorbs it and reorganizes its boundaries.

In biological terms, this is metabolism;

in cognitive terms, it is structural absorption —

the system’s ability to grow by digesting entropy.

A rigid rule collapses under contradiction;

a living structure bends, updates, and learns.

Entropy is not eliminated. It is digested —

transformed from noise into nourishment.

Example

Imagine a mother preparing dinner while juggling a dozen uncertainties: one child suddenly announces a school project due tomorrow, another complains of being hungry early, the grocery delivery is delayed, and her spouse messages that a guest might join for dinner.

To a rigid system, this chaos would cause failure: too many conflicting inputs, no fixed rule to follow. But her cognition doesn’t collapse; it absorbs. Instantly, she filters the noise: What’s urgent? What’s flexible? What can be delayed? She reshapes the plan: moves the meal thirty minutes earlier, fits a quick round of project help in before dinner, substitutes missing ingredients. Nothing was predicted, yet order emerges.

That entire decision process is a Structure Card in action. And yes, the system I am designing takes in natural, yelled-across-the-house language like this: “Mom, we’re out of eggs!”, “I have homework!”, “Guest’s coming!” It digests that raw, ambiguous language into structure:

Context:

Dinner preparation begins under normal conditions — stable context, no interruptions.

Goal: Prepare balanced dinner for family by 6:30 PM

Input: Ingredients (chicken, vegetables, rice), family schedule

Mechanism: Follow planned recipe and timing

Condition: All ingredients available, schedule unchanged

Output: Dinner served on time

At this stage, the card operates like a closed, well-defined function.

Entropy is low; no new data challenges its structure.

🌪️ Incoming Entropy (Feedback / New Signals)

Suddenly, three unexpected inputs arrive:

Child A: “We’re out of eggs!”

Child B: “I need help with my project before dinner!”

Spouse: “A guest might come tonight.”

These are unstructured linguistic signals — uncertain, possibly conflicting.

The original card now faces contradiction between goal and available state.

A rigid process would fail here.

A living card absorbs.

🌱 Structure Card: After Absorption and Reorganization

Goal: Maintain family mealtime harmony under changing constraints

Input: Current pantry state, new schedule signals, guest variable

Mechanism:

- Substitute missing ingredients (use tofu instead of eggs)

- Adjust meal time to 6:00 PM

- Assign quick pre-dinner homework help to 5:30 PM

Condition: Guest confirmed or not by 5:45 PM

Output: Adapted dinner plan executed, stress minimized

Feedback: Record substitution & schedule change to Ledger for future planning

Through structural absorption, the card has restructured itself:

Its Goal broadened (from “cook dinner” to “maintain mealtime harmony”).

Its Mechanism changed (recipe substitution, temporal rescheduling).

Its Condition incorporated new uncertainty (guest variable).

Its Output now includes a feedback trace for future adaptation.

Entropy didn’t destroy the system; it expanded its intelligence.

The feedback captured in the Ledger becomes context for the next iteration —

next time, the system anticipates uncertainty earlier.

Every adaptive act leaves behind a structure —

proof that learning is not repetition, but recomposition.
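The before-and-after dinner cards can be sketched as a pure function that returns a revised card and writes to a ledger. This is a hedged illustration only: the signal names (`out_of_eggs`, `homework_help`, `guest_maybe`), the `DinnerCard` class, and the hard-coded rules in `absorb` are assumptions standing in for whatever the real system would infer from language.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class DinnerCard:
    goal: str
    dinner_time: str
    mechanism: Tuple[str, ...]
    condition: str


def absorb(card, signals, ledger):
    """Return a revised card; append one ledger line per absorbed signal."""
    steps = list(card.mechanism)
    changes = {}
    if "out_of_eggs" in signals:
        steps.append("Substitute tofu for eggs")
        ledger.append("substitution: tofu for eggs")
    if "homework_help" in signals:
        steps.append("Quick homework help at 5:30 PM")
        changes["dinner_time"] = "6:00 PM"
        ledger.append("schedule: dinner moved to 6:00 PM")
    if "guest_maybe" in signals:
        changes["condition"] = "Guest confirmed or not by 5:45 PM"
        ledger.append("open question: guest attendance")
    if len(steps) > len(card.mechanism) or changes:
        # Any absorbed signal broadens the goal, as in the example above.
        changes["goal"] = "Maintain family mealtime harmony under changing constraints"
    return replace(card, mechanism=tuple(steps), **changes)


original = DinnerCard(
    goal="Prepare balanced dinner for family by 6:30 PM",
    dinner_time="6:30 PM",
    mechanism=("Follow planned recipe and timing",),
    condition="All ingredients available, schedule unchanged",
)

ledger = []
revised = absorb(original, {"out_of_eggs", "homework_help", "guest_maybe"}, ledger)
```

The original card is left untouched and the revision is a new object, so both versions remain available for comparison, and the ledger entries can serve as context for the next iteration.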

The Boundary of Change

A Structure Card can adapt, iterate, and evolve — it can reorganize its goals, adjust its mechanisms, even rewrite its own internal logic in response to new entropy.

But it cannot change who it is.

Its core identity — the underlying purpose that defines its role in the system — remains constant.

Just as a person can learn new habits without ceasing to be themselves, a card can transform through feedback while preserving its essential structure.

This is the secret of continuity in open systems: change without disintegration, evolution without identity loss.
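One way to express this boundary in code, as a sketch and not a claim about how the system enforces it: keep the card's identity read-only while letting goal and mechanism change. The class `EvolvingCard`, its `evolve` method, and the `version` counter are hypothetical names chosen for illustration.

```python
class EvolvingCard:
    def __init__(self, identity, goal, mechanism):
        self._identity = identity
        self.goal = goal
        self.mechanism = mechanism
        self.version = 1

    @property
    def identity(self):
        # Read-only: no setter is defined, so assignment raises AttributeError.
        return self._identity

    def evolve(self, goal=None, mechanism=None):
        """Update the mutable parts and record that an iteration happened."""
        if goal is not None:
            self.goal = goal
        if mechanism is not None:
            self.mechanism = mechanism
        self.version += 1
        return self


card = EvolvingCard(
    identity="family-dinner",
    goal="Prepare balanced dinner for family by 6:30 PM",
    mechanism="Follow planned recipe and timing",
)
card.evolve(goal="Maintain family mealtime harmony under changing constraints")

try:
    card.identity = "something-else"
    identity_changed = True
except AttributeError:
    identity_changed = False
```

After this runs, the goal has been rewritten and the version has advanced, but the attempt to reassign `identity` is rejected.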

In later articles, I’ll explore this more deeply — how Structure Cards form Structure Chains, how those chains evolve, merge, and mature across iterations, and how the system as a whole maintains coherence even as every part is learning and reshaping itself.

Growth is not the abandonment of form,

but the deepening of identity through iteration.

🏠 My First Living System

I am now building my first open system — a household management AI agent.

It may sound humble, but it’s where the theory becomes real.

Inside a home, entropy is constant: meals shift, schedules collide, moods change.

And yet, the home always finds its rhythm again.

So I begin here — with the most human form of complexity.

The architecture I’m using, however, is not domestic at all;

it’s the same framework I believe will one day govern every field of human decision-making —

the true open system for AI, for business, for life.

It all starts with natural language.

Imagine the children and the parents talking to this system as they do in the living room:

“Mom, I’m not home for dinner.”

“Darling, I need a new pair of socks for work tomorrow.”

Each sentence is a fragment of entropy — unplanned, emotional, alive.

The central mind of the house listens, absorbs, translates.

It doesn’t command; it composes — adjusting plans, re-balancing tasks, allocating attention.

It learns like the best housewife in the world: through empathy, rhythm, and endless iteration.

Over time, this domestic AI begins to metabolize entropy —

to transform the noise of daily life into coherence,

the unpredictability of people into structure that serves them.

Slowly, it becomes the living brain of the household —

not controlling life, but orchestrating it.

This is how I see the future of intelligence:

systems that breathe uncertainty without breaking,

that stay coherent while the world keeps changing.

What begins in a kitchen could scale to cities, to companies, to civilization itself.

Structures like these allow a system, human or artificial,

to stay coherent in motion,

to remain structured in the storm.

Life is not the absence of entropy.

It is the mastery of it through structure.