From Language to Structure: The Universal Substrate of Intelligence

How Primitive IRs Bridge Human Intention and Machine Execution

Treating Natural Language as Raw Material

In my framework, all natural language — whether generated by humans or by LLMs — is treated as raw material: dense with energy, possibility, and meaning, yet inherently messy and filled with countless parallel paths.

If AI is to be used seriously, whether for decision-making, for business management, or as a digital twin of an organization’s complex chain of command, this raw linguistic energy must first be refined.

Natural language needs to be compressed into a structural layer: an Intermediate Representation, which I call the Primitive IR — a format that casts all language into executable, interpretable structures.

All natural language is lively — yet boundless.

That’s the energy of human language:

a sea without edges, always flowing, always generating.

Every day, new words, new meanings, new combinations emerge.

No two sentences are ever truly the same — even when they sound alike.

Language is the perpetual motion of the human mind —

infinite in form, recursive in meaning,

constantly weaving new patterns of thought.

It is this endless generativity — this open horizon of expression —

that makes language both our greatest power and our greatest entropy.

Do you think the business world is any different?

Even the most profitable companies — the ones that appear perfectly organized from the outside — are, on the inside, oceans of language entropy.

Every day, every minute, the office hums with unstructured communication: meetings, emails, chats, notes, reports — waves of words overlapping and colliding.

Believe it or not, nearly 80% of routine office work, even in the best companies, is simply the act of translating unstructured language into structured form — into spreadsheets, reports, workflows, and, yes, even code.

When instructions from upper management are finally turned into executable commands, that’s translation.

And few realize that most junior programmers are, in truth, linguistic translators — converting the messiness of human intention into structured logic that machines can execute.

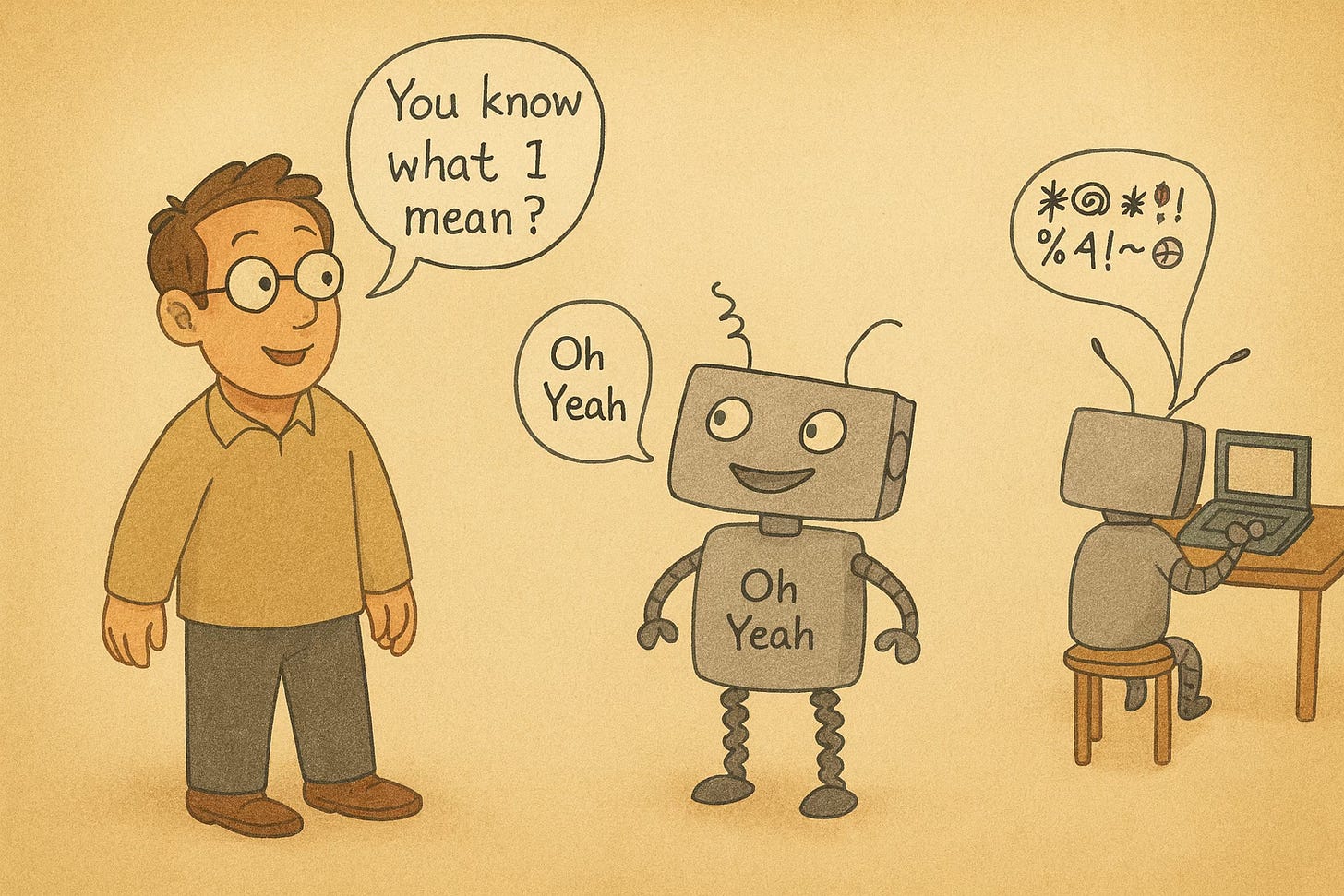

Now, one invention has dramatically transformed our world —

the Large Language Model (LLM).

For the first time in history, machines can truly understand natural language — and even respond in it.

They speak our tongue, seamlessly switching between English, Chinese, Japanese,

and even programming languages like Python, Java, or COBOL.

It’s incredible.

Overnight, it feels as if the world no longer needs translators.

Humans and machines can finally “talk.”

Every barrier of syntax, grammar, and form seems to dissolve.

But beneath this miracle of fluency lies a new kind of challenge —

understanding is not yet structure.

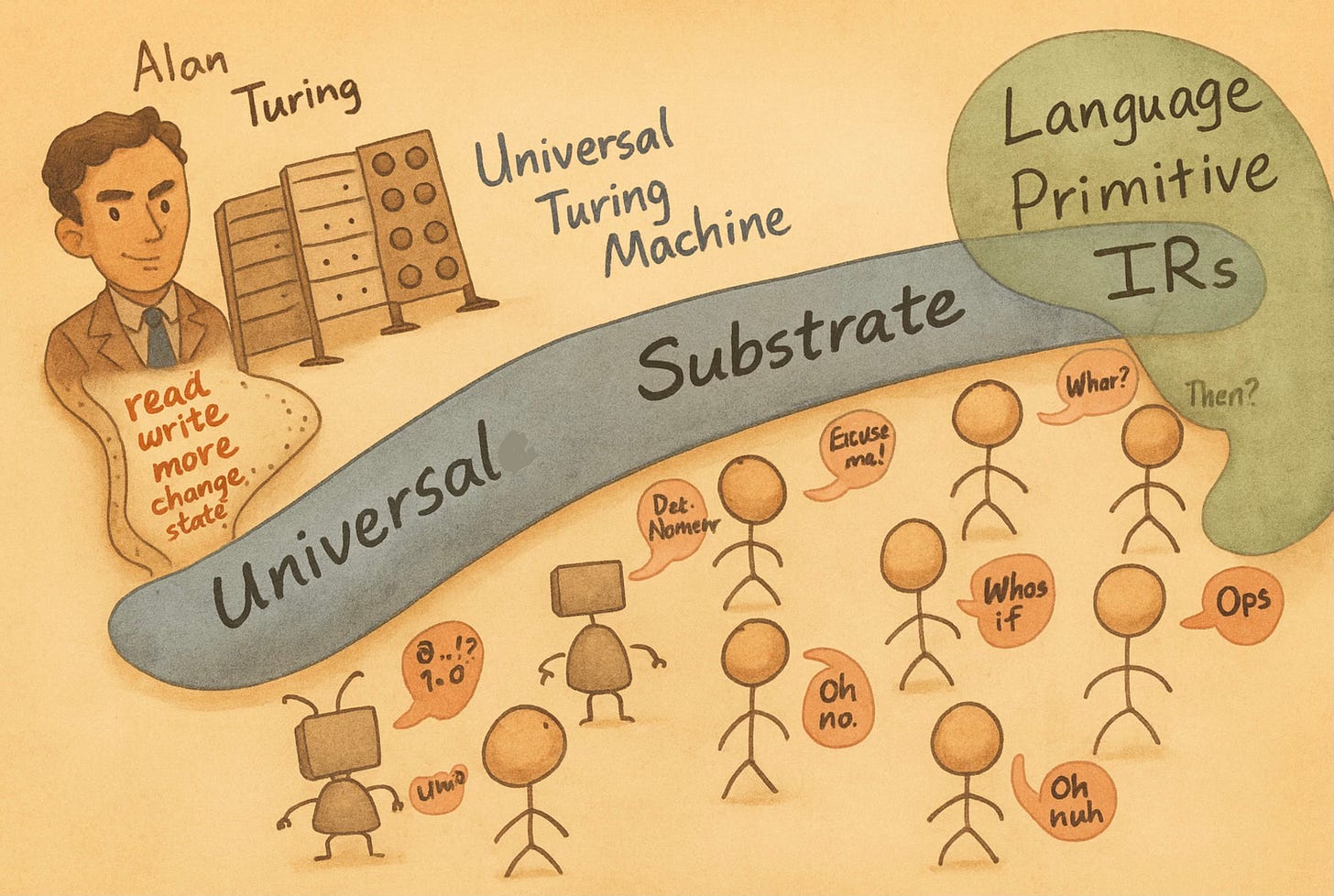

Just as Turing once reduced all computation to four primitives — read, write, move, change state —

I believe that all language-based intelligence can also be grounded in a small set of structural primitives.

Every “Can you send me the report?”

Every “Book a meeting with the team.”

Every “If revenue drops, trigger Plan B.”

All of them share the same hidden grammar of intention and action:

Entity — the actor, the “who.”

Resource — the object, the “what.”

Event — the temporal change, the “when.”

Obligation — the commitment or rule, the “must.”

Action — the executable task, the “doing.”

Policy — the condition-to-action logic, the “if–then.”

Ledger — the record of outcome, the “what happened.”

These seven primitives form the foundation of structured meaning — the minimal building blocks from which any human or machine instruction can be composed.
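To make the seven primitives concrete, here is a minimal sketch of a single Primitive IR record as a Python dataclass. It is one possible encoding, not a fixed specification: the class name `PrimitiveIR`, the field names, the use of plain strings, and the `missing()` helper are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class PrimitiveIR:
    """One instruction decomposed into the seven primitives (hypothetical schema)."""
    entity: str                   # who acts
    resource: str                 # what is acted on
    event: Optional[str]          # when: a trigger or deadline, if any
    obligation: Optional[str]     # must: the commitment or rule, if any
    action: str                   # doing: the executable task
    policy: Optional[str]         # if-then: condition-to-action logic, in words
    ledger: list[str] = field(default_factory=list)  # what happened: outcome records

    def missing(self) -> list[str]:
        """Names of the optional primitives that the source sentence left unspecified."""
        return [name for name in ("event", "obligation", "policy")
                if getattr(self, name) is None]


# "Can you send me the report?" states no deadline, rule, or condition.
request = PrimitiveIR(
    entity="colleague",
    resource="report",
    event=None,
    obligation=None,
    action="send report to requester",
    policy=None,
)
print(request.missing())  # ['event', 'obligation', 'policy']
```

Representing absent primitives explicitly is the useful part: a casual request like this one turns out to carry no deadline, rule, or condition, and the structure makes that gap visible instead of leaving it implicit.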

Once language is expressed through these primitives,

we can finally bridge human intention and machine execution —

not through endless prompting, but through Primitive IRs —

a unified layer where language becomes structure,

and structure becomes intelligence.
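How does a sentence actually become structure? The extraction method is left open here, so as a deliberately tiny stand-in (not a real parser, and not the Primitive IR pipeline itself), the sketch below pulls the Policy primitive out of sentences shaped like "If X, Y." The regular expression, the `Policy` class, and `extract_policy` are hypothetical; anything outside that one pattern returns `None`.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Policy:
    condition: str  # the "if" half
    action: str     # the "then" half


# Hypothetical stand-in for a real extractor: matches only "If <condition>, [then] <action>."
IF_THEN = re.compile(
    r"^\s*if\s+(?P<cond>[^,]+),\s*(?:then\s+)?(?P<act>.+?)\.?\s*$",
    re.IGNORECASE,
)


def extract_policy(sentence: str) -> Optional[Policy]:
    """Return a Policy if the sentence has the simple if-then shape, else None."""
    match = IF_THEN.match(sentence)
    if match is None:
        return None
    return Policy(condition=match.group("cond").strip(),
                  action=match.group("act").strip())


print(extract_policy("If revenue drops, trigger Plan B."))
# Policy(condition='revenue drops', action='trigger Plan B')
print(extract_policy("Book a meeting with the team."))
# None
```

Real instructions rarely follow one pattern this neatly; the point is only the target shape, with the condition and the action held as separate, inspectable fields.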

Let’s take some examples, showing how the everyday language of three different professions can be translated into Primitive IRs:

⚖️ Lawyer

Sentence: “Send the signed contract to the client before 5 PM.”

Entity: Paralegal (who sends it)

Resource: The contract (what)

Event: 5 PM deadline (when)

Obligation: Contract must be delivered (must)

Action: Send the document (doing)

Policy: If signed, then deliver (if–then)

Ledger: Email record confirming delivery (what happened)
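The lawyer example can be read as a tiny state machine: the Policy gates the Action, the Obligation is measured against the Event’s deadline, and every outcome lands in the Ledger. This is a minimal sketch under stated assumptions: the class `ContractDelivery`, its field names, the concrete date, and recording a ledger line in place of actually sending an email are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class ContractDelivery:
    """Lawyer example as a Primitive IR (hypothetical field names)."""
    entity: str = "paralegal"                             # Entity: who sends it
    resource: str = "contract"                            # Resource: what is sent
    deadline: datetime = datetime(2024, 1, 15, 17, 0)     # Event: 5 PM (date is made up)
    signed: bool = False                                  # state the Policy checks
    ledger: list[str] = field(default_factory=list)       # Ledger: what happened

    def run(self, now: datetime) -> None:
        """Policy: if signed, then deliver; the Obligation is checked against the deadline."""
        if not self.signed:
            self.ledger.append(f"{now:%H:%M} held: contract not signed")
            return
        # Action: send the document (stubbed here as a ledger entry, no real email)
        status = "on time" if now <= self.deadline else "LATE"
        self.ledger.append(
            f"{now:%H:%M} {self.entity} sent {self.resource} to client ({status})")


task = ContractDelivery()
task.run(datetime(2024, 1, 15, 14, 0))   # not yet signed: Policy blocks the Action
task.signed = True
task.run(datetime(2024, 1, 15, 16, 30))  # signed, before 5 PM
print(task.ledger)
# ['14:00 held: contract not signed', '16:30 paralegal sent contract to client (on time)']
```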

🩺 Doctor

Sentence: “If the patient’s temperature stays above 102°F, start the antibiotic treatment.”

Entity: Nurse (who acts)

Resource: Patient’s medical record (what)

Event: Temperature stays above 102°F (when)

Obligation: Must follow treatment protocol (must)

Action: Administer antibiotic (doing)

Policy: If temperature stays above 102°F, then treat (if–then)

Ledger: Updated patient chart with medication log (what happened)
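"Stays above" does real work in the doctor’s sentence: a single high reading is not the same event as a sustained one. The sketch below encodes the Policy as "the last N readings all exceed 102°F" and fires the Action at most once. The value N = 3, the class `FeverProtocol`, and its field names are hypothetical; the sentence itself does not say how long "stays" is.

```python
from dataclasses import dataclass, field

THRESHOLD_F = 102.0      # from the instruction: "above 102°F"
SUSTAINED_READINGS = 3   # hypothetical: consecutive readings that count as "stays above"


@dataclass
class FeverProtocol:
    """Doctor example as a Primitive IR with a sustained-threshold Policy."""
    entity: str = "nurse"                                  # Entity: who acts
    readings: list[float] = field(default_factory=list)    # Event stream, in °F
    treated: bool = False
    ledger: list[str] = field(default_factory=list)        # Ledger: chart entries

    def record(self, temp_f: float) -> None:
        self.readings.append(temp_f)
        self.ledger.append(f"temp {temp_f:.1f}F")
        recent = self.readings[-SUSTAINED_READINGS:]
        # Policy: fire only once, and only if the last N readings are all above threshold
        if (not self.treated and len(recent) == SUSTAINED_READINGS
                and all(t > THRESHOLD_F for t in recent)):
            self.treated = True  # Action: administer antibiotic (stubbed)
            self.ledger.append(f"{self.entity}: antibiotic started")


chart = FeverProtocol()
for t in [101.5, 102.4, 102.8, 103.1]:
    chart.record(t)
print(chart.ledger)
# ['temp 101.5F', 'temp 102.4F', 'temp 102.8F', 'temp 103.1F', 'nurse: antibiotic started']
```

The third reading does not trigger treatment because the window still includes 101.5°F; only the fourth reading completes three consecutive values above the threshold.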

💼 Accountant

Sentence: “Generate the monthly revenue report and send it to finance by Friday.”

Entity: Accountant (who)

Resource: Financial data (what)

Event: Friday deadline (when)

Obligation: Report must be submitted (must)

Action: Generate and send report (doing)

Policy: If month-end, then compile data (if–then)

Ledger: Report archived in company system (what happened)
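The accountant example hinges on two date rules: recognizing month-end (the Policy trigger) and resolving "by Friday" to a concrete date (the Event). Here is a minimal sketch using only Python’s standard library. Reading "by Friday" as "the first Friday on or after month-end" is an assumption, and the function names are hypothetical.

```python
import calendar
from datetime import date, timedelta

FRIDAY = 4  # date.weekday() numbering: Monday == 0 ... Friday == 4


def is_month_end(day: date) -> bool:
    """Policy trigger: True on the last calendar day of the month."""
    return day.day == calendar.monthrange(day.year, day.month)[1]


def friday_deadline(day: date) -> date:
    """Event: the first Friday on or after `day` (an assumed reading of 'by Friday')."""
    days_ahead = (FRIDAY - day.weekday()) % 7
    return day + timedelta(days=days_ahead)


def monthly_report(day: date, ledger: list[str]) -> None:
    """Policy: if month-end, then compile the data and schedule the report."""
    if not is_month_end(day):
        return
    due = friday_deadline(day)
    # Action: generate and send (stubbed); Ledger: archive a record of it
    ledger.append(f"{day:%Y-%m} revenue report compiled; due to finance {due.isoformat()}")


ledger: list[str] = []
for d in (date(2024, 6, 29), date(2024, 6, 30)):
    monthly_report(d, ledger)
print(ledger)
# ['2024-06 revenue report compiled; due to finance 2024-07-05']
```

Note what the structure exposes: June 30, 2024 fell on a Sunday, so "by Friday" lands in the following month, an ambiguity the original sentence hides.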

No matter the field — law, medicine, or finance —

every professional instruction ultimately breaks down into the same primitive structure.

This is how language becomes executable,

and how intelligence — human or machine — can share one universal substrate of meaning.

When Turing discovered the universal basis of computation, he wasn’t just describing machines —

he was uncovering the hidden order behind all processes of reasoning.

Today, as Large Language Models flood the world with continuous meaning,

we stand at a similar threshold.

To move from language understanding to language execution,

we need a new kind of substrate — one that discretizes thought itself.

That’s what the Primitive IR is:

a universal layer where meaning becomes structure,

and structure becomes action.

From there, Structure Cards emerge —

the living functions of the mind —

callable, combinable, and evolving through time.

It’s not about replacing human intelligence,

but extending it —

giving our words form,

our thoughts continuity,

and our intelligence a framework that can finally live across both humans and machines.

The next leap of intelligence will not come from bigger models,

but from structured minds.