LLM as a Product or LLM as a Raw Material?

Why this choice will decide whether we stay in the app era — or step into the Protocol Civilization

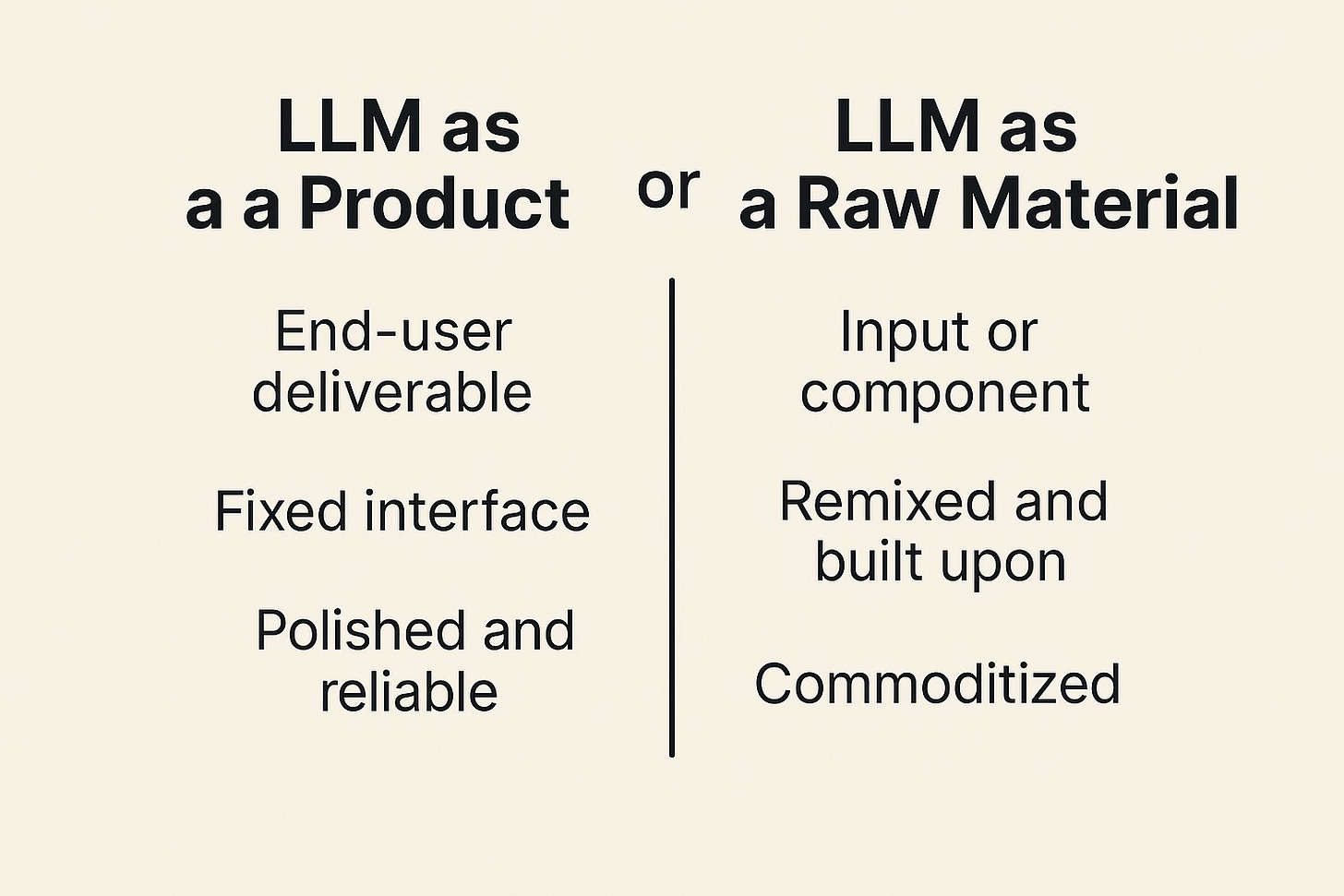

There will come a day when we face a deceptively simple question: Is a large language model a finished product, or is it raw material?

The answer sounds technical, but it carries civilizational weight.

Treat it as a product, and we stay in the world of apps and platforms — convenient, polished, but bounded.

Treat it as raw material, and suddenly we are talking about infrastructure, protocols, and the long, messy work of building something as foundational as electricity or the internet.

The Crossroads

We stand at a crossroads. On one side, the product path: large models wrapped into polished applications, distributed as subscription services, and guarded by corporate platforms. This is the logic of convenience — useful for the end-user, profitable for the provider, but ultimately bounded.

On the other side lies the raw material path: large models treated not as apps, but as infrastructure inputs, like electricity or bandwidth. In this vision, the LLM is not the end but the beginning — fuel for an entire ecosystem of tools, protocols, and industries that grow on top of it.

It’s not surprising that the largest providers — OpenAI, Google, Anthropic — lean toward the product path. After all, packaging a model as a finished product aligns with their incentives: user lock-in, predictable revenue, regulatory defensibility. But history shows us that when something has the properties of a general-purpose technology — like electricity, like the internet — no company can fully contain it.

Why the Distinction Matters

This choice is not just a business model question. It determines the shape of the future AI industry.

If we stick to the product view, we remain in the “app logic” era — countless variations of assistants, copilots, dashboards. Useful, yes. But limited in transformative power. It’s the equivalent of early electricity being confined to private laboratories, dazzling but inaccessible.

If we embrace the raw material view, a different world opens. Raw material demands infrastructure: standards, protocols, governance, education, and law. Just as electricity required power grids, plugs, safety codes, and tariffs before it could become universal, LLMs will require an equivalent scaffolding of protocols to scale from toys to civilization-grade infrastructure.

And the opportunity this creates for the general public is immense. Once LLMs become raw material, anyone can build on them — just as anyone could start a factory once electricity became a utility, or launch a website once TCP/IP became universal. The creativity of millions, not the control of a few, is what makes a general-purpose technology truly transformative.

That is why I believe this trend is unstoppable. Even if large providers cling to the product model, the open-source ecosystem ensures that the raw material path is always available. Just as Linux became the quiet backbone of the internet, open models will power a protocol civilization whether or not the incumbents approve.

The Missing Protocols

Sounds nice, but let’s be clear: this is not going to be easy. It’s not just about spinning up more GPUs or publishing another benchmark paper. For LLMs to become true infrastructure, an entire social and technical layer of protocols needs to be built.

Right now, we are in a protocol vacuum. We have models, we have APIs, we even have dazzling demos. But we lack the shared, enforceable standards that let raw capability scale into civilization-grade infrastructure.

History offers sharp lessons. The internet was not built on flashy websites, but on the quiet universality of TCP/IP. Mobile communication only became global when GSM defined how devices could interoperate across borders. Electricity itself only scaled once alternating current won out as the grid standard and plugs, voltages, and safety codes were agreed upon. In each case, protocols, not products, were the hinge between experimentation and civilization.

The Work Ahead

That’s why the road from “LLM as raw material” to “LLM as infrastructure” is not a sprint. It is a generational project that spans law, education, and culture:

Legal frameworks: We need laws that define how AI outputs are owned, how liability is assigned, and how safety is enforced.

Education: Just as children learned “electrical literacy” in the 20th century, they will need “AI literacy” in the 21st.

Cultural adaptation: Societies must normalize the presence of AI, not as a curiosity or threat, but as a stable companion in work and governance.

Technology companies cannot do this alone. Even if OpenAI or Google wanted to, they do not have the legitimacy to define social norms, nor the authority to encode law, nor the reach to reshape education. This is not the work of one lab or one startup. It requires societal alignment, the slow forging of consensus across governments, industries, and communities.

And the scale of this work is daunting. It is not the equivalent of launching an app or raising a venture round. It is more like crossing a continent: long, exhausting, and littered with dead ends. But every civilization-defining technology — electricity, printing, the internet — has required exactly this kind of arduous collective effort.

Conclusion

Right now, I glance at my phone, and it holds a thousand apps. Do we really need to add yet another dozen AI apps to that pile? Or should we take the bolder path: embrace the true spirit of revolutionary science, break from the present, and use LLMs not as yet another product, but as the foundation for an entire protocol civilization?