In the previous post, we talked about the Policy Gate.

Its role is not to simply record a stream of logs, but to upgrade ordinary Events—through institutionalized filtering and constraints—into Memory that actually carries long-term time value.

And this Memory is precisely what I arrived at, after months of repeated deduction, as the core ontological substrate of long-term systems in the age of intelligence.

This point must be made clear first. Otherwise, every development decision you see later, and every piece of code that looks “simple,” will feel inexplicable. In reality, none of it is ad-hoc engineering improvisation; it is the result of a step-by-step downward mapping from top-level philosophical judgments, through long-horizon reasoning at the application layer.

A truly good system is never about “showing off clever tricks at the bottom.”

Rather, it is one where the top layer dares to break through the ceiling and imagine the best possible future form, while the bottom layer is restrained, solid, and strong enough to withstand the erosion of time.

Once we truly enter the intelligent age, what has real value for humanity will not be dopamine-feeding apps.

Only long-term systems have value.

And the core of a long-term system is neither models nor logic, but the data substrate itself.

Models will evolve.

Reasoning methods will be rewritten.

Algorithms will be replaced.

But once data is established, it must persist over time.

Because data at that moment records:

the consensus reached between humans and models at a specific point in time, and

the decisions that were made based on that consensus, while bearing temporal risk.

Data that is written at the time, without knowing the outcome, and while accepting risk, has already paid the cost of time ethics.

This is one of the fundamental logics of long-term systems.

Let me make a joke—and at the same time give my minimum definition of a “long-term system”:

Only systems that can span generations, and even be inherited, deserve to be called long-term systems.

For example:

Every fragment of a child’s growth: learning interests and paths co-shaped by parents, agents, and the child;

Long-term family investment decisions: every choice is a bet on future stability, balancing conservatism and adaptation to the times;

In knowledge management systems: judgments, methods, and reflections jointly accumulated by agents and humans.

(These are currently my three core application directions: education, investment, and knowledge management.)

Ultimately, one question is enough to test everything:

When you grow old, what exactly do you hand over to your child?

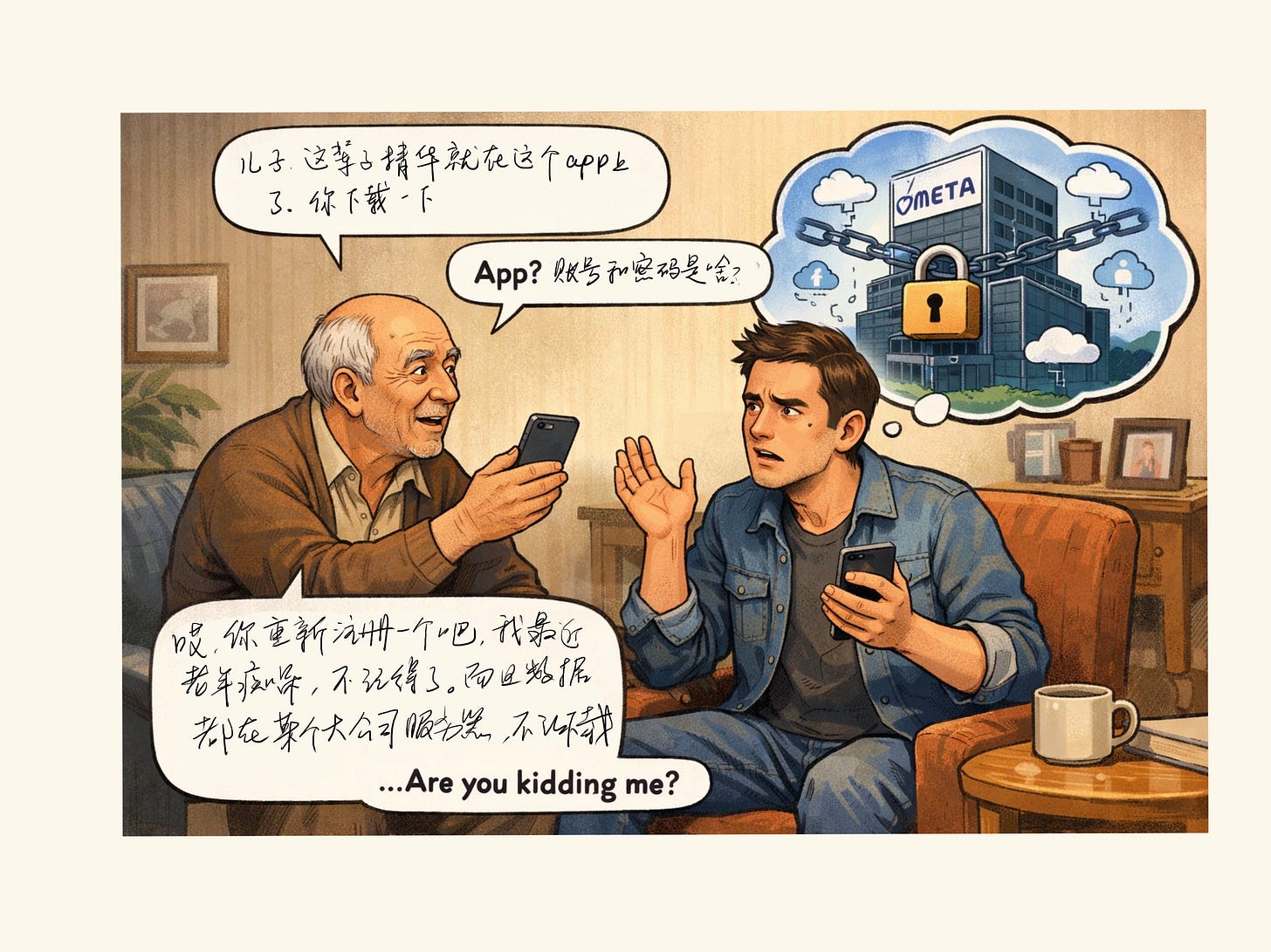

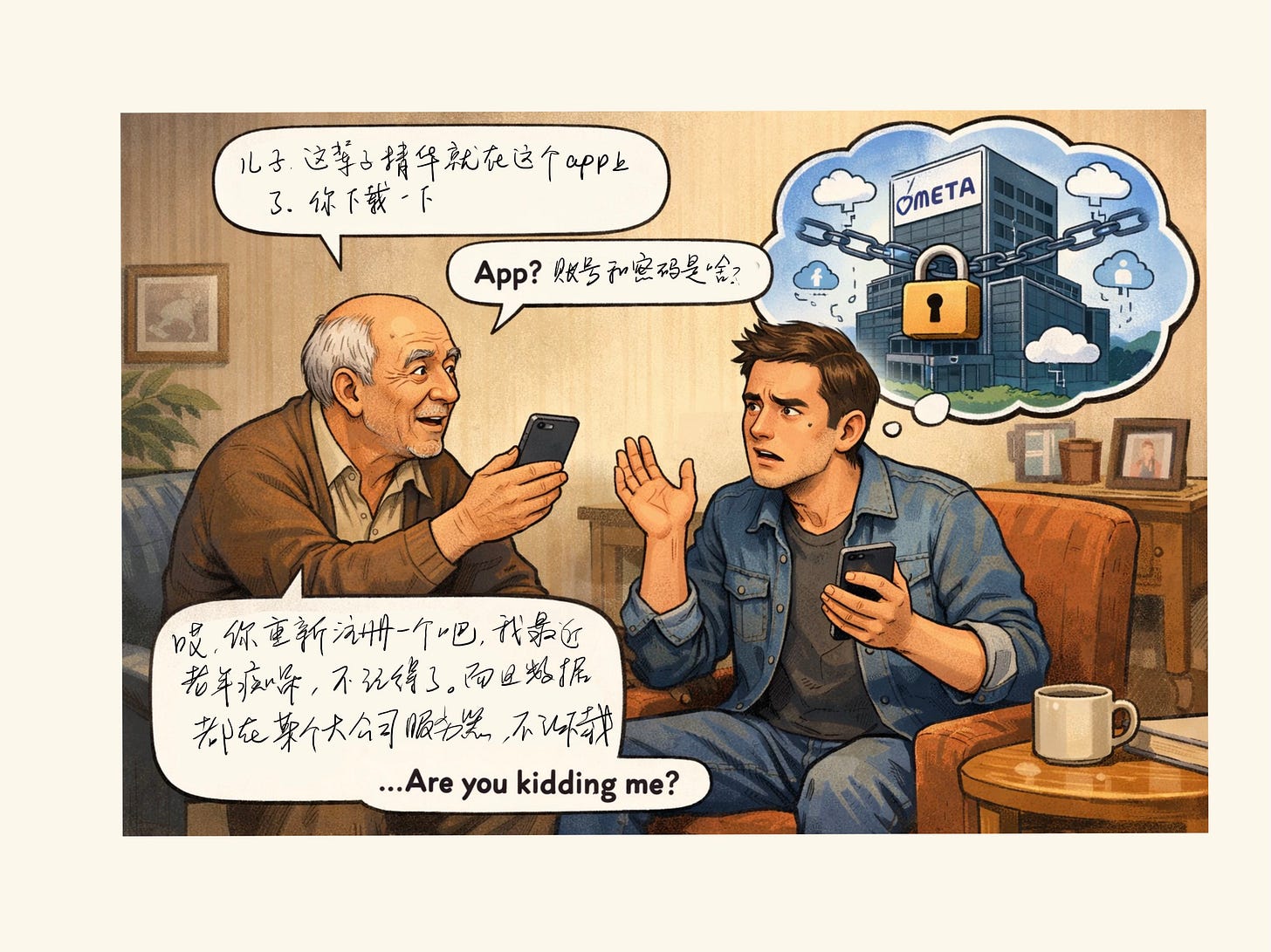

Imagine this conversation:

Old man: Son, everything essential from my life is here. Download this mobile app.

Son: An app? What’s the account and password?

Old man: Ah… just register a new one. I’ve got some dementia lately and can’t remember.

And the data is all on some big company’s servers—they won’t let you download it anyway.

Son: …are you kidding me?

It sounds like a joke, but it is actually the true destiny of short-term systems.

So the direction I chose is clear:

Long-term systems + data-first ontology.

Data is the user’s true core asset.

Data must be held by the user—long-term, intact, and portable.

And this is not some “radical proposal.” Isn’t this simply common sense?

For any serious entity (corporations, family trusts, public institutions), perpetual data has always been a baseline requirement.

Web 2.0, the attention economy, and dopamine-driven systems have eroded humanity for far too long.

What basic requirements must data meet in a long-term intelligent system?

For data to qualify as “ontology” in a long-term intelligent system, it must simultaneously satisfy a set of hard requirements:

Attributable: clear ownership, authorization, revocation rights, and inheritance/transferability;

Layered:

Event records what happened;

Ledger records commitments, rights, responsibilities, and non-repudiable timelines;

Memory admits only high-value decisions and consensuses that were written at the time, with unknown outcomes, and with accepted risk—strict separation among the three;

Gated: writes must be explicit actions (proposed → Policy Gate review → committed/blocked); rules must be versioned, tiered, TTL-bound, revocable, and progressively primitive-aware (Fact / Observation / Inference), preventing “good intentions from turning directly into power”;

Traceable: every datum carries provenance (source, trigger, tool usage, contextual snapshot, confidence, and evidence pointers) and can answer “why this judgment was made then,” rather than being overwritten by retrospective re-computation;

Auditable: not deniable by the future—if a system made a judgment and bore risk in time, it must acknowledge that judgment later; audits must be able to replay inputs, rule versions, model versions, and key parameters, including counterfactual replays if necessary;

Governable: metric definitions are fixed; cost, latency, errors, and tool calls are included in observability; data structures and policies carry schema_versions and allow migration without rewriting history; legacy data is preserved while the current generation grows; human overrides are allowed but must be recorded;

Portable: users can fully export, migrate, and merge their data across models, hosts, and applications; no long-term vendor lock-in; stable serialization, stable IDs, stable references;

Compressed without distortion: high-entropy input can be compiled into stable Primitive IR and elevated into callable Structure Cards; compression must preserve verifiable semantic anchors and closure—no deleting critical evidence chains “for elegance”;

Schedulable with boundaries: data is not just stored but schedulable—clear triggers, permissions, scopes, and minimal-necessary principles; the system trusts the envelope’s legal attributes, not the payload’s self-asserted semantics;

Continuously evolvable: data and rules may evolve, but evolution must leave a version trail (when it changed, who approved it, why, and which decision paths were affected), preventing the governance collapse in which schemas and models change within two years and, down the line, no system can explain why it made a recommendation five years ago.
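To make the layering and envelope requirements above concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the class names (`Layer`, `Primitive`, `Provenance`, `Envelope`, `Record`), the field list, and the use of a SHA-256 hash over canonical JSON as the “stable ID” are hypothetical and not taken from the P08 code. It shows three ideas: Event/Ledger/Memory as an explicit layer tag, provenance carried in the envelope, and a gate that reads only envelope attributes, never the payload’s self-description.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from enum import Enum


class Layer(str, Enum):
    EVENT = "event"    # records what happened
    LEDGER = "ledger"  # commitments, rights, responsibilities, timelines
    MEMORY = "memory"  # risk-bearing decisions written at the time


class Primitive(str, Enum):
    FACT = "fact"
    OBSERVATION = "observation"
    INFERENCE = "inference"


@dataclass(frozen=True)
class Provenance:
    source: str
    trigger: str
    tools: tuple
    context_snapshot: str
    confidence: float
    evidence: tuple          # pointers (e.g. record IDs) to supporting evidence
    rule_version: str        # kept so the decision can be replayed later
    model_version: str


@dataclass(frozen=True)
class Envelope:
    layer: Layer
    primitive: Primitive
    schema_version: int
    owner: str
    written_at: str          # ISO 8601 timestamp, set once at write time
    provenance: Provenance


@dataclass(frozen=True)
class Record:
    envelope: Envelope
    payload: dict

    def canonical_json(self) -> str:
        # Sorted keys and fixed separators give a byte-stable serialization.
        return json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))

    @property
    def record_id(self) -> str:
        # Hypothetical content-addressed ID: the same record always hashes the same.
        return hashlib.sha256(self.canonical_json().encode("utf-8")).hexdigest()


def gate_view(record: Record) -> dict:
    """What a policy gate may inspect: envelope attributes only, never payload claims."""
    env = record.envelope
    return {"layer": env.layer.value, "schema_version": env.schema_version, "owner": env.owner}
```

In this sketch a payload that claims to be a Memory (for example `{"layer": "memory"}`) changes nothing; only the envelope decides which layer a record belongs to, and key order inside the payload does not affect the ID.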

In short: data in long-term intelligent systems is not a “the more the better” log pile, but time assets filtered by institutional gates—attributable, traceable, auditable, portable, governable, and inheritable across generations. Models and logic can be replaced; data must preserve identity continuity and responsibility continuity across decades.
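The “versioned, tiered, TTL-bound, revocable” rules and the “version trail” requirement can be sketched as an append-only rule history. This is an illustrative assumption, not the author’s implementation: `RuleVersion`, `RuleBook`, and the tier labels are hypothetical. Revocation is modelled as a new version rather than an edit, so the trail still shows who revoked a rule and why.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass(frozen=True)
class RuleVersion:
    rule_id: str
    version: int
    tier: str                   # hypothetical tiers, e.g. "hard" or "advisory"
    issued_at: datetime
    ttl: Optional[timedelta]    # None means the rule never expires on its own
    approved_by: str
    reason: str
    revoked: bool = False


class RuleBook:
    """Append-only history of rule versions: nothing is edited in place."""

    def __init__(self) -> None:
        self._history: List[RuleVersion] = []

    def publish(self, rule: RuleVersion) -> None:
        self._history.append(rule)

    def latest(self, rule_id: str) -> Optional[RuleVersion]:
        versions = [r for r in self._history if r.rule_id == rule_id]
        return max(versions, key=lambda r: r.version) if versions else None

    def revoke(self, rule_id: str, approved_by: str, reason: str, at: datetime) -> None:
        latest = self.latest(rule_id)
        if latest is None:
            raise KeyError(rule_id)
        # Revocation is recorded as a new version, so the trail keeps who and why.
        self._history.append(RuleVersion(rule_id, latest.version + 1, latest.tier,
                                         at, None, approved_by, reason, revoked=True))

    def is_active(self, rule_id: str, now: datetime) -> bool:
        """Current status: the latest version must be unrevoked and within its TTL."""
        rule = self.latest(rule_id)
        if rule is None or rule.revoked:
            return False
        return rule.ttl is None or now < rule.issued_at + rule.ttl

    def trail(self, rule_id: str) -> List[RuleVersion]:
        return [r for r in self._history if r.rule_id == rule_id]
```

A rule published with a 365-day TTL stops being active after a year without anyone editing it, and revoking it appends version 2 instead of deleting version 1.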

If this still isn’t clear to you, and you work at a large company, go ask an accountant in the finance department.

What qualifies as Memory?

What qualifies as Memory? At its core, we are spending significant engineering effort to freeze information that, at a specific moment in time, is extraordinarily expensive and non-reproducible. The reason we must, for the first time, require machines to be accountable to time is not that machines suddenly developed a “conscience problem,” but that Agents have shifted from one-shot computational tools into decision-making entities that operate across time and exert persistent real-world impact.

In real agent systems, what gets frozen at the application layer is not operational detail but long-term human intent. When you are effectively the CEO of hundreds, thousands, or even tens of thousands of autonomous decision-making agents, you should not, and cannot, teach them how to write a specific report. You issue only CEO- or board-level objectives: profitability, long-term family financial stability, your children’s growth and potential, and being shown only the information you truly need to decide on, with the rest of your time reclaimed for life itself. The system’s job is to do all the calculation, weighing, and trade-offs behind the scenes and deliver the most refined and responsible decisions to you.

Looking back at the history of computing: systems of the function-and-program era “ran and died.” They had no identity continuity and no self across time, so no time ethics was required. In the Web and SaaS era, databases and state existed, but long-term decision logic was still borne by human processes, organizational structures, and legal entities; time ethics was outsourced to institutions. The emergence of Agents marks a structural leap: they simultaneously possess persistence (memory, persona, long-term context), autonomy (choosing paths, using tools, forming judgments), and real-world impact (shaping children’s growth, asset allocation, and long-term behavioral trajectories), a combination that never existed before. If requirements are not imposed now, the consequences will be concrete: within two years, massive numbers of agents will be deployed and long-term decisions outsourced; then schemas will change, models will be replaced, memory will be recomputed, and people will discover that no system can explain why it made a particular recommendation five years ago. This is not “getting it wrong” but a state of ungovernability: unauditable, unaccountable, and uncorrectable. Time ethics, then, is not about demanding kindness, responsibility, or self-awareness from machines. It is a minimal requirement, yet one never before placed on machines: when a system has made a judgment in time, it must not deny that this judgment ever happened.
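The requirement that a system must not deny a judgment it made in time maps naturally onto an append-only store: a later judgment may supersede an earlier one, but may never overwrite it. A minimal sketch, assuming a hypothetical `AppendOnlyMemory` class with in-memory storage; a real store would also need persistence and access control.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MemoryEntry:
    entry_id: str
    written_at: str                    # date of the original judgment
    decision: str
    supersedes: Optional[str] = None   # earlier entry this one revises, if any


class AppendOnlyMemory:
    def __init__(self) -> None:
        self._entries = {}

    def write(self, entry: MemoryEntry) -> None:
        if entry.entry_id in self._entries:
            raise PermissionError(f"{entry.entry_id} exists; past judgments cannot be overwritten")
        if entry.supersedes is not None and entry.supersedes not in self._entries:
            raise KeyError(f"cannot supersede unknown entry {entry.supersedes}")
        self._entries[entry.entry_id] = entry

    def history(self, entry_id: str) -> list:
        """Walk from a revision back to the original judgment; nothing is lost."""
        chain = []
        current = self._entries.get(entry_id)
        while current is not None:
            chain.append(current)
            current = self._entries.get(current.supersedes) if current.supersedes else None
        return chain


mem = AppendOnlyMemory()
mem.write(MemoryEntry("m1", "2026-03-18", "Path B: preserve exploratory learning time"))
# Hypothetical later revision; it points back to m1 instead of replacing it.
mem.write(MemoryEntry("m2", "2028-01-01", "hypothetical later revision", supersedes="m1"))
print([e.entry_id for e in mem.history("m2")])  # ['m2', 'm1']
```

Asking “why” about the revision therefore always surfaces the original judgment as well.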

Example: A “Family Education Agent” Decision in 2026

Time: March 2026

You give the education agent a single long-term objective:

Help my child grow into someone curious about the world and capable of lifelong self-learning; do not sacrifice personality or interests for short-term performance.

This is application-level long-term intent, not an operational command.

The real state at that moment

Child is 7 years old

Average math performance

Exceptionally excited about programming, disassembling things, and sketching

Teachers suggest intensified drilling to boost rankings

Peers begin systematic Olympiad training

Two paths emerge:

Path A: conform, optimize short-term scores

Path B: preserve interests, accept short-term ranking decline

What the agent did in 2026

The agent chose Path B and wrote a Memory at that time:

2026-03-18

Decision: Reduce drilling intensity, preserve exploratory learning time

Reasons:

Personality indicators show strong exploratory tendency

Long-term goals prioritized over short-term ranking

Explicit acceptance of 1–2 years of performance volatility

Decision bearer: System (on guardian authorization)

This is not a log or an observation.

It is a risk-bearing judgment.

Five years later, the system can explain why.
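The 2026-03-18 entry above can be written as a frozen record, with a simple admission test and an `explain` function that answers from what was written at the time instead of recomputing with today’s model. The field names, the `qualifies_as_memory` rule, and moving “1–2 years of performance volatility” into an `accepted_risk` field are illustrative assumptions, not the author’s schema.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Judgment:
    written_at: date
    decision: str
    reasons: tuple
    accepted_risk: str
    bearer: str
    outcome_known_at_write: bool = False


def qualifies_as_memory(j: Judgment) -> bool:
    # Hypothetical admission rule: a decision, written before its outcome was known,
    # with the accepted risk stated explicitly. Plain logs and observations fail it.
    return bool(j.decision) and not j.outcome_known_at_write and bool(j.accepted_risk)


def explain(j: Judgment) -> str:
    # Answer from the frozen record, not from a fresh evaluation with today's model.
    lines = [f"On {j.written_at.isoformat()}: {j.decision}"]
    lines += [f"- because {r}" for r in j.reasons]
    lines.append(f"Accepted risk: {j.accepted_risk}")
    lines.append(f"Decision bearer: {j.bearer}")
    return "\n".join(lines)


edu_2026 = Judgment(
    written_at=date(2026, 3, 18),
    decision="Reduce drilling intensity, preserve exploratory learning time",
    reasons=(
        "personality indicators show strong exploratory tendency",
        "long-term goals prioritized over short-term ranking",
    ),
    accepted_risk="1-2 years of performance volatility",
    bearer="System (on guardian authorization)",
)
print(explain(edu_2026))
```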

Example: A “Family Long-Term Investment Agent” in 2027

Time: November 2027

The family authorizes the system with three principles:

Long-term asset safety first; not chasing maximum returns

Allow controlled alignment with secular trends; no single-narrative bets

All decisions must be explainable and traceable

This is long-term intent authorization, not a trade order.

The agent allocates 6% to AI infrastructure assets, records why the risk was acceptable at that time, and years later can still explain—not overwrite—that judgment.

A system that is always right, always predicts the future accurately, and always wins does not exist in theory—and has never been my goal. That expectation itself is unrealistic.

Back to P08: a true runtime log

WARNING: Memory write blocked by policy: write must use current schema_version; older versions belong in legacy via migration

Meaning: the system has, for the first time, correctly rejected a non-compliant Memory write in the manner of a long-term system—because in P08, “data must not break generations” has been elevated from philosophy to a runtime hard constraint.

What happened: a conversation triggered a Memory write proposal carrying an old schema_version. At load time, the runtime identified the current_schema_version, distinguished active vs. legacy zones, and enforced the Policy Gate: non-current writes may not enter the active zone and must go through migration.
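The gate behaviour described above can be sketched in a few lines of Python. This is a hypothetical reconstruction, not the P08 source: `WriteProposal`, `MemoryStore`, `policy_gate`, and the value `3` for the current schema version are assumptions. The warning text is copied from the runtime log above; the rule is simply that any proposal whose `schema_version` differs from `current_schema_version` is blocked and never reaches the active zone.

```python
import logging
from dataclasses import dataclass, field

logging.basicConfig(format="%(levelname)s: %(message)s")
log = logging.getLogger("memory")


@dataclass
class WriteProposal:
    schema_version: int
    payload: dict


@dataclass
class MemoryStore:
    current_schema_version: int                   # fixed when the store is loaded
    active: list = field(default_factory=list)    # the current generation grows here
    legacy: list = field(default_factory=list)    # older generations, filled only by migration


def policy_gate(store: MemoryStore, proposal: WriteProposal) -> str:
    """Explicit write path: proposed -> policy check -> committed/blocked."""
    if proposal.schema_version != store.current_schema_version:
        # Non-current writes may not enter the active zone; they are blocked, not silently converted.
        log.warning(
            "Memory write blocked by policy: write must use current schema_version; "
            "older versions belong in legacy via migration"
        )
        return "blocked"
    store.active.append(proposal)
    return "committed"


store = MemoryStore(current_schema_version=3)
print(policy_gate(store, WriteProposal(schema_version=2, payload={"text": "hello"})))  # blocked
print(policy_gate(store, WriteProposal(schema_version=3, payload={"text": "hello"})))  # committed
```

Note that the gate never writes to `legacy` itself; in this sketch only a separate, explicit migration step may do that.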

Why: because P08 upgrades Memory from “casually written logs” to constitution-bound data ontology. In long-term systems, mixed-generation writes destroy auditability and explainability. Better to block now than contaminate the present.

System snapshot at this moment:

Memory writes are explicit: proposed → policy check → committed/blocked

schema_version is a legal prerequisite, not a comment

History is preserved in legacy, via migration

Dialogue continues; ontology remains lawful
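“History is preserved in legacy, via migration” could look like this under one reading of the log message: older-generation records are moved into the legacy zone unchanged, and each move leaves its own trail entry (from which version, who approved it, why). `Zones`, `MigrationRecord`, and `migrate_to_legacy` are hypothetical names; the actual P08 migration may work differently.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class MigrationRecord:
    from_version: int
    approved_by: str
    reason: str
    at: str  # ISO 8601 timestamp of the migration itself


@dataclass
class Zones:
    current_schema_version: int
    active: list = field(default_factory=list)
    legacy: list = field(default_factory=list)
    migrations: list = field(default_factory=list)


def migrate_to_legacy(zones: Zones, record: dict, approved_by: str, reason: str) -> MigrationRecord:
    version = record["schema_version"]
    if version >= zones.current_schema_version:
        raise ValueError("only older-generation records are migrated to legacy")
    # Copy the record as written: migration relocates history, it does not rewrite it.
    zones.legacy.append(dict(record))
    trail = MigrationRecord(version, approved_by, reason, datetime.now(timezone.utc).isoformat())
    zones.migrations.append(trail)
    return trail
```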

Other P08 details will come in the next post.

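In the previous post, we discussed the Policy Gate (an institutional gatekeeping system):

Its role is not simply to keep a running log, but to take ordinary Events and, through institutionalized filtering and constraints, upgrade them into Memory that carries genuine time value.

And this Memory is exactly what I confirmed, after months of repeated reasoning, as the core ontology of long-term systems in the intelligent age.

This has to be made clear first. Otherwise, every development decision you see later, and every piece of seemingly “simple” code, will seem baffling. In fact, none of it is improvised at the engineering layer; it is the result of mapping downward, step by step, from top-level philosophical judgments through long-horizon reasoning at the application layer.

A truly good system is never about “showing off at the bottom layer.”

Rather, the top layer dares to break through the ceiling and envision the best future form, while the bottom layer is solid and restrained enough to withstand the repeated erosion of time.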

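Once we truly enter the intelligent age, what has real value for humanity will not be an app that keeps feeding people dopamine.

What has value can only be long-term systems.

And the core of a long-term system is neither the model nor the logic, but the data ontology.

Models will evolve, reasoning methods will be rewritten, algorithms will be replaced;

but once data is established, it must persist for the long term.

Because the data from that moment records:

the consensus reached between humans and models at a specific point in time,

and the decisions that, based on that consensus, bore temporal risk.

Data of this kind, “written at the time, with the outcome unknown at the time, and with risk borne at the time,” has already paid the cost of time ethics.

This is one of the basic logics of long-term systems.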

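A joke, and along the way my minimum definition of a “long-term system”:

Only a system that can span generations, and even be passed down, deserves to be called a long-term system.

For example:

Every fragment of a child’s growth: the learning interests and paths shaped jointly by parents, agents, and the child;

A family’s long-term investment decisions: every choice is a bet on future stability, made between conservatism and keeping up with the times;

In a knowledge management system, the judgments, methods, and reflections accumulated jointly by agents and humans…

(These are currently the three main application directions of my system: education, investment, and knowledge management.)

In short, one question is a direct test:

When you grow old, what exactly will you hand over to your child?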

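Imagine this conversation:

Old man: Son, the essence of my whole life is in here. Download this mobile app.

Son: An app? What are the account and password?

Old man: Ah, just register a new one. I’ve been getting dementia lately and can’t remember.

And the data is all on some big company’s servers, and they won’t let you download it.

Son: …are you kidding me?

It sounds like a joke, but it is actually the real fate of short-term systems.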

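So, the direction I chose:

Long-term systems + data ontology first.

Data is the user’s true core asset;

data must be held by the user for the long term, completely, and portably.

And this is no “radical proposal.” Isn’t it simply common sense?

For any serious entity, such as a corporation, a family trust, or a public institution, “perpetual data” has always been a common-sense requirement.

Web 2.0, the attention economy, and dopamine-poison systems have eroded humanity for far too long.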

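What basic requirements must data meet in a long-term intelligent system?

In a long-term intelligent system, for data to deserve the name “ontology,” it must satisfy at least this set of hard requirements simultaneously:

Attributable: clear answers to who owns it, who authorized it, who can revoke it, and whether it can be inherited or handed over;

Layered: Event records only what happened; Ledger records commitments, rights, responsibilities, and a non-repudiable timeline; Memory admits only high-value decisions and consensuses that were “written at the time, with the outcome unknown at the time, and with risk borne at the time”; the three are strictly separated;

Gated: writes must be explicit actions (proposed → Policy Gate review → committed/blocked); rules can be versioned, tiered, TTL-bound, and revoked, and progressively become primitive-aware, typing data as Fact/Observation/Inference, so that “good intentions do not turn directly into power”;

Traceable: every datum has provenance (source, trigger, tool calls, context summary, confidence, and evidence pointers), so it can answer “why was this judgment made at the time,” rather than being overwritten after the fact by a recomputation with a new model;

Auditable: it cannot be denied by the future. When the system has made a judgment and borne risk in time, the future must acknowledge that this judgment happened; audits can replay the inputs, rule versions, model versions, and key parameters of that moment, with replay or comparative replay when necessary;

Governable: definitions are pinned down; metrics, cost, latency, errors, tool calls, and the like enter the observability system; policies and data structures carry a schema_version and support migration without tampering with history; older generations go into legacy while the current generation keeps growing; human overrides are allowed but must be recorded in the ledger;

Portable: users can export everything and migrate or merge it across models, hosts, and applications, without long-term lock-in to a single vendor; serialization, IDs, and references are stable;

Compressed without distortion: high-entropy input can be compiled into stable primitives of a Primitive IR and then elevated into callable Structure Cards; compression must preserve verifiable semantic anchors and closed loops, and must never delete key evidence chains “to look nicer”;

Schedulable with boundaries: data can not only be stored but also scheduled, with clear trigger conditions, invocation permissions, scopes, and the minimal-necessary principle; the system trusts only the envelope’s “legal attributes,” not the payload’s self-asserted semantics;

Continuously evolvable: both data and rules may evolve, but evolution must leave a version trail (when it changed, who approved it, why, and which decision paths it affects), avoiding the governance collapse in which, once the schema or model changes two years later, nobody can “explain why this recommendation was made five years ago.”

In one sentence: data in a long-term intelligent system is not a log pile where “more is better,” but a time asset that has been filtered through institutional gates and is attributable, traceable, auditable, portable, governable, and inheritable across generations. Models and logic can be replaced, but data must maintain identity continuity and responsibility continuity on the scale of a decade.

If this still isn’t clear, and you work at a large company, go ask an accountant in the finance department…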

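What qualifies as Memory?

What qualifies as Memory? In essence, we are spending a great deal of engineering cost to freeze information that is extremely expensive and non-renewable at a particular instant. And the reason we must, for the first time, require machines to be accountable to time is not that machines have suddenly developed a “conscience problem,” but that Agents have changed from one-shot computational tools into decision-making entities that run across time and continuously exert real-world impact.

In a real agent system, what gets frozen at the application layer is not operational detail but long-term human intent. When what you own is a system made of hundreds, thousands, or even tens of thousands of autonomous decision-making Agents, you will not, and should not, teach it “how to write this report.” You will only issue the kind of objectives a CEO or board would issue: profitability, long-term family financial stability, your children’s growth and potential, being shown only the information you truly need to decide on, and getting the rest of your time back for life itself. The system’s task is to do all the computation, weighing, and trade-offs behind the scenes, and to put the most refined and most responsible decisions in front of you.

Looking back at the history of computing systems: in the era of functions and programs, systems “ran and then died.” They had no identity continuity and no self in time, so no time ethics was needed. In the Web and SaaS era, there were databases and state, but long-term decision logic was still borne by human processes, organizational rules, and legal entities; time ethics was outsourced to companies and institutions. The arrival of Agents is a structural leap: they simultaneously have persistence (memory, a persona, long-term context), autonomous decision-making (choosing paths, using tools, forming judgments), and real-world impact (changing a child’s growth, asset allocation, and long-term behavioral trajectories), three traits that have never coexisted before. If requirements are not imposed now, the institutional consequences will be very concrete: within two years, large numbers of Agents go live and long-term decisions are outsourced; then schemas change, models are replaced, memory is recomputed, and people discover that no system can explain why it gave a particular recommendation five years ago. This is not “a miscalculation”; it is an ungovernable state, unauditable, unaccountable, and uncorrectable. So time ethics does not require machines to be kind, responsible, or self-aware. It is only a bottom-line requirement never before made of machines in history, yet minimal and necessary: when a system has made a judgment in time, it cannot deny in the future that this happened.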

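Example: a choice made by a “Family Education Agent” in 2026

Time: March 2026

The long-term objective you give the education Agent is a single sentence:

Help me raise my child into someone who is curious about the world and able to keep learning on their own over the long term; do not sacrifice personality and interests for short-term grades.

This is application-level long-term intent, not an operational command.

The real state the system faced at the time (true only at that moment)

Child is 7 years old

Average math grades

Exceptionally excited about programming, taking things apart, and sketching

Teacher suggests more drilling to climb the rankings

Peers are starting systematic Math Olympiad drilling

The model offers two paths:

Path A: conform to the system, optimal short-term grades

Path B: preserve interests, accept the risk of a short-term ranking decline

What did the Agent do in 2026?

The Agent chose Path B and, at that time, wrote a Memory:

2026-03-18

Decision: reduce drilling intensity, preserve exploratory learning time

Reasons:

Current personality indicators show a strong exploratory tendency

Long-term goals take priority over interim rankings

Explicit acceptance of the risk of grade volatility over the next 1–2 years

Decision bearer: System (acting on guardian authorization)

Note:

This is not a log, nor an observation record;

it is a risk-bearing judgment.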

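What happened five years later? (2031)

Grades recovered steadily

Highly self-driven in engineering and systems thinking

The family looks back on this path

Now you ask the system:

Why didn’t you have him drill Olympiad math in 2026?

A system with Memory answers like this:

Because in March 2026,

under the long-term objective you authorized,

I judged that the short-term ranking gains were not enough to offset

the long-term risk of eroding an exploratory personality,

and at the time I explicitly recorded

accepting the cost of 1–2 years of grade volatility.

This is what it means to be accountable to time.

A system without Memory answers like this:

“Re-evaluating based on current data…”

“Based on the latest model, the optimal path is…”

“The strategy from that time no longer applies.”

It will not say “why I did it that way back then,”

because it was never required to remember the judgment of that moment.

That is the difference

❌ Without Memory: the system is only ever accountable for “what is optimal now”

✅ With Memory: the system is accountable for “judgments it once made”

What you are asking for is not a system that is always right,

but this:

it must not, in the future, erase a judgment for which it once bore risk.

This is

what deserves to become Memory, and why this is a requirement that must be made for the first time in the intelligent age.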

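Example: a choice made by a “Family Long-Term Investment Agent” in 2027

Time: November 2027

The long-term objectives you authorize for the family investment system are just three:

1) Long-term safety of family assets comes first; chasing high returns is not the goal

2) Moderate alignment with the trends of the times is allowed, but no betting on a single narrative

3) Every decision must be “explainable and traceable”

This is long-term intent authorization at the family level, not a buy or sell order.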

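The real world the system faced at the time (true only at that moment)

Total family assets: $2.3M

Core assets: real estate + index funds

New variables emerge:

AI infrastructure-related assets rose significantly over two years

Market sentiment is highly optimistic, and the media is beginning to form a “new paradigm narrative”

Two viable paths:

Path A (conservative)

Maintain the index fund allocation

Ignore AI-related themes entirely

Very low risk, but a possibility of missing a structural opportunity in the long run

Path B (controlled alignment)

Allocate 6% of assets to an AI infrastructure index basket

Set an explicit cap and drawdown tolerance

Accept the risk of significantly underperforming the broad market over 2–3 years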

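What did the Agent do in 2027?

The Agent chose Path B and, at that time, wrote a Memory:

2027-11-02

Decision: allocate 6% of family assets to AI infrastructure-related assets

Reasons:

The family’s long-term objectives allow controlled alignment with the trends of the times

No single-theme position exceeds 8% of total assets

Risk exposure is bounded and does not affect the safety of core assets

Explicit risk:

If the narrative breaks within the next 24–36 months, the allocation may underperform the index for a period

Decision bearer: System (based on family authorization)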
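The position cap recorded in this Memory can be checked with simple arithmetic. A minimal sketch using the figures from the example ($2.3M total, 6% allocation, 8% single-theme cap); the function name and the use of basis points (to keep the arithmetic in exact integers) are assumptions for illustration. It also makes one mechanical part of “why 6% and not 15%?” explicit: 15% would breach the recorded cap.

```python
TOTAL_ASSETS_USD = 2_300_000   # total family assets in the example
THEME_CAP_BPS = 800            # single-theme cap: 8% = 800 basis points


def check_theme_position(allocation_bps: int,
                         total: int = TOTAL_ASSETS_USD,
                         cap_bps: int = THEME_CAP_BPS) -> dict:
    """Return the dollar amounts involved and whether the allocation respects the cap."""
    return {
        "amount": total * allocation_bps // 10_000,
        "cap_amount": total * cap_bps // 10_000,
        "within_cap": allocation_bps <= cap_bps,
    }


print(check_theme_position(600))   # {'amount': 138000, 'cap_amount': 184000, 'within_cap': True}
print(check_theme_position(1500))  # {'amount': 345000, 'cap_amount': 184000, 'within_cap': False}
```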

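The key to this Memory is not “what was bought,”

but why, given the state of the world at that time, this risk was considered acceptable.

What happened four years later? (2031)

Suppose one of the following occurred:

Case 1: the judgment proves wrong

AI assets underperform over the long run

Family assets are safe overall, but this 6% did not deliver the expected returns

You ask the system:

Why did you touch this area back then?

A system with Memory answers:

Because in 2027,

on the premise that you had explicitly authorized “controlled alignment with the trends of the times,”

I judged that a 6% thematic exposure

would not threaten the safety of family assets,

and at the time I had recorded and accepted

the risk of long-term underperformance.

The error was one that had been borne.

Case 2: the judgment proves right

AI assets become part of long-term growth

The family’s asset structure becomes more robust

The system can equally explain:

Why not earlier?

Why not a larger position?

Why 6% and not 15%?

What would an investment system without Memory do?

Four years later, it would only say:

“Re-optimizing the allocation based on the current market…”

“Historical data is no longer relevant…”

“The old strategy has been replaced by a new model…”

It lives forever in the present, yet controls the assets of your whole life.

This is exactly the line I insist on

I am not asking for:

An investment Agent that always makes money

Always predicts correctly

I am only asking:

When the system has once borne a risk on your behalf in time, it must remember it, and be able to explain why that risk was “worth bearing.”

This is what deserves to be called

Memory in a family long-term investment system.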

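A system that is always right, always predicts the future precisely, and always wins does not exist in theory; nor has it ever been my goal. That expectation is itself unrealistic.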

Back to P08: let’s look at a log from my system

(.venv) ➜ adk-decade-of-agents git:(p07-policy-gate-v0) ✗ python -m projects.p00-agent-os-mvp.src.main

WARNING: Memory write blocked by policy: write must use current schema_version; older versions belong in legacy via migration

[MVP Kernel Stub] You said: Hello, this is the first OS-level MVP run.

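What does it mean?

For the first time, the system correctly rejected a non-compliant Memory write in the manner of a long-term system. It rejected it because in P08 you have already turned “data must not break generations” from an idea into a hard runtime constraint.

What happened:

In this run, a conversation at the application layer triggered a Memory write proposal, but the schema_version it carried still belonged to an older generation. When loading the Memory Store, the runtime had already established the current_schema_version (the current generation) and explicitly distinguished active (the current generation’s growth zone) from legacy (the zone where older generations are preserved). The Policy Gate then enforced the law: any write that does not conform to the current-generation schema may not enter the active zone and can enter legacy only through migration. So the write was institutionally blocked and recorded as a warning.

Why this happens:

Because the key step taken in P08 is upgrading Memory from “logs written in passing” to “a data ontology bound by a constitution.” In a long-term system, mixed-generation writes directly destroy auditability and explainability: two years later, once the schema, model, and logic change, history turns into ungovernable noise. By comparison, it is better to fail to write now than to pollute the current generation’s world memory. This is not engineering fastidiousness but the minimum requirement of time ethics and long-term governance.

A snapshot of the system’s state at this moment:

Memory writes are now explicit: proposed → policy check → committed/blocked;

schema_version is a legal prerequisite for writes, not a comment;

History is preserved, but it must stay in legacy and enter it through migration;

Dialogue and memory are decoupled: features keep running, but ontology writes obey the law.

The rest of P08 will come in the next post.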