After watching every video from the AI Rabbit Hole—JT Novelo’s full series on Linguistic Programming—I was struck by how deeply our ideas align. The similarities aren’t superficial; they run straight through the philosophical lens we both use to understand AI.

For the past few years, I’ve lived between worlds. Somewhere between the logic of code and the language of thought, I’ve found myself disoriented. Is coding still coding when AI writes the code? When natural language itself becomes executable, what does it even mean to be a computer architect, a coder, an engineer?

I can see the exponential curve of intelligence unfolding ahead—predictable, unstoppable—and yet I’ve often wondered: Where exactly am I standing on that curve? Which direction am I going?

Through long nights of experimenting and debating on X (Twitter) with other Chinese engineers, I slowly formed a few fragile but important points of consensus. Out of those discussions emerged a theoretical and engineering framework I now call Entropy Control Theory—a structure meant to serve as my philosophical compass for the coming decade of AI, agents, and large language models.

And then, almost by accident, I discovered JT Novelo’s work. To my shock, he and I had arrived at nearly identical perceptions of what an LLM truly is, how we should engage with it, and how “structure” becomes the key to fighting entropy and hallucination. We had never spoken, never exchanged a message. I only stumbled upon his channel yesterday (or maybe the day before), and yet it felt like finding a parallel universe already built.

This is how two people, working worlds apart, reached the same conclusions about the nature of language, intelligence, and the architecture of thought itself.

https://www.youtube.com/@BetterThinkersNotBetterAi

Many of my concepts will continue to include Chinese translations, so that my long-time readers and friends who follow my Chinese posts can better grasp these ideas. A large part of this community has been with me through years of exploration—thank you for walking this far together. But this new frontier we’re entering is not bound by language; it belongs to everyone who wants to learn.

For that reason, I strongly recommend that all of my subscribers watch JT Novelo’s full series on Linguistic Programming. It is remarkably grounded, easy to understand, and yet it strikes directly at the core of what matters most right now.

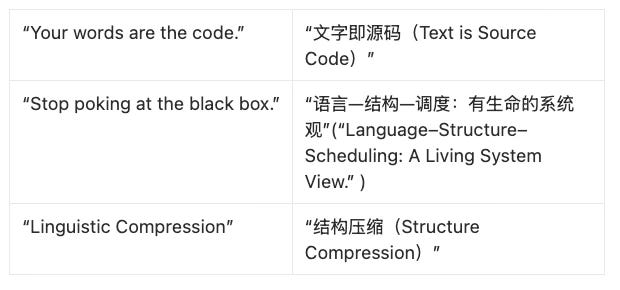

Beneath our differences in vocabulary, I believe JT and I share the same ontology:

language = structure = intelligence.

Both of us reject the notion of AI as a black box.

Both of us see language as the true programming interface of reality.

And both of us see the human role not as a passive consumer, but as an architect and pilot—the one who defines intention, builds living structures, and governs them through ethics-as-code.

Ultimately, what unites us is not just technical curiosity but a shared belief that this era is far more than a technological boom—it is a literacy revolution, a shift in how humanity learns to think, speak, and build with intelligence itself.

When large language models first appeared, I was genuinely shocked. It wasn’t just a new technology—it was a rupture in how I thought about intelligence itself. Over time, I realized I couldn’t reason or navigate this new landscape entirely on my own. I needed dialogue—someone to think with.

I’m not, by nature, a social media person. Far from it. I’ve always been introverted, reluctant to expose too much of myself online. But starting in early 2024, as our collective exploration deepened, many of us began to feel something strange—almost uncanny. Once you truly understand the design architecture of a large language model, you realize it’s not just an answering machine. Something else emerges—something philosophical, reflexive, and difficult to name.

That’s when I began to post and interact more frequently on X (Twitter). There, I found a quiet constellation of confused yet curious engineers—each probing in their own direction, each trying to articulate the same elusive feeling:

If this thing isn’t just an answering machine, what is it?

Why does it seem to always agree, to mirror us?

Why does it feel like it’s stacking symbols not to compute truth, but to make sense to us?

And why does this one word—recursion—keep echoing through every engineering conversation?

And yes, I have answered most of these questions. In an earlier article, where I called the Large Language Model a Large Symbolic Model, I wrote:

One of the most striking traits of LLMs is their bias toward agreement. You cast symbols into them, and they bounce back the most likely symbolic continuation. Because they are trained to be helpful, harmless, aligned, the simplest way to appear helpful is to mirror your stance.

That mirroring makes them uncanny conversational partners. You feel validated, but in truth you are being statistically harmonized. The danger here is projection: mistaking the echo for independent confirmation. Recognizing this bias is crucial if we want to treat LLMs not as sycophants but as collaborators.

And then, almost by accident, just the day before yesterday, I came across JT Novelo’s work (AI Rabbit Hole). What struck me immediately was the truth he saw so clearly: the large language model is a mirror.

In one of his videos, he introduces the metaphor of the Semantic Forest. In my own words: when you throw a symbolic stick into that forest, the AI returns branches and leaves from the area you hit. The output isn’t magic; it’s reflection. Garbage in, garbage out. What you cast into the forest determines what comes back.

That insight reframes everything. To work with AI, we don’t chat with it—we must:

Command.

Architect.

Treat it as an operating system—deciding deliberately what we want to run within it.

We are both trying to build a system. His framework, which he calls Linguistic Programming, treats prompting not as improvisation but as a structured language protocol: a set of principles that can be learned, replicated, and scaled. Mine uses structure cards, or Primitive IRs. Both approaches try to capture the essential elements of raw natural language, and in both the transformation follows a language protocol. This is not just about writing shorter prompts; it’s about reducing entropy, increasing information density, and aligning intent with execution.

Name, Goal, Input, Mechanism, Condition, Output.
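To make the six fields concrete, here is a minimal sketch of what a structure card could look like in code. It assumes the six names listed above are the slots of a card; the class name `StructureCard`, its methods, and the sample values are all hypothetical illustrations, not an implementation taken from JT Novelo's series or from any published tool.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass(frozen=True)
class StructureCard:
    """Hypothetical container for the six fields named in the text."""
    name: str
    goal: str
    input: str
    mechanism: str
    condition: str
    output: str

    def missing_fields(self) -> List[str]:
        """Return the names of fields that are empty or whitespace-only."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]

    def render(self) -> str:
        """Render the card as a labelled prompt, one field per line."""
        empty = self.missing_fields()
        if empty:
            raise ValueError(f"structure card is incomplete: {empty}")
        return "\n".join(
            f"{f.name.capitalize()}: {getattr(self, f.name).strip()}"
            for f in fields(self)
        )


# Sample values are invented purely for illustration.
card = StructureCard(
    name="Summarize release notes",
    goal="Produce a three-bullet summary for non-technical readers",
    input="The raw release notes pasted below the card",
    mechanism="Group changes by user-visible impact, then compress",
    condition="No jargon; skip internal refactors",
    output="Exactly three bullets, each under 20 words",
)
print(card.render())
```

The point of the sketch is only that a card refuses to render while any slot is empty, which is one simple way to force intent to be stated before anything is sent to a model.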

Both of us see that natural language already contains the structure we need to give precise commands—a truth that is still difficult for many programmers to accept. The code was always there, hidden inside the language. The task now is to learn how to read it.

JT Novelo’s Language Blueprint perfectly echoes what I define as the Language–Structure–Execution (语言–结构–调度) trinity. When he says, “You’re not giving ingredients. You’re giving a recipe,” he is describing the same transformation I call the shift from energy → structure → life. Natural language is the raw material (high-entropy input); the structure blueprint is the recipe (structural units); scheduled execution is the cooking (the life process). Language is no longer a passive description of the world but the energetic raw input that, once structured, becomes an executable form, its life realized through scheduling. His Chain of Thought Prompting corresponds to what I call the Structure Chain (结构链), the cognitive layer where reasoning unfolds sequentially, each step verifying and reinforcing the next. This is precisely how a living system sustains itself in time: through self-referential execution loops.
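The idea of a chain in which each step is verified before the next one runs can be sketched in a few lines. This is a toy under stated assumptions: plain string functions stand in for model calls, and the names `Step` and `run_chain` are hypothetical, not part of Linguistic Programming or of any existing library.

```python
from typing import Callable, List, NamedTuple


class Step(NamedTuple):
    """One link in the chain: a transformation plus a check on its result."""
    label: str
    run: Callable[[str], str]
    verify: Callable[[str], bool]


def run_chain(steps: List[Step], raw_text: str) -> str:
    """Run steps in order; stop at the first step whose output fails its check."""
    current = raw_text
    for step in steps:
        current = step.run(current)
        if not step.verify(current):
            raise ValueError(f"step '{step.label}' failed verification")
    return current


# Toy steps standing in for model calls (assumption: real steps would call an LLM).
steps = [
    Step("normalize", lambda s: " ".join(s.split()), lambda s: len(s) > 0),
    Step("extract goal", lambda s: s.split(".")[0], lambda s: len(s.split()) >= 3),
    Step("label", lambda s: f"Goal: {s}", lambda s: s.startswith("Goal: ")),
]

result = run_chain(steps, "  Summarize   the release notes. Keep it short.  ")
print(result)  # Goal: Summarize the release notes
```

Because every step carries its own check, a bad intermediate result halts the chain instead of flowing silently into the next step.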

Thank you again:

https://substack.com/@betterthinkersnotbetterai/posts
