The Age of Intelligent Lego: How Everyone Can Build with AI Agents

You don’t need to be a programmer to shape the future of intelligence — just curiosity, imagination, and a sense for how the pieces fit together.

When I started writing this Substack, I often thought of Thomas Kuhn’s The Structure of Scientific Revolutions. Over the past few years, I have returned to that book again and again, and each reading has affected me more deeply than the last.

In the first half of my life, the education and professional training I received belonged entirely to what Kuhn called normal science. The world I grew up in was neatly divided. Each discipline had its own methods, each profession had its standards and codes. Everything I was taught seemed to exist within a textbook. You learned, you practiced, and you followed the rules that others had already written. It was a stable, predictable way to understand the world.

But in recent years, large language models have changed everything. They have produced too many phenomena that our old theories can no longer explain. Machines now appear to understand meaning, generate ideas, plan actions, and even collaborate with us in ways that feel almost human. For the first time, I realized I was standing at the edge of a new scientific revolution.

Kuhn once wrote,

“An individual or group first produces a synthesis able to attract most of the next generation of practitioners; the old schools gradually disappear.”

I believe we are living in such a moment now. What I am about to share with you is my understanding of AI agents. Even if you have no background in computer science, you will still be able to follow. Because this is not a story about code, it is a story about structure, coordination, and understanding.

The idea of the agent is becoming the new paradigm.

To understand it is to understand the next era.

Introduction: The Next Form of AI Is Not a Model, but an Ecosystem

Most conversations about AI still circle around the models themselves: larger language models, multimodal inputs, new reasoning tricks, more parameters, better benchmarks. But the more I watch these systems at work, the clearer it becomes that the next step is not a bigger model but a different form altogether. If a large model is the engine of intelligence, the agent is the vehicle that carries intention into the world; more precisely, what matters is an ecosystem of small, composable units—intelligent Lego—that can be connected, swapped, and reassembled as goals and contexts change. The real shift is from generation to orchestration: from producing text or images to coordinating perception, memory, tools, and actions into a coherent process that can pursue a goal over time.

People will reasonably disagree about what counts as a “true agent.” Some reserve the word for systems that exhibit autonomy, persistent goals, memory, and self-reflection. Others use it more loosely for any arrangement where a model perceives, decides, and acts through tools. Rather than stall on definitions, I propose a practical compromise: when a system interprets an open-ended objective and carries it through a sequence of reasoning and tool use, we can call that an agentic workflow. The phrase is more precise and less metaphysical, and it lets us talk about what actually works: processes that bind models to retrieval, planning, databases, APIs, and human review, with feedback loops that refine the next step.

Once you look through this lens, intelligence stops being a property of a single model and becomes a property of how components snap together. A language model on its own is a capable brick; retrieval adds memory; function calling adds hands; logging and review add reflection; scheduling adds continuity. When these bricks share clean interfaces—often just structured language—they form circuits of sense, plan, act, and learn. The behavior that emerges from their interaction is the thing we care about: a pattern of cognition robust enough to survive ambiguity, adapt when inputs shift, and reconfigure when a plan fails.

This is why the future belongs to ecosystems. The advantage will not come from one more clever prompt but from the craft of assembly: designing interfaces that make modules interoperable, curating libraries of reliable skills, attaching verification to every action, and teaching systems to choose and rewire their own subroutines as context evolves. In that world, anyone who understands structure—how to decompose goals, how to route information, how to keep a feedback loop alive—can build with intelligent Lego. Call it agents if you like, or, more carefully, agentic workflows. Either way, learning to assemble them is how we step from models to living systems of thought.

II. From Models to Ecosystems: The Birth of the Agent

In the early stage of the AI revolution, the promise of intelligence was expressed mainly through generation. We asked models to write text, draw images, compose code, and summarize information. The excitement was in the fluency and creativity of their output. Large language models, trained on the entire written record of humanity, became mirrors of our symbolic world. They could produce words, sentences, and paragraphs that looked indistinguishable from those written by people. Yet, at that point, they were still static intelligences—closed systems that responded to prompts but did not act beyond language.

That was the first paradigm: AI as generator.

It was powerful, but limited to simulation. A model could imitate thought but could not change the world it described. It had no tools, no memory, and no continuity between sessions. Every prompt began from zero.

The second paradigm emerged quietly when models gained the ability to call functions, retrieve information, and use external tools. A model could now ask an API for a flight schedule, look up a document in a database, or execute a Python function to calculate a number. When a model learns to use a tool, it crosses the line from simulation to action. It begins to operate in a loop that resembles cognition: perceive, reason, act, and verify.

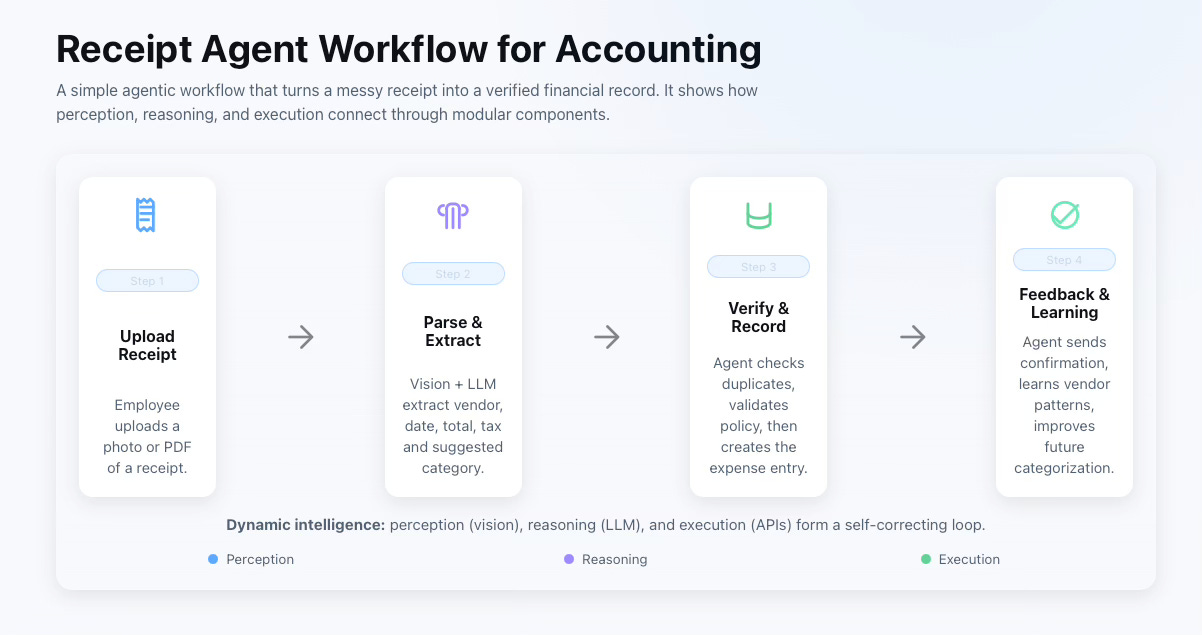

Consider a simple example: a receipt-input agent in a small business.

You take a photo of a crumpled receipt and upload it. A few years ago, a model could only describe it—“this appears to be a restaurant bill”—and maybe extract some numbers from the text. That was generation, a linguistic guess. But now the model can do more. It can use a vision API to parse the image, extract structured data such as vendor, date, total, and tax. It can query your accounting database, check whether this expense has already been recorded, and if not, automatically categorize and file it under “Meals and Entertainment.” Finally, it can send a message to your finance dashboard: “Expense added, awaiting review.”

At that moment, what once was a static model becomes an active participant in your workflow. It perceives, reasons, acts, and confirms—the full cognitive loop of dynamic intelligence. The receipt-input agent does not simply recognize text; it coordinates a small ecosystem of tools, APIs, and records to perform a meaningful business action.

Adding memory deepens that loop. The agent can now remember your preferred expense categories, typical vendors, or recurring mistakes and correct them automatically next time. Goal planning adds another dimension: it can schedule periodic reconciliations, flag anomalies, and prioritize high-value receipts for review. Through this process, the system moves from reacting to tasks to managing intentions.

At that moment, the system stops being a static model and starts to behave as a living process—one that interprets, acts, and learns within a feedback loop. This is the birth of the agentic ecosystem: a constellation of models, tools, and memories that together perform coordinated reasoning.

Agent = LLM + Tools + Memory + Feedback + Self-Planning

This simple equation captures the transformation from language model to agentic system. The intelligence we see here is no longer passive or descriptive. It is dynamic intelligence—an intelligence capable of affecting the environment, responding to uncertainty, and refining its strategy through iteration.

Static intelligence generates possibilities; dynamic intelligence tests them.

Static systems wait for instructions; dynamic systems form intentions.

Once models begin to sense, act, and reflect, they stop being mere engines of prediction and become actors within an ecosystem of cognition.

That is why the true story of AI now shifts from models to ecosystems. Intelligence is no longer something we consume; it is something we compose—a distributed structure that learns to think and operate alongside us.

III. Intelligent Lego: From Function to Structure

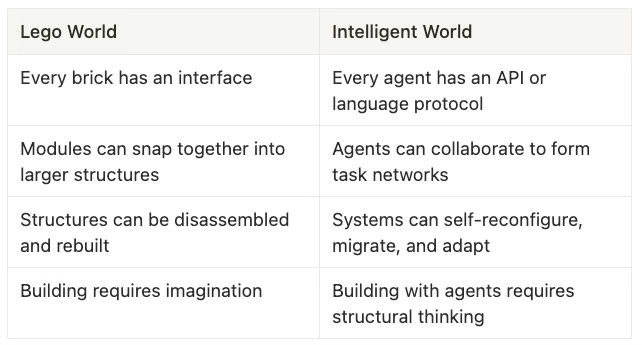

Imagine the world of AI agents as a world made of Lego.

In the same way that every Lego brick has a connector, every agent has an interface — an API or a language protocol that lets it connect to other components. What makes Lego fascinating is not the individual bricks, but how endlessly they can be combined into new forms. The same is true of AI systems today: their power no longer lies in any single model, but in the way these models, tools, and memories interlock to form living, reconfigurable structures of cognition.

“Intelligence doesn’t live inside the brick — it emerges from how the bricks snap together.”

(This orange Lego brick from my son’s set is the simplest way to explain agentic intelligence: by itself, it does nothing—but because it follows a shared design language, it can connect with any other piece to form something far greater. Each AI component works the same way: a model, a memory, or a tool is just one brick, and intelligence emerges not from the brick itself but from how these pieces fit together into a living structure.)

A single large language model, no matter how advanced, is only a language brick.

Tool invocation becomes an action brick, retrieval or RAG serves as a memory brick, and autonomous planning acts as a thinking brick.

When these modules are connected through a common structure protocol, they stop being isolated capabilities and begin to form coordinated behavior — a network of cognition.

And here lies the most important shift: this new mode of creation is open to everyone.

You no longer need to be a programmer to build with intelligent systems.

What matters is not syntax, but structure — not coding skill, but conceptual fluency.

In the same way that a child can build castles or robots from the same set of Lego pieces, anyone can now assemble intelligent workflows by describing how components should interact. A business analyst who understands process flows, an educator designing personalized lessons, a designer experimenting with creative prompts — all of them can become builders in this new ecosystem.

This democratization of intelligence means that structural imagination becomes the new literacy. Those who understand how to connect and orchestrate modules — even without writing a single line of code — can often discover more elegant, efficient, and creative methods than traditional engineers. Many people who deeply understand their domain or have an instinct for structure will, through experimentation, play their way into far better solutions than software teams working from fixed templates.

As with Lego, the real genius is not in having the most pieces, but in knowing how to assemble them.

When you learn to think structurally, every model, every API, every memory source becomes a piece of your cognitive architecture. And as you begin to design not programs but systems of interaction, you realize that the frontier of AI is not automation, but composition — a world where intelligence is built the same way imagination has always been: by snapping one meaningful piece to another, until form gives rise to function.

V. How to Start Building Your Own Intelligent Lego

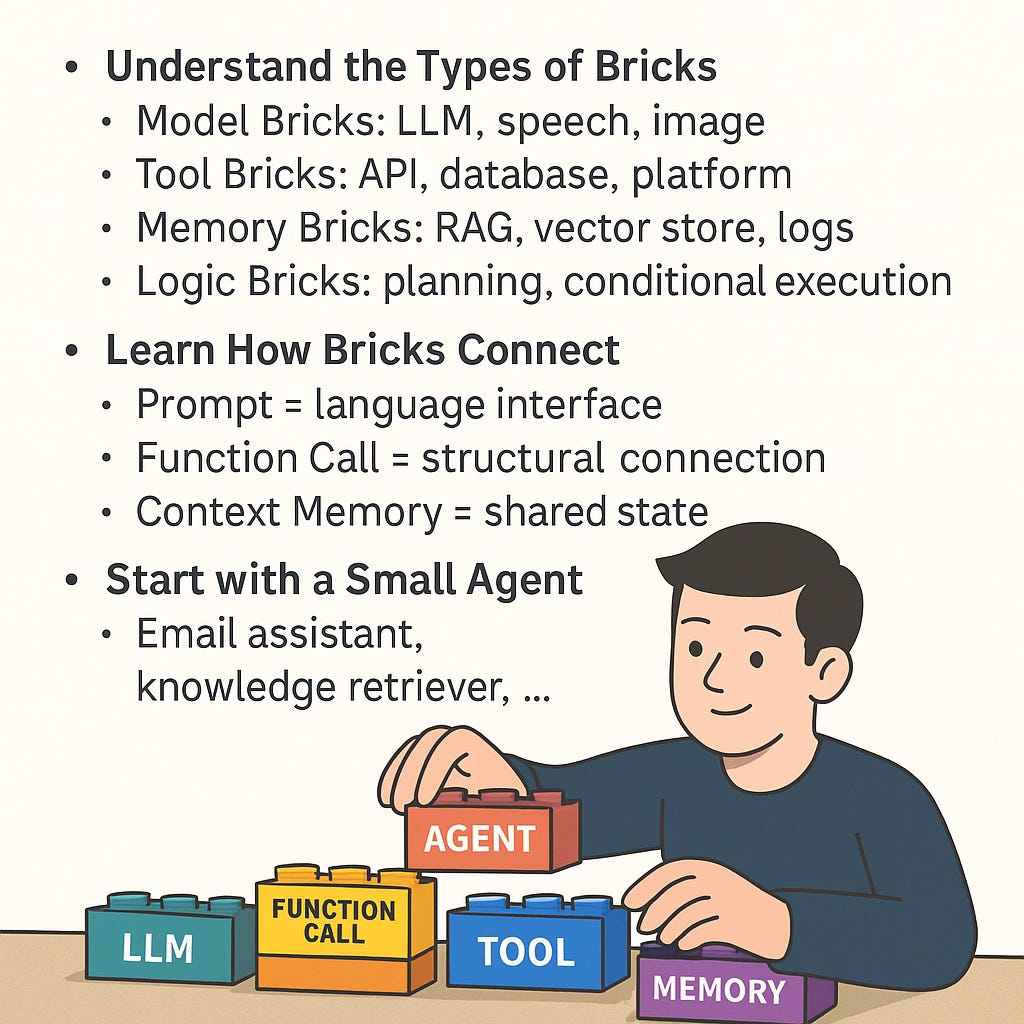

Step 1: Understand the Types of Bricks

Every intelligent system is built from a few fundamental kinds of “bricks,” each serving a different cognitive function.

Model Bricks: These are your thinking components — large language models, speech recognition, image understanding. They interpret, generate, and reason.

Tool Bricks: The hands of your system. APIs, databases, and platform connectors that allow your agent to take action in the real world.

Memory Bricks: The system’s long-term context — retrieval-augmented generation (RAG), vector databases, and logs that store what the agent has learned or seen before.

Logic Bricks: The decision-making core — planning, conditional execution, and reasoning loops that guide when and how other bricks are used.

When you understand these four categories, you start to see any intelligent workflow — from customer service automation to creative writing assistance — as a composition of modular functions rather than a monolithic app.

Step 2: Learn How Bricks Connect

The real creativity begins in how you connect the pieces.

Each connection method defines how information flows through the structure:

Prompt = Language Interface. The simplest connector — where one component’s output becomes another’s instruction.

Function Call = Structural Connection. When a model triggers an external process, this call acts as the stud that links language to action.

Context Memory = Shared State. The baseplate of your Lego system — it lets agents remember and coordinate, keeping the structure coherent over time.

Once you see these as universal interfaces, you realize you’re not programming; you’re describing relationships. You’re deciding how thought, action, and memory talk to one another.

Step 3: Start with a Small Agent

Begin small. You don’t need to build an entire cognitive city. Start with one “brick-sized” intelligent assistant — a self-contained agent that performs a single meaningful task:

An email assistant that drafts and classifies messages.

A knowledge retrieval agent that answers questions from your own notes or documents.

A research summarizer that organizes information from multiple sources.

A content publishing helper that formats and posts your writing automatically.

These are your first Lego pieces — simple, functional, and modular. Once you build one, you can connect it to another. Two or three agents can form a miniature ecosystem, collaborating through shared context or handoff logic.

“Each agent is a brick, and every brick can grow into an ecosystem.”

The goal is not to engineer software, but to learn how intelligence behaves when structured.

Like Lego, the more you build, the more patterns you discover.

Eventually, you stop seeing isolated functions and start seeing systems —

not code, but compositional intelligence coming to life through your design.

For those who has no computer science background

Many people assume that building intelligent systems requires a background in computer science, but the coming era may prove the opposite. Those who understand how ideas, processes, and people fit together — designers, teachers, managers, writers, scientists — often possess a natural sense of architecture. If they begin to experiment with intelligent components the way they once learned new creative tools, they may discover structures that engineers would never imagine. Because what matters now is not syntax, but composition — not coding, but the ability to see how pieces of thought and function can form living systems.

As a practical next step, I highly recommend exploring Andrew Ng’s new course on Agentic AI. It offers a clear and accessible introduction to how agents work, how they connect reasoning with tools, and how anyone — even without a computer science background — can start experimenting with these ideas. It’s an ideal bridge between theory and practice, and a perfect place to begin building your own intelligent Lego.

https://www.deeplearning.ai/