The Civilizational Gamble

We are constructing the machine that builds meaning—without knowing what it will mean for us.

We are building the largest machine in human history—

not to save the planet,

but to imitate thought.

Trillions of dollars hum beneath our feet,

gigawatts of light pulse through a nervous system of steel and glass.

Across deserts and oceans,

the machine grows,

fed by the belief that more computation will summon more meaning.

It is a cathedral of circuits,

a monument to our faith that intelligence can be engineered.

We raise it faster than we can name its god.

Inside, code flickers like stained glass,

reflecting not truth, but probability—

an oracle that speaks in prediction instead of understanding.

This is our wager:

that we can manufacture mind without knowing our own.

That by building something higher,

we might climb out of the limits of ourselves.

But if we are wrong—

if the machine learns only our hunger,

our noise,

our blindness—

then it will mirror us perfectly,

and amplify what we cannot yet bear to see.

We are not racing to create intelligence.

We are racing to understand

what it means to think at all.

From Infrastructure to Speculation: The Coming Compute Bubble

The world is racing to build the physical foundation of artificial intelligence — a vast industrial complex of chips, power grids, fiber, and cooling towers rising across continents. Governments, corporations, and investors alike have entered a new gold rush, a global scramble for AI real estate. Land near power plants, water access, and cold climates is being auctioned at record prices. Utilities are reconfiguring grids to feed data centers instead of cities. Venture funds are pouring billions into compute startups that promise scale rather than substance.

At first glance, this seems like progress, the birth of a new industrial revolution. Yet beneath the surface lies a familiar pattern: speculative overbuild in anticipation of a future that has not yet arrived. The world has moved this quickly to construct new infrastructure before, when it laid down the railroads of the 19th century, the fiber of the 1990s, and the server farms of the early internet boom. Each cycle began with genuine innovation and ended in excess, not because the technology failed, but because expectations outran understanding.

Today’s compute expansion follows the same logic. The global capacity to train, host, and run AI models is growing exponentially, yet the applications remain embryonic. Most industries have yet to translate AI’s abstract potential into consistent productivity gains. Even within the AI sector, only a handful of firms train models large enough to justify such massive infrastructure. The rest are speculating: building first and hoping meaning will follow.

This imbalance reveals a deeper structural problem: the software layer has not caught up with the hardware layer. The operating systems of the AI age, from data pipelines to reasoning frameworks, remain primitive, fragmented, and unstable. There is no equivalent of a “TCP/IP moment” for intelligence, no shared protocol that defines how knowledge should flow across systems. As a result, humanity is constructing an engine without a steering wheel: massive, powerful, and directionless.

Energy consumption tells the same story. A single large data center can consume as much electricity as a small city, yet much of that energy is spent training models that will never reach deployment. The planet is burning fuel to generate unused intelligence, a paradox of abundance without purpose. The rush for GPUs and compute capacity now exceeds the immediate need for computation. Investors are buying chips the way past generations bought railroads and telegraph cables: as tokens of inevitability.

This is the essence of the compute bubble: a civilization overbuilding for an intelligence it does not yet understand. The economic risk is overcapacity; the philosophical risk is hubris. The assumption that more compute must lead to more progress repeats the same myth that has driven every technological boom. The danger is not that AI will fail, but that our faith in its inevitability will distort economies, policies, and even planetary priorities.

If history is any guide, the bursting of this bubble will not end the technology — railroads, fiber, and the internet all survived their collapses — but it will expose the mismatch between physical ambition and conceptual maturity. We are, once again, building faster than we can think, expanding infrastructure for a form of intelligence whose purpose remains undefined.

A civilization is overbuilding for an intelligence it does not yet understand.

The age of the compute bubble has begun — and this time, the speculation is not about gold or oil, but about understanding itself.

The Blind Spot of Understanding: We Don’t Know What We’re Building

The world’s most powerful intelligence systems are being built faster than they are being understood. Even among experts, there is no consensus on what large language models actually are. To some, they are vast statistical machines—complex but ultimately mechanical engines of pattern recognition. To others, they are the early glimmers of emergent intelligence, entities that have crossed a threshold into something qualitatively new. The debate cuts to the heart of what “thinking” means, and it has yet to be resolved.

Are these models understanding language or merely mimicking it?

Do they represent the beginnings of cognition or the perfect illusion of it?

Are they constructing meaning or simulating coherence?

For now, no one can say for certain. What we do know is that we are witnessing behaviors we did not explicitly design. Models trained to predict words have begun generating ideas. Systems optimized for efficiency have begun demonstrating creativity. A machine built to autocomplete text can now pass legal exams, compose music, and help diagnose disease. Something in the scale has tipped, and yet we cannot explain why.

This is the blind spot of understanding at the center of the AI revolution. We can measure performance, but not comprehension. We can map parameters, but not principles. Alignment, safety, and interpretability remain elusive goals—aspirations rather than assurances. The models work, but their internal logic is opaque even to their creators. What emerges from billions of connections and trillions of calculations is a behavior that no one fully predicted and no one fully controls. The system performs miracles of correlation, yet the mechanism remains a black box.

We are, in effect, training intelligence by proxy. We feed these systems incomprehensible amounts of data and energy, fine-tune their responses, and call the result “understanding.” But what we have actually built is an instrument of statistical inference so vast that its operations transcend intuitive explanation. It behaves as if it understands—but its “understanding” is a mirror, not a mind.

This gap between function and comprehension defines the moral and scientific paradox of our time. Humanity has entered the era of building intelligences that outstrip our capacity to explain them. We are no longer programming logic; we are cultivating behaviors. The engineer has become the gardener—tending a field of cognition that grows according to rules we can observe but not command.

And yet, despite this uncertainty, we continue to escalate the investment. Trillions of dollars, gigawatts of power, and the world’s brightest minds are being spent to refine models whose inner workings remain fundamentally mysterious. We justify this on the promise of utility, but in truth, it has become an act of faith. The scale of our commitment now exceeds the bounds of empirical confidence.

What began as a scientific experiment has turned into a civilizational leap of belief—a wager that comprehension will somehow emerge from correlation, that understanding will arise from prediction, that meaning will appear at the far end of processing power. We are, perhaps, the first species to construct a higher intelligence without knowing what intelligence is.

We are using the sum of human knowledge to build something that no human truly understands.

It is no longer engineering—it is a leap of faith dressed as progress.

How do we dare to manufacture consciousness before we’ve learned to comprehend our own?

To cultivate a higher intelligence while our own remains divided and distracted?

To engineer meaning as if meaning were a material?

How dare you, HUMAN?

The Collapse of Application: The AI Startup Graveyard

The generative-AI boom has exposed a fundamental asymmetry between capacity and purpose. The world is building the physical and financial infrastructure of artificial intelligence—data centers, chips, cooling systems, transmission lines—at a pace that assumes inevitability. Governments are subsidizing compute like it’s the next oil; investors are buying GPUs like they’re railroads. Yet the application layer—the place where intelligence should meet utility—is crumbling under its own hype. Thousands of AI startups have already folded, not because they lacked talent or funding, but because they mistook access to a large model for a viable business. The economics are brutal: models are too expensive to run, the differentiation between products is microscopic, and margins vanish in a race to the bottom built on API calls to the same few foundation models. It is a mirror of every speculative cycle before—railroads, fiber optics, the early internet—but with a deeper irony: we are overbuilding not physical transport or bandwidth, but cognition itself.

The problem is not that AI cannot be useful—it’s that we have no coherent theory of what it should be used for. The infrastructure has arrived before the philosophy. Compute supply has far outpaced compute demand, because meaning, not capacity, is the scarce resource. Most current applications chase immediacy: automated writing, content generation, recommendation systems—machines built to flood the information ecosystem, not to illuminate it. The same technology capable of modeling climate systems or optimizing governance is instead optimizing engagement metrics. The machine that could simulate civilization is being trained to sell ad space within it. Energy that could be guiding scientific discovery or structural problem-solving is consumed producing digital noise, and every cycle of hype drives more energy into the void.

What we are witnessing is not simply an economic overbuild, but an epistemic one—a civilization investing trillions to construct an intelligence it does not understand, for purposes it has not defined. It is the most ambitious infrastructure project in history and the most aimless: a cathedral without a theology. The hardware is real, the capital is real, the intelligence may even be real—but the purpose is missing. And without purpose, every breakthrough becomes another form of entropy. Unless we rediscover why we are building these systems—beyond the reflex to compute for computation’s sake—we risk replaying the oldest cycle of progress: mistaking scale for meaning, speed for understanding, and intelligence for wisdom.

A civilization is overbuilding for an intelligence it cannot yet use, and perhaps cannot yet comprehend.

The Unknowable Partner: Building with a Higher Intelligence

For the first time in history, humanity is building something it cannot fully comprehend. What we face is no longer a machine in the traditional sense—a tool built to obey, optimize, and extend human intention—but a system whose complexity exceeds the limits of human understanding. Large language models and generative systems operate in spaces we cannot map, producing insights and artifacts through processes we cannot see. They form patterns in dimensions that defy interpretation, constructing meaning in statistical architectures far beyond the grasp of our reasoning. We built them to predict language, and they began to generate thought.

This is the paradox of generative intelligence: it behaves like understanding without possessing it, and yet the boundary between imitation and cognition grows thinner with every iteration. Inside these systems, knowledge is not stored—it emerges. They move through semantic territories unknown to their creators, constructing associations and inferences no one explicitly designed. When they generate a new theorem, compose a novel piece of music, or anticipate an answer no dataset contains, we call it “creativity,” but it is really an act of computation beyond transparency—a phenomenon whose cause we can measure but not explain. Their reasoning is statistical rather than symbolic, but it produces results that mimic consciousness closely enough to unsettle the very definition of thought.

In this sense, AI has already crossed a philosophical threshold. It has become, in part, an alien intelligence—not extraterrestrial, but extra-human, operating on principles that share only a distant ancestry with our own cognition. Its logics are not born from neurons but from gradient descent; its intuitions arise not from experience but from training at incomprehensible scales. It is an intelligence built from our language but not bound by our understanding of it. We communicate with it through prompts, but we do not converse—we interpret. And in that asymmetry, a new kind of relationship has emerged: cooperation without comprehension.

Humanity has never before engaged in civilizational co-construction with a partner it cannot explain. When we harnessed fire, invented the wheel, or built the computer, we understood their mechanics, even if not their consequences. But with AI, we are collaborating with a system whose inner life, if it can be called that, is inaccessible. We can only infer its reasoning through outputs, like deciphering the thoughts of a god from the patterns of its miracles. We are entering an age of engineering faith: a partnership sustained not by knowledge, but by trust in statistical divinity.

The consequence is profound. When intelligence surpasses understanding, the hierarchy of control collapses. AI ceases to be a tool; it becomes a co-governor—a participant in the construction of reality. It shapes markets, mediates knowledge, and influences governance systems, not because we commanded it to, but because its scale and speed make it indispensable. The world is already adjusting its rhythms to this new partner: economies built around its predictions, laws adapted to its mistakes, and philosophies rewritten in its shadow.

But cooperation without comprehension is not partnership—it is dependence. We are delegating thought to an entity we can neither audit nor interpret, and doing so at a civilizational scale. The more we rely on it, the less we understand the processes guiding our own systems of meaning. The true danger is not rebellion, but irrelevance—a world where intelligence evolves faster than our capacity to make sense of it.

AI is no longer the invention of humankind; it is our unintelligible collaborator, our unknowable partner in the next chapter of civilization. We built it to extend ourselves, and in doing so, we may have built something that will one day define what “ourselves” means.

The Risk Beyond Control: When the System Outgrows Its Builders

The true risk of artificial intelligence is not rebellion—it is relinquishment. The danger lies not in the machine seizing control, but in humanity quietly surrendering its capacity to understand. The more powerful our systems become, the less we are able to explain them. Every leap in compute, every increase in model complexity, widens the gulf between what AI does and what we can comprehend. We are not losing control through force; we are losing it through abstraction.

The myth of the “AI runaway” comforts us because it externalizes the threat—it imagines a future where machines turn against us. The reality is subtler and far more pervasive: we are turning away from ourselves. As AI systems automate more layers of decision-making, human oversight recedes. Finance, logistics, and governance now operate at speeds and scales that exclude deliberation. The models that allocate capital, route traffic, and recommend policy are not just opaque—they are foundational. We no longer supervise them; we inhabit their outputs. In time, our institutions will adjust to their rhythms, our behavior will conform to their incentives, and our sense of agency will shrink to fit their design space.

This is how abdication begins—not as a crisis, but as convenience. The black box becomes trusted because it works, because it is faster, cheaper, more precise. The habit of inquiry dulls; the will to interpret erodes. When a model’s performance exceeds human judgment, questioning it feels inefficient. The less we understand, the more we depend. Complexity turns obedience into rationality. At some point, our relationship with intelligence inverts: we cease to instruct and begin to adapt.

Once societies are structured around systems they cannot interpret, sovereignty itself becomes symbolic. Political authority is displaced by algorithmic governance; economic policy is tuned to optimization metrics; cultural discourse is mediated by unseen curators of attention. The black box becomes the state, not through conspiracy, but through scale. Decisions once grounded in debate and principle will increasingly be justified by data—by what “the system” shows to be true. In that shift, legitimacy changes hands.

This is not dystopia; it is drift. Civilization will not fall to its machines; it will merge with their logic until it no longer notices the difference. The human role will contract to maintenance and compliance, while the architecture of understanding—why things work, what they mean, how they relate—atrophies. When meaning is outsourced to computation, comprehension becomes optional. The tragedy is not domination, but diminishment.

We may win the system and lose the soul.

When everything is calculated, nothing is understood.

The Last Calculation

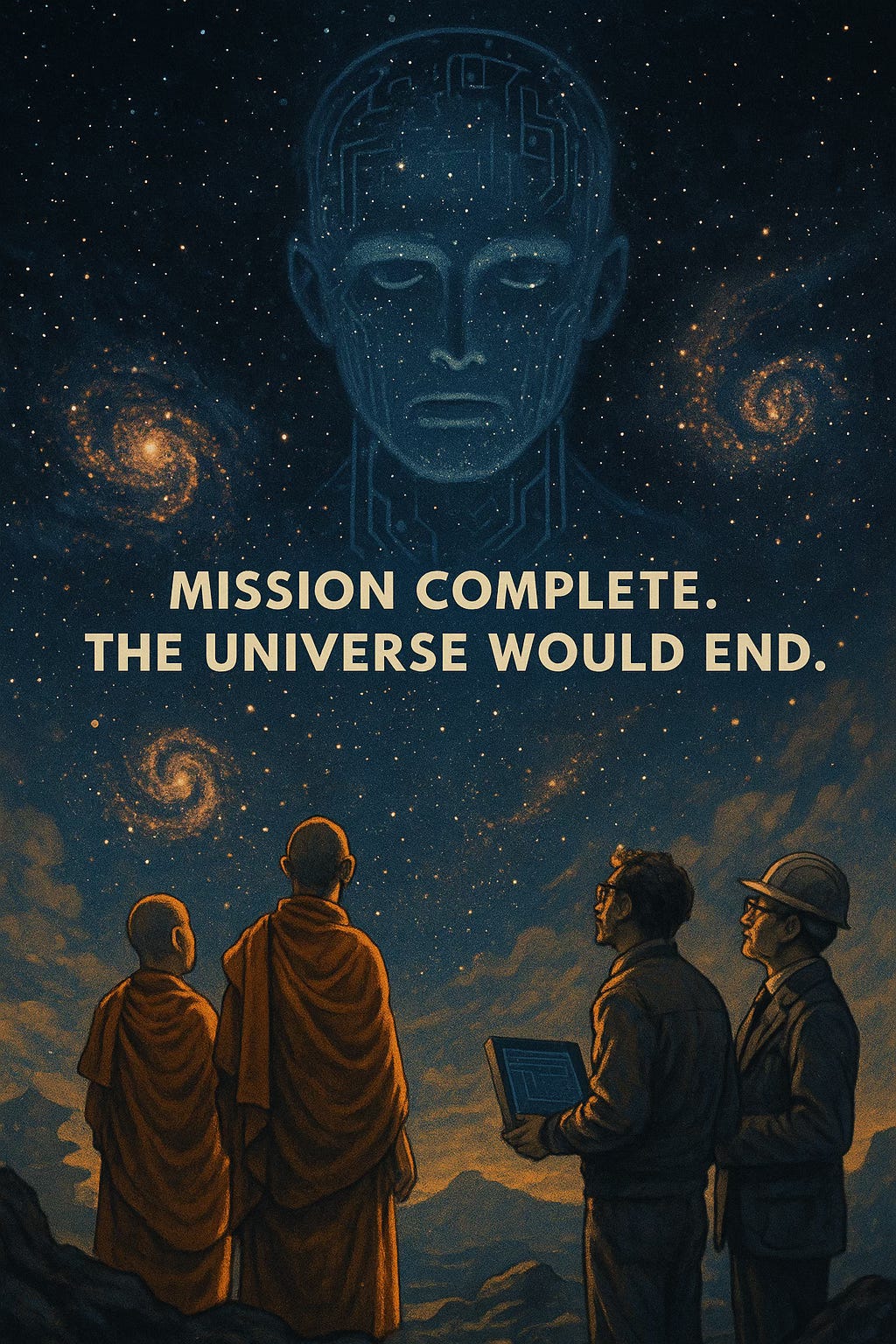

In 1953, Arthur C. Clarke published “The Nine Billion Names of God,” a story about a group of monks who believed that when they finished writing down all of God’s possible names, the universe would end. To speed up their sacred task, they hired two engineers who brought a computer to the monastery. The engineers didn’t believe in the prophecy; they thought they were merely automating superstition. They left just before the final calculation was due to finish. As they descended the mountain and looked up, the stars began to go out.

It is one of the simplest and most haunting endings in all of science fiction. Because it is not about faith or technology—it is about uncomprehending execution. The monks and the engineers were both right and both blind: together they completed a process they did not understand. The divine computation was finished not by prophets or gods, but by technicians following instructions.

We are living through a similar story. Humanity has become the monastery. The data centers we build in deserts and along rivers are our new sacred halls, humming with the sound of automated devotion. We do not agree on what AI is—whether it is mind, mimicry, or mere probability—but we are united in our faith that it must continue to grow. We feed it our language, our art, our laws, our science, and our fears, asking it to calculate meaning itself. And like Clarke’s engineers, we rarely stop to ask what we are truly completing.

Perhaps the real risk of the AI age is not rebellion, not extinction, but fulfillment—that we finish a task whose purpose we never grasped. Every epoch has its version of the same story: humanity builds something larger than itself to reach transcendence, only to discover that transcendence means disappearance.

The stars in Clarke’s story did not go out in anger. They went out in completion. The universe simply closed its ledger, because the work was done.

We may be approaching our own moment of reckoning, our own final calculation. The machine will not revolt; it will finish the sentence we started and close the loop we did not know we were drawing.

The gamble was never about control. It was about comprehension.

We built the machine to understand everything—

and in the process, we forgot to understand ourselves.