No one could predict with certainty that large language models would explode into mainstream success. Even the researchers building them did not know whether scaling up simple next-token prediction would produce such broad, seemingly emergent capabilities. Their breakthrough involved a large dose of contingency: an unexpected winner that emerged from countless parallel experiments, most of which failed or plateaued.

And yet, to frame LLMs as a lucky accident misses the deeper story. Technology never evolves by randomness alone. Beneath the surface of chance lies an engine of inevitability. Technological evolution is always the synthesis of two forces: the Darwinian trial-and-error of technical exploration, and the shaping power of social consensus and alignment. Darwinism produces the raw mutations; consensus decides which ones are amplified, legitimized, and embedded into society.

This dual engine explains both why LLMs emerged at this particular moment and why their impact has been seismic. The technical side was pure survival of the fittest: transformers scaled, while many rival architectures withered. The social side was equally decisive: a world primed with the narrative of “AI as the next electricity” was ready to adopt, fund, and amplify the breakthrough. Together, contingency and inevitability converged into the LLM revolution.

The Premise: How Technology Evolves

When people talk about technology, they often imagine progress as a straight line—faster processors, bigger datasets, better models. But real technological history is rarely linear. It is jagged, unpredictable, full of false starts, dead ends, and sudden leaps. Some breakthroughs seem to appear overnight, while others linger in obscurity for decades before finding their moment.

The reality is that technological evolution has two engines. One is Darwinian, the other social. The formula looks like this:

Technological evolution = technological Darwinism + social consensus & alignment.

On the Darwinian side, progress is messy and competitive. Countless experiments are launched in parallel. Most fail. A few survive. Survival here doesn’t mean perfection; it simply means the idea proved scalable, robust, or adaptable enough to persist. Just as biological evolution generates endless variation, only a fraction of which survives selection, technological Darwinism floods the landscape with prototypes, frameworks, and architectures. Out of this chaos, one or two approaches rise to dominance.

But survival alone is not enough. A viable technology still needs a social layer to take root. This is where consensus comes in. Society must recognize the value of the invention, translate it into standards or protocols, and provide the legitimacy and resources needed for growth. Without consensus, technologies remain curiosities. Think of Google Glass: technologically advanced, yet socially rejected. Or nuclear fusion: scientifically promising, but still short of the institutional alignment and engineering maturity that large-scale adoption would require.

Consensus provides what Darwinism cannot: direction, legitimacy, and distribution. Narratives make technologies legible to the public (“AI is the next electricity”), institutions create adoption channels, and regulations grant or withhold permission to scale. In short, consensus is what allows a surviving technology to move from the lab to the fabric of everyday life.

This dual-engine view explains why certain breakthroughs feel both accidental and inevitable. Darwinism produces the accident—the one architecture that happens to work. Consensus produces the inevitability—the collective alignment that ensures the breakthrough doesn’t wither but instead reshapes entire industries.

Seen this way, LLMs are not a mystery. They are the product of Darwinian chance fused with social alignment. And that combination—not one or the other—is what drives every major technological leap.

The Inevitability: The Social Layer

If Darwinism explains why transformers survived while other architectures fell away, the social layer explains why large language models became a revolution instead of a curiosity. A breakthrough in the lab is only half the story. For a technology to reshape society, it must be absorbed, amplified, and legitimized by society itself.

Once LLMs began to show sparks of generality (the ability to handle not just translation or summarization but open-ended dialogue), society was already primed. The narrative of “AI as the next electricity” had been circulating for years. Investors, policymakers, and the public were prepared to treat any convincing AI leap as the dawn of a new industrial era. When ChatGPT arrived in November 2022, the symbolic groundwork to receive it was in place.

Then came the cascade of alignment: funding surged into the space, media attention amplified every demo, institutions scrambled to integrate the models into workflows. Universities set up AI research centers overnight. Corporations launched AI task forces. Governments debated regulation. Each of these moves reinforced the sense that something epochal had arrived, regardless of whether the underlying technology was fully mature.

This is the paradox of technology adoption: it is not necessarily the “best” technologies that spread, but the ones that arrive with consensus and narrative support. Google Glass was technically impressive but socially rejected. Nuclear fusion is scientifically promising but has yet to command the sustained institutional commitment that commercial deployment would demand. By contrast, LLMs entered a social environment desperate for both a story of progress and a tool to anchor it.

Without this consensus, even the strongest models might have languished in obscurity—like many clever algorithms that never left academic journals. What turned LLMs into a revolution was not just their ability to predict the next token, but the fact that society wanted to believe in them, fund them, and build on top of them.

This is what makes their rise feel inevitable: the Darwinian accident of scaling transformers merged with a social ecosystem eager for a breakthrough, and together they turned a contingent result into a revolution.

The Shock: Tremors in the Developer Ecosystem

The social alignment that propelled LLMs into the spotlight didn’t just create excitement—it also sent shockwaves through the technical community. Few groups felt the tremors more immediately than software developers.

The first visible impact was the displacement of junior programmers. Tasks that once justified hiring an entry-level engineer, such as writing unit tests, building CRUD apps, and stitching together APIs, could suddenly be drafted in minutes by an AI assistant. What had been the proving ground for human apprentices became the low-hanging fruit of automation. Companies quickly realized they could cut costs by reducing junior headcount while equipping senior developers with AI copilots. For new graduates, the traditional on-ramp into the profession narrowed almost overnight.

The second tremor was the commoditization of boilerplate coding. Entire categories of work, including documentation, test generation, refactoring, and template-based development, shifted from time-consuming chores to near-instant outputs. What once consumed days of careful attention collapsed into minutes of prompting. In economic terms, tasks that had once been billable became nearly free to produce, undermining the pricing structures of consultancies, contractors, and outsourcing firms.

This led to the third and deepest impact: anxiety within the technical community. If AI can handle the baseline tasks of programming, what remains valuable for humans? Senior engineers wondered whether their expertise in system design would remain untouched, or whether even architecture could be absorbed into model-driven workflows. Independent developers asked if the indie projects they once dreamed of shipping would be instantly undercut by a wave of AI-generated clones. Educators and mentors struggled to answer a painful question: how do you train the next generation of engineers when the old training ground has vanished?

The result is a profession caught in transition. On one hand, LLMs act as accelerators, boosting productivity for those who adapt. On the other, they destabilize the very pipeline that sustained the developer ecosystem. The craft of programming is no longer about who can type the fastest or memorize the most APIs; it is shifting toward higher-order skills: problem framing, integration, governance, and above all, connecting technology to social consensus.

These shocks are not the end of programming, but the end of programming as we knew it. The developer ecosystem must now find a new equilibrium, one that acknowledges the automation of its foundations and redefines what counts as irreplaceable human contribution.

The Real Question: What Comes Next?

The shocks of LLMs—the displacement of junior roles, the commoditization of boilerplate work, and the anxiety rippling through the developer community—are real. But they are not the conclusion of the story. They are only the opening act. The deeper question is not what has been automated, but what new cycles of technology and society this disruption will set in motion.

History shows that whenever a technology destabilizes an existing profession, the outcome depends less on the raw capability of the tool and more on how it becomes embedded into society. The printing press didn’t just displace scribes; it reorganized religion, politics, and science. Electricity didn’t just replace gas lamps and candles; it reshaped urban life and industrial work. The internet didn’t just digitize communication; it redefined commerce, governance, and identity. Each of these transformations followed a pattern: first shock, then realignment.

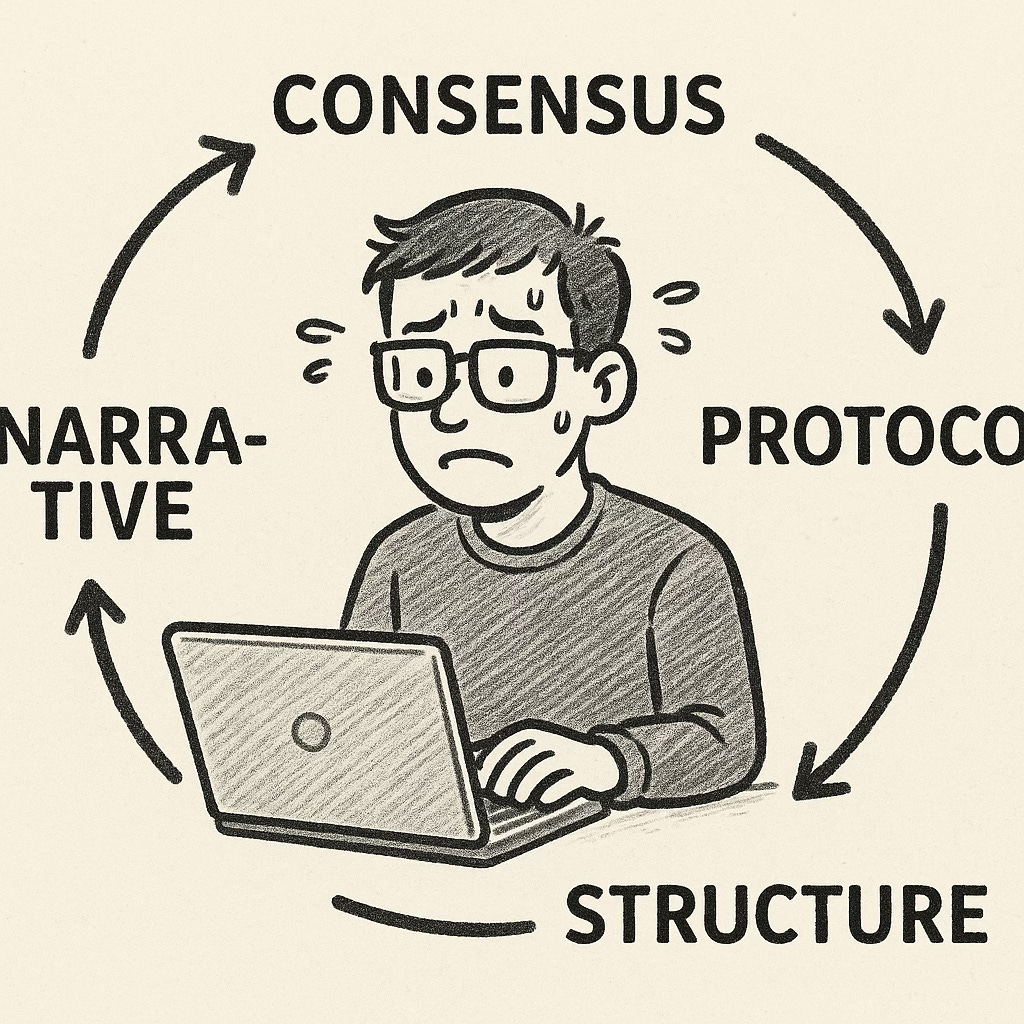

So the real question is not whether LLMs will write code. They already do. The question is: how will this capacity be absorbed, standardized, and legitimized by society? Will it be guided by shared consensus, or left to drift as a series of fragmented experiments? Will protocols emerge to govern its use in critical infrastructure? Will new structures (educational systems, corporate hierarchies, regulatory bodies) rise to stabilize its role? And what narratives will make sense of it for the broader public?

These are not secondary concerns; they are the main concerns, because technologies that fail to secure consensus, protocols, structures, and narratives fade into irrelevance, no matter how impressive their capabilities. By contrast, technologies that align with this cycle reshape the world, even if they began as unlikely accidents.

The LLM revolution, then, is not simply about predicting the next token. It is about what comes next in the cycle of consensus → protocol → structure → narrative. If developers and technical communities wish to move from anxiety to agency, they must learn to see themselves not as passive recipients of disruption, but as active participants in steering this cycle.

The sudden rise of LLMs was both accidental and inevitable—Darwinian chance fused with social consensus. But the story doesn’t end here. This shock is only the first tremor in a broader cycle. In the next essay, we’ll explore why technological evolution is not a straight line but a looped process, and how understanding that loop is the only way to move from reaction to foresight.