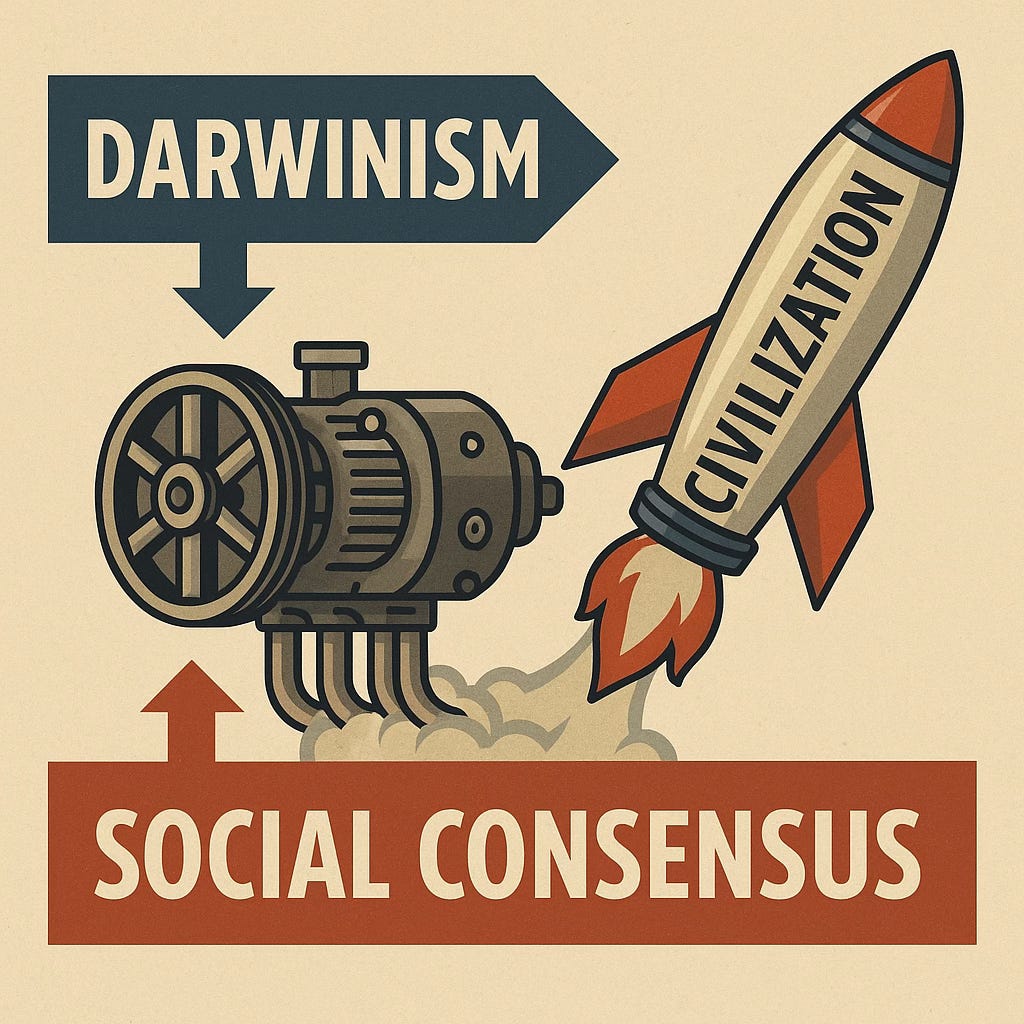

The Dual Engine of Technology: Darwinism + Social Consensus

How LLMs evolved through chance, narrative, and a community unprepared for a Renaissance-level disruption.

The story of large language models is not just about technical progress; it is about how Darwinian trial-and-error collided with social consensus. I remember my own reaction when the first wave of breakthroughs arrived: confusion mixed with exhilaration, and weeks of sleepless exploration until my neck injury flared up again. Others, like Andrew Ng, grasped the significance earlier and used their influence to take part in the narrative cycle from the start.

But for much of the developer community, the shock was overwhelming. The system of education and training had not prepared programmers to think historically about technology’s evolution. Most knew how to code, but few knew how to situate LLMs in the long arc of computing. When Sam Altman called this a “Renaissance-level event,” he was not exaggerating. It was not just a tool arriving; it was a tidal wave crashing against the post–World War II division of knowledge, disciplines, and professions.

This is why understanding technology as a dual engine, Darwinism plus consensus, is essential. Without it, the excitement feels like chaos and the shock feels like catastrophe. With it, we can see the deeper pattern behind LLMs’ rise and begin to navigate what comes next.

Darwinism in LLMs: The Contingency of Breakthroughs

Large language models were not inevitable. For decades, AI research cycled through waves of optimism and disappointment. Symbolic AI promised rule-based intelligence, but collapsed under the weight of brittle logic. Expert systems in the 1980s generated excitement, only to prove narrow and unscalable. In the 2000s and 2010s, recurrent neural networks and LSTMs brought improvements to sequence modeling, but they, too, struggled to scale. Each of these attempts looked promising, attracted funding, and then faltered.

In retrospect, the rise of transformers looks obvious. But at the time, it was anything but. The original transformer architecture, introduced in a 2017 paper, was designed for machine translation, not for creating a universal symbolic engine. The discovery that these models could scale, first to billions and then to hundreds of billions of parameters, was less a master plan than a Darwinian accident. Researchers were not certain it would work. They simply tried, failed, retried, and eventually stumbled onto an architecture that survived the brutal environment of benchmarks, funding cycles, and peer review.

I remember my own reaction when the first signs of generality appeared. Confusion and exhilaration blurred together. For weeks, I barely slept, chasing every experiment I could find, testing prompts, probing limits, convinced that something fundamental had shifted. The intensity left me physically wrecked—my neck injury flared up badly—but the intellectual thrill was undeniable. This was what Darwinian chaos felt like at the technical layer: thousands of trials converging into a fragile but undeniable breakthrough.

This is the essence of technological Darwinism: massive parallel experimentation, most of it destined to fail, with only a few paths surviving long enough to prove viable. Transformers scaled while others withered, not because they were obviously destined to win, but because they happened to align with the conditions of the moment—data availability, compute power, and a research community desperate for progress.

LLMs, then, are not the product of a single genius or a perfectly rational design. They are the survivors of a long evolutionary struggle. Their contingency reminds us that technology does not advance in straight lines, but in branching, chaotic forests of exploration, where survival often looks accidental in hindsight.

Consensus and Narrative: The Inevitable Layer

Darwinian experimentation explains how transformers survived the chaotic competition of architectures, but it does not explain why they exploded into the center of global attention. Many technologies survive in labs for decades without ever touching mainstream society. What transforms a technical survivor into a world-changing inevitability is the social layer: consensus and narrative.

Once sparks of generality appeared in LLMs, when they could move beyond narrow tasks like translation and begin engaging in open-ended dialogue, the narrative machine switched on. Thought leaders began framing these models not as incremental progress, but as epochal breakthroughs. Andrew Ng’s phrase “AI is the new electricity” spread quickly, giving the public a metaphor they could immediately grasp: just as electricity once reshaped every industry, AI would now seep into everything.

Influential figures understood this cycle early and leveraged it. Andrew Ng, for example, didn’t just contribute technically—he used his reach as an educator and entrepreneur to amplify the message, placing LLMs into the broader story of AI transformation. By doing so, he helped build the consensus that these models were not fringe curiosities but the inevitable future of computing.

Sam Altman pushed the framing even further, calling this a “Renaissance-level event.” That phrase was not a technical description—it was a civilizational narrative. By invoking the Renaissance, Altman positioned LLMs not as a new tool for programmers, but as a disruption capable of collapsing disciplinary boundaries, reshaping knowledge systems, and redefining what it means to be human in relation to machines. Whether one agreed or not, the narrative was powerful. It provided legitimacy and urgency.

This is the key point: consensus turns accidents into inevitabilities. Without a receptive social environment, even the most capable models might have remained curiosities buried in research papers. But with narratives that resonated—electricity, Renaissance, the “last invention humanity needs”—the technology gained momentum far beyond the lab. Institutions began to reorganize around it. Capital flooded in. Media cycles reinforced the story daily.

In this sense, the rise of LLMs was never purely technical. It was the convergence of a Darwinian survivor with a society primed for a story of salvation, disruption, and inevitability. That convergence is what transformed a contingent breakthrough into a world-shaking revolution.

The Shock to the Technical Community

If Darwinism explains the contingency of LLMs and consensus explains their inevitability, then the next chapter is about impact—specifically, the shockwaves felt inside the technical community. The panic was immediate, and it wasn’t only about code. It was about culture, education, and identity.

Why such panic? Because most programmers had not been trained to think historically about technology’s evolution. Computer science education tends to emphasize syntax, frameworks, and immediate employability. University curricula and coding bootcamps prepare students to pass interviews, solve algorithmic puzzles, and get productive quickly in a specific stack. But very little time is devoted to the lineage of computing—the story of how technology evolves through cycles of trial, failure, consensus, and reinvention.

This lack of historical grounding left many developers blindsided. When the Darwinian accident of LLMs collided with consensus-driven hype, the developer community had no interpretive framework. Instead of seeing LLMs as part of a recurring evolutionary pattern, many saw them as an existential rupture. Anxiety spread: if machines can generate working code, what role remains for the human developer?

The immediate consequences were stark. Junior programmers were displaced first, as their bread-and-butter work—boilerplate coding, writing unit tests, scaffolding APIs—was suddenly trivialized. Career ladders collapsed as the entry-level rungs disappeared. Even experienced developers saw parts of their workflow—documentation, refactoring, routine debugging—automated overnight.

But the shock was deeper than economics. It was cultural. Programming had long been treated as a craft, with mastery built over years of incremental practice. Suddenly, the early stages of that craft felt obsolete. For educators, the question was existential: how do you train the next generation when the traditional training ground is gone? For working engineers, the question was personal: what counts as valuable contribution when the machine can now do what once defined your skill?

The shock, then, was as much cultural and educational as it was technical. The disruption exposed a blind spot: without a broader understanding of how technologies evolve, and of how consensus transforms accidents into revolutions, the technical community was left reacting emotionally rather than strategically. What is needed now is not just retraining in new tools, but a reframing of how developers see themselves within the larger cycles of technological change.

A Renaissance-Level Disruption

To call LLMs “just another tool” misses the scale of what is unfolding. Word processors automated the retyping and formatting of text. Spreadsheets automated arithmetic. LLMs automate meaning itself: compressing, recombining, and generating language across every field where symbols carry value. This is why their disruption is not confined to programming. It is reverberating across the entire post–World War II order of disciplines and professions.

That order was built on division. After WWII, knowledge was systematically carved into silos: computer science here, linguistics there, law in one faculty, sociology in another. Each discipline developed its own language, its own standards of legitimacy, and its own gatekeeping rituals. This division stabilized education, labor markets, and professional hierarchies for nearly eighty years.

LLMs challenge this settlement by flooding the space with compression and recombination. A model trained on vast corpora doesn’t care whether a sentence comes from a legal ruling, a sociological study, or a programming manual. It breaks them all into tokens and embeds those tokens in the same vector space, where connections humans rarely noticed can surface. Suddenly, the boundaries between fields, once maintained by institutions, departments, and guilds, start to dissolve.
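For readers who want to see the "same vector space" idea in miniature, here is a rough Python sketch. It is a toy stand-in and not how any real LLM works: real models use learned subword tokenizers and learned embeddings, while this sketch assumes a whitespace tokenizer and a simple hashing trick. The names `DIM`, `tokenize`, `embed`, and `cosine`, and the two sample sentences, are hypothetical and chosen only for illustration.

```python
import math
import zlib

DIM = 16  # hypothetical vector width, picked only for this illustration


def tokenize(text):
    """Split text into lowercase word tokens; the source domain is ignored."""
    words = (w.strip(".,;:()").lower() for w in text.split())
    return [w for w in words if w]


def embed(text, dim=DIM):
    """Map any text to a unit vector in one shared space (feature hashing)."""
    vec = [0.0] * dim
    for tok in tokenize(text):
        # A stable hash sends the same token to the same slot,
        # whichever field the sentence came from.
        vec[zlib.crc32(tok.encode("utf-8")) % dim] += 1.0
    norm = math.sqrt(sum(x * x for x in vec)) or 1.0
    return [x / norm for x in vec]


def cosine(a, b):
    """Cosine similarity of two vectors that are already unit length."""
    return sum(x * y for x, y in zip(a, b))


legal = "The court held that the contract was void."
code_doc = "The function returns void when the contract check fails."

# Both sentences land in the same 16-dimensional space, so they can be compared.
print(cosine(embed(legal), embed(code_doc)))
```

The point of the sketch is only that nothing in `embed` asks which discipline a sentence belongs to. Once a legal ruling and a programming manual share one representation, comparing them becomes an ordinary operation rather than a crossing of institutional boundaries.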

This is why Sam Altman’s phrase, a “Renaissance-level event,” resonates. The historical Renaissance was not just an artistic blossoming. It was a collapse of boundaries: art and science, philosophy and politics, religion and commerce all reconnected in new ways, producing a surge of creativity and social upheaval. LLMs threaten a similar collapse today. They dissolve the walls that kept disciplines separate, forcing us to reconsider what counts as expertise, authorship, and intellectual labor.

The key point is this: Darwinism plus consensus doesn’t just evolve technology; it forces society to reorganize knowledge itself. The Darwinian accident of transformers surviving the chaos of AI research became inevitable once society adopted the narrative of “AI as electricity.” But inevitability has consequences. It shakes not only industries and professions, but the entire architecture of knowledge.

Seen in this light, the disruption to programmers is only the first tremor. Academia, law, healthcare, policy—all will feel the quake as the silos built after WWII start to fracture. What comes next will depend not only on how the technology advances, but on how society chooses to reorganize itself around this Renaissance-level collapse of boundaries.

What This Teaches Us: The Dual Engine as a Lens

The story of LLMs reveals a deeper truth about technological evolution: it cannot be understood through a single lens. Darwinism alone explains the chaotic experimentation that produced transformers, but it does not explain why they left the lab. Consensus alone explains why society embraced them, but it does not explain why this particular architecture—out of dozens—was there to be embraced. To make sense of disruption, we need both lenses.

On the Darwinian side, innovation is messy. Researchers try countless approaches, most of which fail. Symbolic AI collapsed. Expert systems plateaued. RNNs struggled to scale. Transformers, almost by accident, survived. This is chaotic innovation: survival through variation and trial, with no guarantee of success.

On the consensus side, society must decide which survivors matter. Consensus provides legitimacy, narrative, and adoption channels. Andrew Ng framed LLMs as transformative; Sam Altman called them a Renaissance-level event. Media, investors, and policymakers repeated the story until it solidified. Without that consensus, even the most capable model could have remained a curiosity—another clever paper gathering dust. With consensus, the breakthrough became inevitable, attracting billions in funding and reorganizing institutions worldwide.

This dual engine is what turned LLMs from contingency into inevitability, from laboratory novelty into a civilizational shock. Without Darwinism, there would be nothing new to legitimize. Without consensus, there would be no way for the new to scale. Together, they not only drive technological adoption but also reshape the structures of society itself.

The lesson is clear: every future breakthrough will emerge from this same synthesis. Whether in biotech, energy, or governance, the survivors of Darwinian chaos will only matter if consensus amplifies them. And when both forces align, the result will not just be new tools—it will be disruptions capable of reorganizing disciplines, professions, and even the architecture of knowledge.

LLMs, then, are not an anomaly. They are a case study in how technology always evolves. By using the dual engine as a lens, we can stop being surprised by disruption and start preparing for it—recognizing that every “accident” is also a candidate for inevitability, depending on how society chooses to receive it.