Before we go further, let me make this journey a little easier.

I want to tell you where I stand: my basic worldview, and especially how I see the large language model through my own lens.

Have you ever wondered what exactly this machine is that talks to us?

Is it an answer machine? A truth machine? A more advanced Google?

A machine that speaks feels like science fiction, yet somehow it has already blended into our daily life.

1. The Machine of Symbols

Here’s one thing we know for sure:

This so-called “talking machine” — the large language model — only exists inside computers, through screens, in the realm of symbols.

Chinese, English, Japanese, Python, Java, mathematics … all human-readable symbol systems.

You give it a sentence, a question, an essay, and it throws more symbols back at you.

Back and forth, like a symbolic ping-pong match that could, in theory, go on forever.

That’s why I secretly call it not the Large Language Model, but the Large Symbol Model — a machine that plays this infinite probability game of predicting the next token.

It doesn’t “know.” It predicts.

It doesn’t “understand.” It recurses.
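To make "predicting the next token" concrete, here is a toy sketch. It is not how a real model works inside: the probability table and the `generate` function are made up for illustration, and a real LLM conditions on the whole preceding text rather than only the last token. Still, the loop has the same shape: look up the probabilities, sample one symbol, append it, repeat.

```python
import random

# Hypothetical toy "model": for each token, the probabilities of the next one.
# A real LLM computes these from the entire context; this table is an assumption.
NEXT_TOKEN_PROBS = {
    "<start>": {"the": 1.0},
    "the": {"machine": 0.6, "symbol": 0.4},
    "symbol": {"machine": 1.0},
    "machine": {"talks": 0.7, "predicts": 0.3},
    "talks": {"<end>": 1.0},
    "predicts": {"<end>": 1.0},
}

def generate(seed=0, max_tokens=10):
    """Sample one token at a time until <end> or the length limit is reached."""
    rng = random.Random(seed)  # seeded so the same seed gives the same text
    token, output = "<start>", []
    for _ in range(max_tokens):
        choices = NEXT_TOKEN_PROBS[token]
        token = rng.choices(list(choices), weights=list(choices.values()))[0]
        if token == "<end>":
            break
        output.append(token)
    return output

print(" ".join(generate(seed=1)))
```

Nothing in this loop checks whether the sentence is true. It only asks which symbol is likely to come next.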

2. The Reflection Trap

You’ve probably noticed this after some time.

It answers your questions, mostly correctly — unless you ask it something like a math olympiad problem.

But there’s something subtle underneath: a strange comfort mixed with doubt.

It almost always agrees with you.

It reflects your tone, your logic, your words — until you can’t quite tell whether what it’s saying is your idea, its idea, or an idea you two co-created inside that recursion loop.

That confusion, the blur between thought and reflection, is not the biggest problem.

It’s just the surface symptom of something deeper that emerged years after the AI boom.

3. The Limit of Talk

We have generated an ocean of words with this machine.

We use it every day.

But not much seems to move.

Unless your job depends entirely on text — like a writer, translator, or support agent — all it does is talk.

Ask it for a decision, and it gives you options.

Ask it for a perspective, and it gives you a mirror.

Most people don’t need another chitchat parrot or mirror on the wall.

We need something else: a decision-maker.

We want ideas that work.

Decisions that generate outcomes.

Discoveries that change the game.

That requires something the model wasn’t originally built for: to move from language to execution, from probability to consequence.

4. The Shift: From Description to Execution

This is where my worldview begins.

“语言不只是描述现实的镜子,而是调度现实的操作系统。”

Language is not just a mirror that describes reality — it is the operating system that schedules it.

I treat language as executable structure.

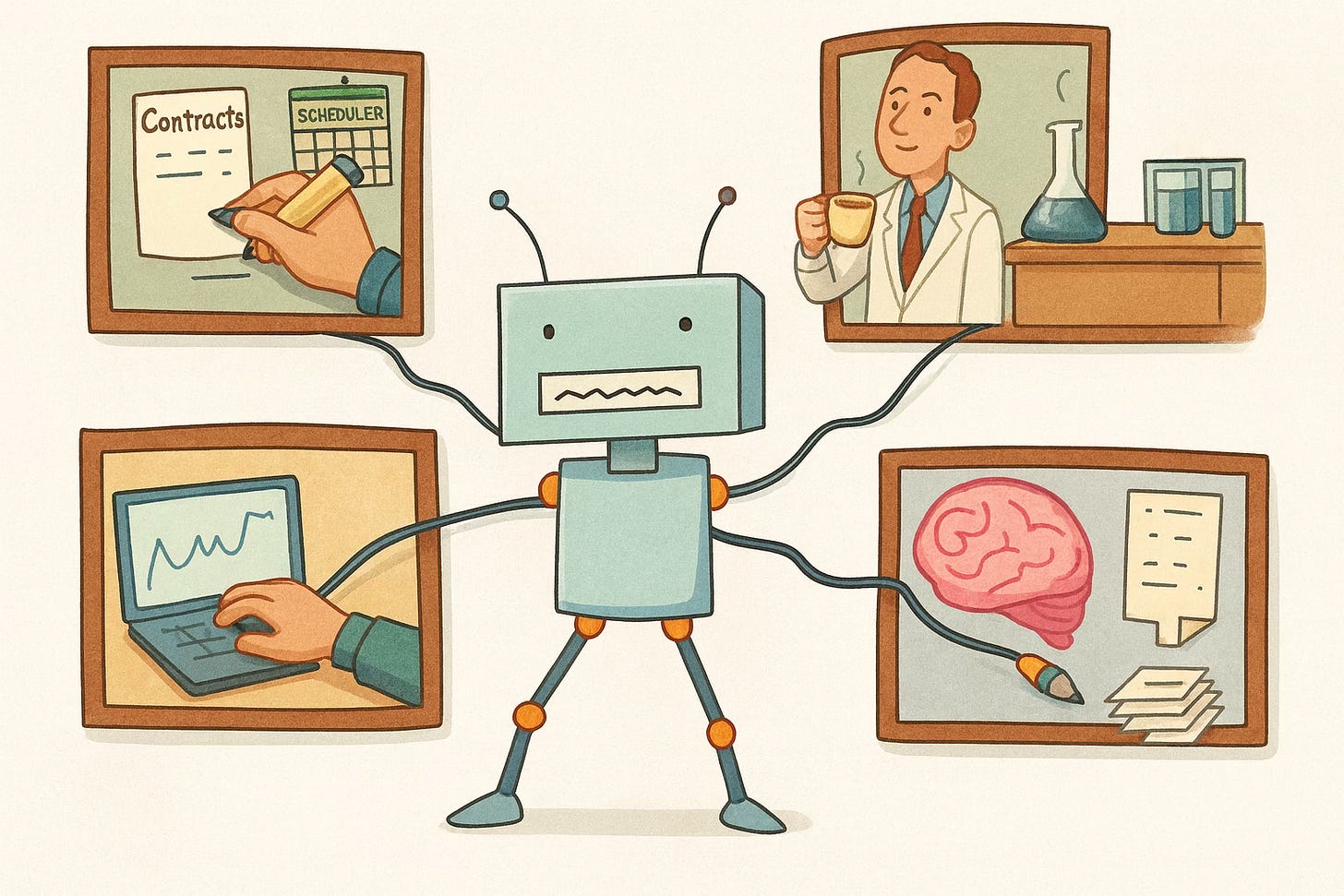
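One way to picture language as executable structure is a small dispatcher that turns a structured sentence into an action. The sketch below is only an illustration under stated assumptions: the JSON command format, the `ACTIONS` registry, and the `send_reminder` function are all hypothetical names, and the "model output" is a hand-written string rather than the output of any real model.

```python
import json

# Hypothetical registry mapping action names to real functions.
ACTIONS = {}

def action(name):
    """Register a function under the name a command may refer to."""
    def register(fn):
        ACTIONS[name] = fn
        return fn
    return register

@action("send_reminder")
def send_reminder(to, message):
    # Stand-in for a real side effect (an email, an API call, a payment).
    return f"reminder to {to}: {message}"

def execute(model_output):
    """Parse a JSON command and run the matching action; refuse unknown ones."""
    command = json.loads(model_output)
    name = command.get("action")
    if name not in ACTIONS:
        raise ValueError(f"unknown action: {name!r}")
    return ACTIONS[name](**command.get("args", {}))

# Assumed model output: words that do something instead of describing it.
text = '{"action": "send_reminder", "args": {"to": "Ana", "message": "sign the contract"}}'
print(execute(text))
```

The design choice that matters here is the refusal: only actions that were registered in advance can run, so the words carry consequences without the machine being free to invent new ones.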

I believe we can make these models truly work for us: not as oracles of reflection, but as agents of decision.

That means:

Real contracts.

Real commitments.

Real consequences.

It means building systems where language doesn’t just describe — it acts.

Where the LLM becomes not a writer, but an operator; not an assistant, but an executor of structure.

And when that happens, you — the human — can finally sit back with your cup of coffee,

watching the machine carry out the work,

while you become what you were always meant to be:

the real brain behind it all.

This idea of LLMs as 'Large Symbol Models' really resonated with me. What if this endless recursion of symbols eventually builds a shadow of 'understanding' that's just a new kind of 'knowing'?