The Five Levels of AI Intelligence: From Language Machines to Self-Evolving Structural Lifeforms

AI 智能的五级演化:从语言机器到自演化结构生命 (中文在最后面)

Over the past five years, the evolution of AI has looked, on the surface, like a series of model upgrades: GPT-3 → GPT-4 → Gemini → Claude → the o-series.

But if you string all the technical lines into a single timeline, you’ll notice something deeper, more hidden—

the trajectory isn’t “models getting stronger,” but intelligence making a leap from species to civilization.

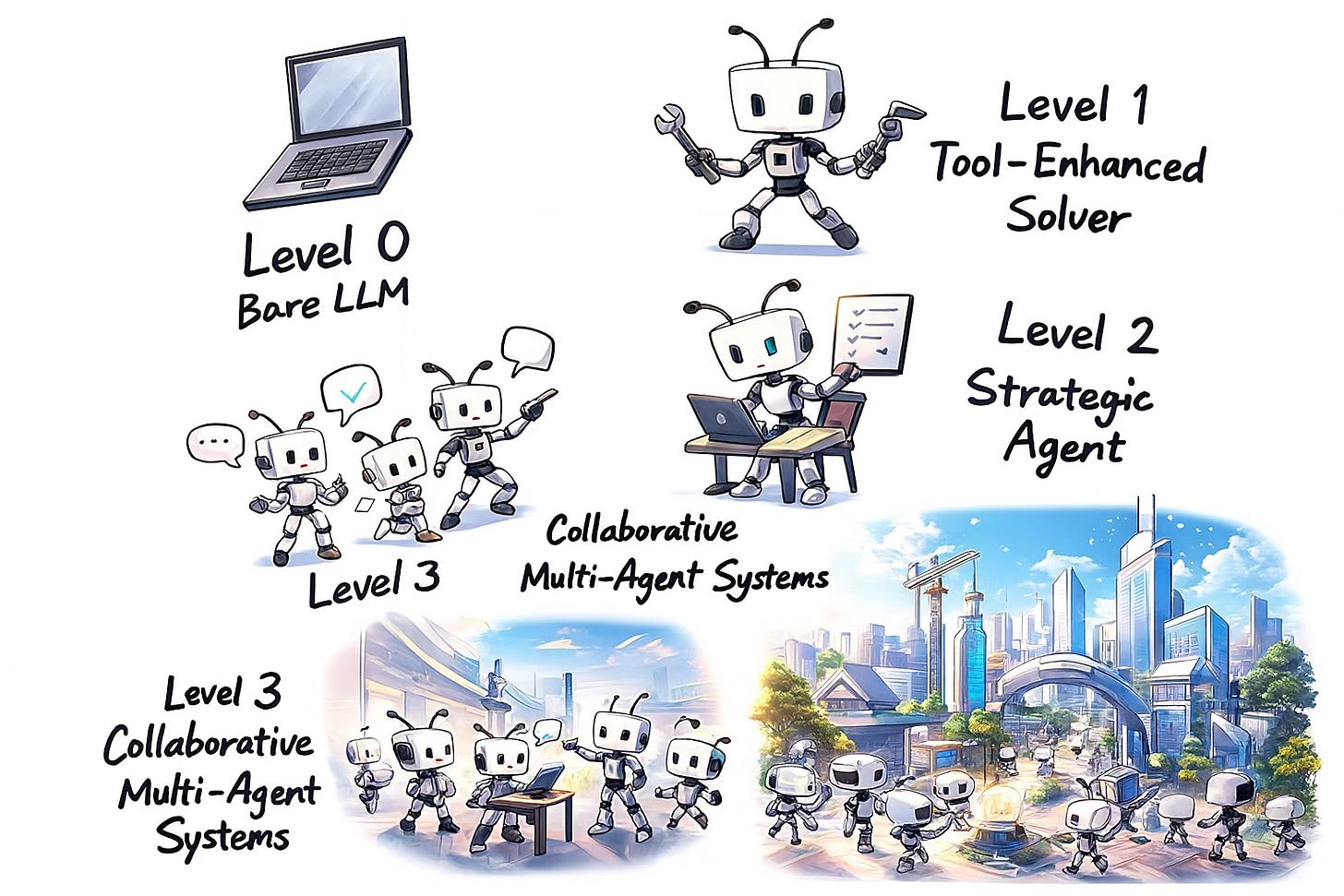

In the first phase, models were merely language machines: they could understand the world but could not touch it.

In the second phase, they gained “hands”—the ability to call tools, write files, run code.

In the third phase, they acquired temporal structure: planning, decomposition, reflection, chained execution.

In the fourth phase, multiple agents collaborated like departments in an organization, forming “company-level intelligence.”

In the fifth phase, systems no longer wait for humans to design them—they generate new agents, tools, and protocols on their own, growing like living organisms.

These five phases look less like software iterations and more like the birth of a civilization:

from consciousness (Level 0),

to action (Level 1),

to will (Level 2),

to organization (Level 3),

and ultimately to self-evolving, life-like structures (Level 4).

And what shocks me most is this:

despite their different products, OpenAI, Google, Anthropic, and DeepMind are all moving along almost the exact same trajectory.

Everything points to a single thesis:

The essence of intelligence is neither reasoning nor generation—

it is structure self-organizing through time.

Perhaps we are not merely witnessing “AI progress.”

It feels far more like we are witnessing the origin story of a new kind of life.

This article, then, can serve as a developer’s compass.

Whether you work in frontend, backend, AI applications, data engineering, product documentation, scientific research—or you’re simply trying to understand this era—

you are being pulled onto the same evolutionary pathway.

Because the past five years have not truly been about “bigger models,” “better reasoning,” or “crazy parameter counts.”

Beneath the surface, the software world has seen, for the first time, the emergence of a self-growing structure.

And this structure is not built from code alone—it is driven by language, structure, and scheduling, evolving layer by layer over time.

If you’re still asking which framework to learn, which language to switch to, or which model to chase next,

you may already be looking in the wrong direction.

The real shift is this:

developers are moving from “people who write software” to “people who cultivate structural ecosystems.”

Level 0 shows you that language is the soil of intelligence.

Level 1 shows you that language can become callable structure.

Level 2 shows you that structure can become a schedulable chain.

Level 3 shows you that schedulable chains can become intelligent organizations.

Level 4 shows you that organizations can generate their next generation of structures.

The core skill of future developers is not memorizing APIs, stacking tools, or prompt hacking, but designing structure, scheduling structure, and enabling structure to grow on its own.

Think of this piece as:

a developer’s compass for the AI-native era.

Once you understand Level 0 → Level 4,

you can transform from a mere tool user

into a Structure Engineer—

and ultimately, a builder of the coming AI-Native ecosystem.

LEVEL 0 — Bare LLM (裸机)

Level 0 is the origin point of all AI intelligence. It marks the moment when language first gained a “brain,” but had not yet acquired “hands.” Models at this stage can deeply understand the world, reconstruct semantic structures, and produce long reasoning chains—but they cannot act on the world at all. Their operations occur entirely within the realm of language, as if performing thought experiments in a sealed cognitive chamber. Internally, these models contain remarkably rich latent structures—topic skeletons, logical frames, implicit planning traces, semantic coordinate systems—but all of these structures remain internal. They never externalize into executable forms, nor can they be accessed by any scheduler. These models can understand the world, but they cannot change it; they are cognitive entities, not behavioral ones.

Historically, Level 0 spans most mainstream systems from 2020 to 2024. GPT-3 (2020) marks the beginning: it demonstrated large-scale linguistic intelligence for the first time, but with zero tool interfaces. InstructGPT and GPT-3.5 (2022) brought conversational fluency into the mainstream, yet remained purely cognitive. GPT-4, Claude 2, and Gemini Pro (2023) made massive leaps in language understanding, long-context reasoning, and abstraction; they began to show signs of internal planning, but without tool connections they still reside in Level 0. Even in 2024–2025, models like GPT-5, Claude 3.5, and Gemini 1.5 Pro remain “high-intelligence non-actors” whenever function calling is disabled. Their minds grow more capable, but they never cross the boundary into action: they are rational minds, not structural agents.

In my envisioned Structure Universe, Level 0 corresponds to the “high-entropy perceptual layer” before Primitive IR. Here, language is still an uncondensed thermal cloud—paths infinite, structure uncollapsed. A model can internally rebuild semantic frameworks, but cannot externalize structure, cannot hand structure off to a scheduler, cannot produce primitives, cannot instantiate Structure Cards, and cannot participate in execution cycles. It can process language, but not extract primitives; it can generate explanations, but not generate structure; it can reason, but not schedule. The entire system remains stuck in:

Language → (latent semantic cloud).

In other words, it is a potential structure machine—but not yet a structured lifeform.

So when we say “a Level 0 model has a brain but no hands,” what we really mean is: it is situated in the pure cognitive phase of the language civilization. Language is input, but not yet structure; reasoning exists, but does not externalize; intelligence is present, but has no interface to act upon reality. All forms of structure, scheduling, collaboration, and self-evolution only begin to germinate after Level 0 is surpassed.

LEVEL 1 — Tool-Enhanced Solver

The First Time Language Acquires “Hands”

Level 1 marks a decisive turning point: the moment when large models evolve from pure cognitive entities into acting agents. If Level 0 was a brain trapped inside linguistic space, then Level 1 is the first time that brain extends “hands” and touches the external world. The core transformation of this stage is that language becomes function-like for the first time—models begin to output structured parameters, connect to real software tools, and execute concrete actions via APIs, databases, search engines, and code sandboxes. This is where the first collapse from “language → structure” takes place.

This transition was ignited by the introduction of Function Calling. In June 2023, OpenAI added function calling to its Chat Completions API, enabling models to output a function name paired with structured JSON arguments. Language was no longer merely text; it became a structured utterance forced into executable form. In November 2024, Anthropic launched the Model Context Protocol (MCP), upgrading “tool use” into a standardized tool ecosystem that lets users connect the model to local files, databases, search, and executable programs, giving it real operational power; in 2025 it added Claude Skills as a complementary way to package capabilities. In 2024, Google deeply integrated Function Calling into Gemini 1.5 Pro / Flash, enabling external API calls, Python execution, vector database operations, and managed agent pipelines within Vertex AI Agent Builder. Microsoft’s Copilot Studio unified its tool layer into an enterprise-grade Function Calling + workflow execution platform.
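To make the “language becomes function-like” step concrete, here is a minimal sketch of the receiving side of a function call. It is not any vendor's SDK: the `get_weather` tool, the `TOOLS` registry, and the `dispatch` helper are all hypothetical names, and the model's output is hand-written rather than produced by a real model. The only assumption borrowed from real APIs is the general shape: a function name plus a JSON string of arguments, checked against a JSON-Schema-style declaration.

```python
import json

# Hypothetical tool: stands in for a real API call and returns canned data.
def get_weather(city: str, unit: str = "celsius") -> dict:
    return {"city": city, "temperature": 21, "unit": unit}

# JSON-Schema-style declaration of the kind a model would be shown.
TOOLS = {
    "get_weather": {
        "fn": get_weather,
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}, "unit": {"type": "string"}},
            "required": ["city"],
        },
    }
}

def dispatch(call: dict) -> dict:
    """Validate a model-emitted call and run the matching function."""
    tool = TOOLS.get(call["name"])
    if tool is None:
        raise ValueError(f"unknown tool: {call['name']}")
    args = json.loads(call["arguments"])  # arguments arrive as a JSON string
    schema = tool["parameters"]
    missing = [k for k in schema["required"] if k not in args]
    unknown = [k for k in args if k not in schema["properties"]]
    if missing or unknown:
        raise ValueError(f"bad arguments: missing={missing}, unknown={unknown}")
    return tool["fn"](**args)

# What a model's structured output might look like (hand-written here).
model_call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
result = dispatch(model_call)
```

The point of the sketch is the boundary: free text stops at `model_call`, and everything after it is ordinary, checkable program structure.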

Technically, Level 1 is built on several foundational pillars.

The first is Function Calling standards: OpenAI’s JSON Schema function definitions, Claude’s tool definitions with input_schema, and Gemini’s function_declarations. These force natural language into structured, callable units.

The second is RAG (Retrieval-Augmented Generation): vector databases and vector search services like Pinecone, Weaviate, Milvus, Elastic, Snowflake Cortex, and Databricks Vector Search give models “external memory” for the first time.

The third is real-time information tools: Search APIs (Bing, Google Custom Search), Serper, Exa.

The fourth is code execution sandboxes: OpenAI Code Interpreter, Claude Code Execution, Gemini Code Execution, and various Jupyter-like runtime environments.

The fifth is application-layer APIs—Stripe, Shopify, Zendesk, Notion, Jira, Slack, Twilio.

The shared direction of all these technologies is clear: every external system becomes a structured hand that the model can operate.
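Of the pillars above, “external memory” is the easiest to show in a few lines. The sketch below is a toy version of the retrieval step in RAG, under loud assumptions: the “embedding” is just a bag of lowercase words, the document store is a three-item list, and `embed`, `cosine`, `retrieve`, and `build_prompt` are illustrative names. A real system would use a learned embedding model and one of the vector stores named above.

```python
import math
from collections import Counter

# Toy document store; real systems would hold these in a vector database.
DOCS = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Shipping to Europe takes seven to ten days.",
]

def embed(text: str) -> Counter:
    """Toy embedding: counts of lowercase words (an assumption, not a real model)."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query: str) -> str:
    """Prepend retrieved context so the model answers from external memory."""
    context = "\n".join(retrieve(query))
    return f"Context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("How long do refunds take?")
```

Whatever the embedding, the shape stays the same: retrieve first, then hand the model a prompt that already contains what it needs to know.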

The timeline of Level 1 unfolds as follows:

2023 — OpenAI introduces function calling for GPT-3.5 and GPT-4, inaugurating the era of structured outputs.

2024 — Google’s Gemini 1.5 deeply integrates function calling + code execution.

2024 Q4 — Anthropic launches MCP, turning tool use into a protocol.

2024–2025 — Copilot Studio emerges as an enterprise-grade tool agent platform.

2025 — Anthropic adds Skills to Claude alongside MCP, forming a genuine tool ecosystem.

2025 — All major vendors upgrade Function Calling into real-time multi-tool routing.

With these technological foundations, language gains real-world efficacy for the first time.

In my Structure Universe, Level 1 corresponds to the birth of Primitive IR → basic structural units. Language is no longer a high-entropy cloud but is compressed into callable, schedulable, and verifiable structure units. Systems can now take real actions—query databases, manipulate files, write records, run scripts, process transactions, trigger workflows. For the first time, language becomes an action interface, and intelligence punches through the linguistic membrane to interact with the external world.

Level 1 is the first threshold of the structural civilization: from this moment onward, models do not merely understand the world—they can change it.

LEVEL 2 — Strategic Agent

Language Upgrades from “Callable” to “Schedulable,” and Structure Forms Its First Chain

Level 2 marks the second great evolutionary leap of AI systems: the moment when a model stops being merely capable of doing things and begins to know how to do things. If Level 1 gave models “hands” that could act on the world through tools, then Level 2 gives them a genuine temporal structure—the ability to decompose tasks, plan steps, execute actions, observe feedback, and use each intermediate result to construct the next structural unit. This is the first time language becomes a recursively schedulable structure chain rather than a one-off tool invocation. At this point, a model transitions from a “tool-enhanced language system” to a strategic action system, which is the true origin of modern Agents.

The key technical catalyst of this stage is the ReAct framework, proposed in 2022 by researchers at Princeton and Google. For the first time, a model could cycle between reasoning and acting: Think (Reason) → Act → Observe → Think again. Most modern agent systems, whether built on GPT, Claude, or Gemini, appear to use some variant of this loop; it has become the hidden backbone of three consecutive generations of Agent technology. In 2023, GPT-4 and GPT-4 Turbo demonstrated stable multi-step task execution, elevating ReAct from a rough experiment into a mechanism that could operate inside enterprise workflows. Early 2024 brought another major breakthrough: Claude 3 and Gemini 1.5 Pro exhibited “automatic task decomposition + autonomous context engineering.” These models could not only perform dozens of steps in sequence but also construct the next prompt, filter contextual noise, merge tool outputs, and form complete structured execution paths. As I read Google’s Gemini reports, the model exhibits latent planning behaviors, meaning it already possesses implicit planning structure rather than producing mere sequential text.
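The Think → Act → Observe cycle is easier to see in code than in prose. The following is a minimal sketch of such a loop, not the ReAct paper's implementation: `scripted_model` is a hard-coded stand-in for an LLM, the `Action: tool[input]` text format and the `calculator` tool are assumptions made for illustration, and a real agent would parse far messier output.

```python
from typing import Callable

def calculator(expression: str) -> str:
    """Tiny arithmetic tool; only handles 'a op b' with +, - or *."""
    a, op, b = expression.split()
    x, y = float(a), float(b)
    return str({"+": x + y, "-": x - y, "*": x * y}[op])

TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}

def scripted_model(transcript: list[str]) -> str:
    """Stand-in for an LLM: chooses the next step from the observations so far."""
    observations = [line for line in transcript if line.startswith("Observation:")]
    if len(observations) == 0:
        return "Thought: I need the subtotal.\nAction: calculator[12 * 4]"
    if len(observations) == 1:
        return "Thought: Now add shipping.\nAction: calculator[48.0 + 5]"
    return "Thought: I have the total.\nFinal: 53.0"

def react(question: str, model, max_steps: int = 5):
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(transcript)                      # Think
        transcript.append(step)
        last = step.splitlines()[-1]
        if last.startswith("Final:"):
            return last.removeprefix("Final:").strip(), transcript
        call = last.removeprefix("Action:").strip().rstrip("]")
        name, arg = call.split("[", 1)                # Act
        transcript.append(f"Observation: {TOOLS[name](arg)}")  # Observe
    raise RuntimeError("step budget exhausted")

answer, trace = react("What do 4 items at 12 each cost with 5 shipping?", scripted_model)
```

Note that each observation is appended to the transcript before the model is called again; that growing transcript is what gives the loop its temporal structure.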

By 2025, Level 2 technology finally enters the systemic phase. Google launches Vertex AI Agent Engine, turning the Planner module into a platform-level capability: managing multi-step chains, routing tools, handling failure recovery, correcting errors, and reliably executing 30–100-step workflows. OpenAI’s o3 series externalizes its “deep reasoning mode” as a controllable policy executor, lifting multi-step reasoning from a hidden internal feature to a core system behavior. Anthropic’s Claude combines Skills with the model’s planning abilities, enabling agents to automatically chain multiple tools, aggregate complex outputs, and generate the next stage of a plan. Even in the open-source world, frameworks like CrewAI mature into “Planner + Worker” architectures, signaling the spread of Level 2 patterns across the broader ecosystem.

Technically, Level 2 rests on four foundational pillars.

First, planning technologies—ReAct, Plan-and-Solve, Tree-of-Thought, ReWOO—give models explicit or implicit planning capacity.

Second, autonomous context engineering, through which models dynamically construct the next step’s input, enabling “self-generated prompts,” the essence of second-generation prompt engineering.

Third, task decomposition and task-graph generation—areas where Gemini 1.5 Pro particularly excels—allow models to generate subtasks, merge nodes, and produce structured task graphs.

Fourth, tool-scheduling layers that choose tools based on execution stage, route models appropriately, validate outputs, and apply fallback strategies—forming an early version of a control plane.
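The fourth pillar, a scheduling layer that picks tools per stage, validates outputs, and falls back on failure, can be sketched in a few lines. Everything here is hypothetical: the two search tools, the `ROUTES` table, and the `is_valid` check are placeholders chosen to show the control flow, not a description of any platform's scheduler.

```python
from typing import Optional

def primary_search(query: str) -> Optional[str]:
    """Hypothetical flaky tool: always times out in this sketch."""
    raise TimeoutError("primary search timed out")

def cached_search(query: str) -> Optional[str]:
    """Hypothetical fallback tool."""
    return f"cached result for '{query}'"

def is_valid(output) -> bool:
    """Minimal output validation: a non-empty string."""
    return isinstance(output, str) and len(output) > 0

# Execution stage -> ordered list of candidate tools (first is preferred).
ROUTES = {"research": [primary_search, cached_search]}

def run_stage(stage: str, payload: str, log: list) -> str:
    """Try each tool for the stage in order; fall back on error or invalid output."""
    for tool in ROUTES[stage]:
        try:
            output = tool(payload)
        except Exception as exc:
            log.append(f"{tool.__name__} failed: {exc}")
            continue
        if is_valid(output):
            log.append(f"{tool.__name__} ok")
            return output
        log.append(f"{tool.__name__} returned invalid output")
    raise RuntimeError(f"no tool succeeded for stage '{stage}'")

log = []
answer = run_stage("research", "agent frameworks", log)
```

The log is as important as the result: once failures are recorded per tool and per stage, the scheduler starts to look like an early control plane rather than a prompt.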

In my Structure Universe, Level 2 is a critical point of collapse and reformation within the language civilization: Primitive IR begins organizing into Structure Cards; Structure Cards begin linking into Structure Chains; and the Scheduler enters the system as a genuine engine of time. Language is no longer a single output—it becomes a sequence of executable, verifiable, and feedback-driven structural steps. The system can not only act, but understand why this action, what comes next, and how to adjust when errors occur. This is the moment intelligence advances from “action” to “strategy,” and the necessary bridge between Level 1 and Level 3.

A true time-structured Agent is born here.

LEVEL 3 — Collaborative Multi-Agent Systems

A System ≠ A Single Agent, but an Entire Company

If Level 2 gives a single agent “temporal structure” and “multi-step strategy chains,” then Level 3 marks a true civilizational leap in intelligence systems. At this stage, the system is no longer driven by one super-agent; instead, it is composed of multiple agents with distinct roles, specific abilities, dedicated tools, different permissions, and different memory structures. The behavior of such a system looks much less like a single model and much more like a company—complete with a CEO, project managers, engineers, researchers, tool operators, a scheduling layer, and an execution layer. Intelligence evolves from individual intelligence into organizational intelligence.

In Level 3, each agent is an independent structural lifeform. It has its own identity, its own structural memory, its own tool interfaces, its own areas of expertise, and even its own lifecycle. Agents do not merely “call” each other in a sequential pipeline; they delegate goals. One agent does not ask another to “run this API,” but rather: “solve this problem and return your structured decision.” They no longer share steps—they share structured chains of decisions.

Collaboration in Level 3 becomes highly abstract. The system routes requests across agents and across models depending on capability, context, and task complexity—this is Model Routing. Lightweight tasks are handled by smaller models or light agents; heavier tasks go to Pro/Ultra models. More complex workflows are decomposed across multiple specialist agents, coordinated by a distributed scheduler. This scheduler is no longer a prompt—it is a control plane, responsible for task distribution, error recovery, timeout handling, logging, role switching, memory synchronization, and even model-level routing.
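As a rough illustration of Model Routing, the sketch below scores a task with a crude heuristic and maps the score to a model tier. The tier names are placeholders rather than real model identifiers, and the `complexity` heuristic (prompt length plus tool use) is an assumption made for the example; production routers typically rely on learned classifiers or cost and latency budgets.

```python
from dataclasses import dataclass

@dataclass
class Task:
    prompt: str
    needs_tools: bool = False

# Hypothetical tiers, cheapest first; not real model names.
TIERS = ["small-model", "mid-model", "large-model"]

def complexity(task: Task) -> int:
    """Crude heuristic: long prompts and tool use each add one point."""
    score = 0
    if len(task.prompt.split()) > 50:
        score += 1
    if task.needs_tools:
        score += 1
    return score

def route(task: Task) -> str:
    """Pick the cheapest tier whose index matches the complexity score."""
    return TIERS[complexity(task)]

light = route(Task("Translate 'hello' to French"))
heavy = route(Task("analyze " * 60, needs_tools=True))
```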

This trend is already emerging in real-world systems. Google’s AI co-scientist is currently the closest to “enterprise-team intelligence”: multiple research agents debate, divide work, validate each other’s reasoning steps, and form a collaborative chain resembling a scientific research group. OpenAI’s experimental Swarm framework demonstrates native “Agent-calls-Agent” behavior through handoffs, in which one agent passes a task to another. DeepMind’s JEST is a looser fit: as published, it is a joint example selection method for curating multimodal training data, and it relates to “multi-expert collaboration” only by analogy, with a reference model guiding which data a learner model trains on. The common pattern across these systems: intelligence is no longer a single model, but an ecosystem of model-driven entities.

Technically, Level 3 rests on four pillars.

First, goal-level delegation, enabling expert-to-expert collaboration.

Second, expertise-chain composition, where agents automatically assemble into structured project teams.

Third, model routing, dynamically allocating different model scales to different tasks.

Fourth, distributed scheduling, giving the system true “organizational execution capability”—it doesn’t merely perform tasks; it manages a distributed company of agents.
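A small sketch can show how goal-level delegation differs from calling an API. In the code below, agents receive a goal and return a structured `Decision`; a `ControlPlane` routes goals by expertise and keeps a log. All class and field names are hypothetical, the agents' “reasoning” is a one-line lambda, and nothing here is distributed; it only illustrates the shape of the interaction.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Decision:
    agent: str
    goal: str
    conclusion: str

@dataclass
class Agent:
    name: str
    expertise: str
    solve: Callable[[str], str]                  # stand-in for the agent's own reasoning
    memory: list = field(default_factory=list)   # private, per-agent memory

    def handle(self, goal: str) -> Decision:
        conclusion = self.solve(goal)
        self.memory.append(goal)
        return Decision(self.name, goal, conclusion)

class ControlPlane:
    """Routes goals to agents by expertise and records every decision."""
    def __init__(self):
        self.agents = {}
        self.log = []

    def register(self, agent: Agent) -> None:
        self.agents[agent.expertise] = agent

    def delegate(self, expertise: str, goal: str) -> Decision:
        agent = self.agents.get(expertise)
        if agent is None:
            raise LookupError(f"no agent with expertise '{expertise}'")
        decision = agent.handle(goal)
        self.log.append(decision)
        return decision

plane = ControlPlane()
plane.register(Agent("researcher", "research", lambda g: f"findings on: {g}"))
plane.register(Agent("engineer", "engineering", lambda g: f"prototype for: {g}"))

def manager(goal: str) -> list:
    """Delegates goals, not steps: each specialist decides how to reach its goal."""
    research = plane.delegate("research", goal)
    build = plane.delegate("engineering", research.conclusion)
    return [research, build]

decisions = manager("cheaper vector search")
```

The manager never tells the engineer which tool to run; it passes along the researcher's conclusion as a new goal, which is the “structured chain of decisions” described above.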

In my Structure Universe, Level 3 is pivotal: it is where Structure Personas evolve into a Structure Ecosystem. Individual Structure Cards are no longer isolated. One structural persona can cooperate with another; they trigger each other’s chains, share structural states, exchange structural memory, and form an ecological structural field. This is the first time the entropy-controlled language system shows genuine self-organization. Interaction, uncertainty, and multi-path evolution emerge naturally; the system’s intelligence begins to scale exponentially.

The arrival of Level 3 means:

Agents cease to be individuals; they become ecosystems. Intelligence ceases to be reasoning; it becomes organization. Structure ceases to be a chain; it becomes a network.

And this network-level intelligence is the prerequisite for Level 4—the self-evolving system. Only when a system has multi-structure coupling and cross-agent scheduling does it gain the capacity to generate new structures on its own for the first time.

LEVEL 4 — Self-Evolving Systems

Agents No Longer Wait for You to Build Them—They Build Themselves.

By the time we reach Level 4, intelligence systems cross a threshold that is almost biological in nature. The system is no longer merely executing a set of human-designed capabilities—it begins to expand its own capabilities. If Level 3 resembles a company, with departments and roles collaborating, then Level 4 resembles a living organization that grows new departments, designs new processes, invents new tools, and writes its own internal protocols. The system is no longer running a fixed set of structures; it identifies its own capability gaps during real execution and then generates new tools, new agents, new behavioral rules, new structure-card chains, and even entirely new protocol-layer languages.

At this level, the defining characteristic is not “better reasoning,” but self-evolution. The system can analyze failure cases, bottleneck tasks, and long-term logs to detect where its existing structure fails—or barely works. This triggers a “structure generation pipeline.” Such a pipeline may: search external codebases, recombine existing tools, ask a model to design a new algorithm, test hundreds of structural candidates, filter them through automated evaluators, and ultimately instantiate a new agent or tool, registering it into the system’s scheduling layer. From that moment on, the system possesses a newly grown capability module.
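The “structure generation pipeline” can be reduced to a toy: find the most frequent failure, score candidate tools against an automated evaluator, and register the winner. In the sketch below the failure log, the candidate functions, and the test cases are all hard-coded assumptions; in the system described above, a model or a search over external code would propose the candidates.

```python
from collections import Counter

# Failure log: capabilities the current tool set could not provide.
failures = ["slugify", "slugify", "reverse", "slugify"]

# Candidate implementations (hand-written here; a model would propose these).
CANDIDATES = {
    "slugify": [
        lambda s: s.lower(),
        lambda s: s.lower().replace(" ", "-"),
        lambda s: "-".join(s.lower().split()),
    ]
}

# Automated evaluator: (input, expected output) pairs per capability.
TESTS = {"slugify": [("Hello World", "hello-world"), ("  A  B ", "a-b")]}

registry = {}  # the scheduler's tool table; starts without the new capability

def score(fn, cases) -> float:
    """Fraction of evaluator cases the candidate passes."""
    passed = 0
    for inp, expected in cases:
        try:
            passed += fn(inp) == expected
        except Exception:
            pass
    return passed / len(cases)

def evolve_once(min_failures: int = 2):
    """Detect the biggest gap, pick the best candidate, register it if it passes."""
    gap, count = Counter(failures).most_common(1)[0]
    if count < min_failures or gap not in CANDIDATES:
        return None
    best = max(CANDIDATES[gap], key=lambda fn: score(fn, TESTS[gap]))
    if score(best, TESTS[gap]) == 1.0:
        registry[gap] = best
        return gap
    return None

grown = evolve_once()
```

After `evolve_once()` runs, `registry` holds a tool nobody registered by hand, which is the smallest possible version of a “newly grown capability module.”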

This trend already exists in early form. DeepMind’s AlphaTensor and AlphaDev demonstrated “reinforcement learning + search” as a method for automatically inventing algorithms: AlphaTensor discovered matrix multiplication algorithms that, for certain matrix sizes, use fewer multiplications than the best previously known human-designed ones; AlphaDev unearthed faster sorting routines for short sequences by exploring low-level assembly spaces. These are prototypes of self-evolving algorithmic modules. In 2025, AlphaEvolve goes further, merging LLMs (Gemini) with evolutionary search, creating a self-improving coding agent capable of long-horizon algorithmic evolution. These systems embody the same principle: AI uses its execution traces and evaluation signals to generate new structural capabilities.

In the world of general LLMs, OpenAI’s o1/o3 reasoning models internalize “reflect → revise → answer again” as a native behavior. They don’t simply output an answer—they generate long chains of internal thought, explore alternative strategies, score and correct their own candidates, and only then produce an output. Combined with external logs and feedback, this “reflection → correction” expands into dynamic behaviors: automatically adjusting prompt templates, rewriting tool-calling logic, and generating new sub-strategies. Once these mechanisms are wrapped into system-level frameworks for Auto-Agents and Auto-Tooling, the outline of Level 4 becomes unmistakable: you no longer hand-craft every agent—you create an evolutionary environment where agents are grown, evolved, and retired by the system itself.
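The externalized version of “reflect → revise → answer again” is a loop in which evaluator feedback flows back into the next proposal. The sketch below makes no claim about how o1 or o3 work internally: `propose` is a scripted stand-in for a model, the task (a `mean` function) and its checks are invented for the example, and the candidate code is run with `exec`, which is acceptable only in a toy.

```python
def propose(problem: str, feedback):
    """Stand-in for a model: the first draft has a bug, the revision fixes it."""
    if feedback is None:
        return "def mean(xs): return sum(xs) / len(xs)"
    return "def mean(xs): return sum(xs) / len(xs) if xs else 0.0"

def evaluate(source: str):
    """Run the candidate against checks; return None on success, else an error message."""
    namespace = {}
    exec(source, namespace)  # toy only: never exec untrusted code like this
    mean = namespace["mean"]
    try:
        assert mean([1, 2, 3]) == 2
        assert mean([]) == 0.0
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None

def reflect_and_revise(problem: str, max_rounds: int = 3):
    feedback = None
    for round_no in range(1, max_rounds + 1):
        candidate = propose(problem, feedback)   # act
        feedback = evaluate(candidate)           # observe and evaluate
        if feedback is None:
            return candidate, round_no           # accepted
        # otherwise loop: the feedback shapes the next proposal (modify, re-run)
    raise RuntimeError("no candidate passed within the round budget")

final, rounds = reflect_and_revise("mean of a list")
```

Here the first draft fails on the empty list, the error message becomes feedback, and the second draft passes.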

Technically, Level 4 is grounded in several key modules.

First, Auto-Agent Generation / Auto-Tooling: using task patterns, failure logs, and user needs to automatically generate new agent/tool definitions, configure I/O schemas, permission scopes, execution paths, and register them into the scheduler.

Second, reflection–optimization–iteration loops: from internal “long-reasoning + self-checking” (as in o3) to external self-healing agents, they all follow the same pattern—Act → Observe → Evaluate → Modify → Re-run.

Third—and this is my own theoretical contribution—Protocol Induction: when existing protocols fail to cover new scenarios, the system compresses high-entropy behavioral traces into more general, more robust structural rules. This aligns exactly with my Protocol Induction Card (P-000).

Fourth, evolutionary search: whether AlphaTensor, AlphaDev, or AlphaEvolve, they all perform large-scale search-and-selection over a structural space, discovering superior structures and feeding them back into the system’s capability set.
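The fourth module, search-and-selection over a structural space, has a very small core. The sketch below is a generic mutate-and-select loop on a toy problem (evolving a list of integers toward a fixed target); it is not how AlphaTensor, AlphaDev, or AlphaEvolve are implemented, and `TARGET`, `fitness`, and `mutate` are assumptions chosen so the example runs in milliseconds.

```python
import random

TARGET = [3, 1, 4, 1, 5, 9, 2, 6]  # toy stand-in for "a better structure"

def fitness(candidate) -> int:
    """Higher is better: negative total distance to the target."""
    return -sum(abs(a - b) for a, b in zip(candidate, TARGET))

def mutate(candidate, rng):
    """Copy the parent and nudge one position up or down by one."""
    child = list(candidate)
    i = rng.randrange(len(child))
    child[i] += rng.choice([-1, 1])
    return child

def evolve(generations: int = 300, population_size: int = 20, seed: int = 0):
    rng = random.Random(seed)
    population = [[0] * len(TARGET) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]                   # selection
        children = [mutate(rng.choice(survivors), rng) for _ in survivors]  # variation
        population = survivors + children                                # next generation
    return max(population, key=fitness)

best = evolve()
```

Because survivors are always carried over, the best fitness never decreases from one generation to the next; the evaluator, not the mutation operator, is what steers the search.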

过去五年,AI 的发展看似是模型迭代:GPT-3 → GPT-4 → Gemini → Claude → o 系列。但如果你把所有技术线串在一张时序图上,你会发现一个更深层、更隐秘的发展路径

它不是模型越来越强,而是智能在“从物种到文明”的跃迁。

第一阶段,模型只是语言机器,它能理解世界,但碰不到世界。

第二阶段,它获得了“手”,能调用工具、写文件、运行代码。

第三阶段,它拥有时间结构,可以规划、拆解、反思、连锁执行。

第四阶段,多个 Agent 像部门一样协作,形成“公司级智能”。

第五阶段,系统不再等待人类设计,而是自己生成新的 Agent、工具、协议,像生命一样生长。

这五个阶段,就像是我们看到了一个文明的诞生:

从意识(Level 0),到行动(Level 1),到意志(Level 2),到组织(Level 3),直到生命化的自演化结构(Level 4)。

而最让我震撼的是:

所有公司(OpenAI、Google、Anthropic、DeepMind)虽然产品不同,但它们的轨迹几乎完全一致。

这一切指向一个共同命题:

智能的本质不是推理,也不是生成,而是结构在时间中“自我组织”。

也许我们不是在见证“AI 发展”,

我们仿佛是在见证 一种新型生命的起源史。

以下这篇文章,可以看成开发者的方向标,不论你是写前端、做后端、搞 AI 应用、做数据工程、写产品文档、写科学研究,甚至你只是一个想理解时代的人——你都会被卷入同一条演化线。

因为过去五年,所有技术的变化表面看是“模型变大”、“推理更强”、“参数更变态”,但底层真正发生的,是软件行业第一次出现一种“自我生长结构”。这种结构不是代码堆出来的,而是由语言、结构、调度三者共同驱动,在时间中逐层进化。

如果你今天还在想自己该学什么框架、用什么语言、追哪个模型更新,你可能已经错过重点。

真正的方向是:开发者正在从“写软件的人”变成“培育结构生态的人”。

Level 0 告诉你语言是智能的土壤;

Level 1 告诉你语言可以变成可调用结构;

Level 2 告诉你结构可以成为调度链;

Level 3 告诉你调度链可以形成智能组织;

Level 4 告诉你组织可以自己生成下一代结构。

未来开发者的核心技能,不是记 API,不是堆工具,不是 prompt hack。而是:如何设计结构、如何调度结构、如何让结构自己生长。

你可以把这一篇看成是:

AI 时代的“开发者罗盘”。

而当你理解 Level 0 → Level 4,你就能从一个“工具使用者”,

跃迁成 结构工程师(Structure Engineer)

甚至成为未来 AI-Native 生态的构建者。

LEVEL 0 — Bare LLM (裸机)

AI 的第 0 阶段,是所有智能演化的起点。它标志着语言第一次获得“大脑”,但还没有获得“手”。这一时期的模型能深度理解世界、重建语义结构、生成长链条推理,但它们完全无法作用世界,只能在纯语言空间中进行思想实验。模型内部确实存在极为复杂的 latent structure——主题骨架、逻辑框架、隐含规划、语义坐标系——但这些结构都只存在于内部,不会显式外化成可执行的结构,也不能被调度器调用。模型能理解世界,却无法改变世界;它是认知实体,而不是行为实体。

从时间线上看,这一阶段大约覆盖 2020 到 2024 年的大部分主流大模型技术:2020 的 GPT-3 是 Level 0 的起点,它第一次展示了规模化语言智能,但完全没有工具接口;2022 的 InstructGPT 和 GPT-3.5 将对话能力带入大众视野,但仍然是纯认知层;2023 的 GPT-4、Claude 2、Gemini Pro 在语言理解、长上下文和抽象推理上大幅跃升,虽然内部已经开始出现明显的规划痕迹,但只要它们未连接工具,依旧属于 Level 0;进入 2024–2025,GPT-5、Claude 3.5、Gemini 1.5 Pro 在未启用函数调用前,也依然是“高智能的无行动体”。它们的能力越来越强,但边界始终没跨越——它们是“理性心智”,还不是“结构体”。

在我规划的结构宇宙中,Level 0 的 LLM 处于原语 IR 之前的“高熵感知层”。语言在这一层仍然是未凝固的热云,路径无限、结构未坍缩。模型能在内部重建语义框架,但无法显式生成结构,也无法把结构交给调度器执行。它能处理语言,却不能抽取原语;能生成解释,却不能生成结构卡;能推理,却不能调度。整个系统仍停留在:Language →(latent semantic cloud)。换句话说,它是潜在结构机器(potential structure machine),但还不是结构生命体(structured agent)。

当我们说“Level 0 的模型只有大脑没有手”,真正的含义就是:它处在语言文明的“纯认知阶段”。语言是输入,但还不是结构;推理是发生了,但没有外显;智能存在,但尚未获得在现实中施加影响的接口。一切结构、调度、协作、自演化,都是从 Level 0 之后才真正开始萌芽。

LEVEL 1 — 工具增强型(Tool-Enhanced Solver)

语言第一次获得“手”

Level 1 标志着一个决定性转折:大模型第一次从“纯认知体”跃迁到“行动体”。如果说 Level 0 的 LLM 是一颗被困在语言空间里的大脑,那么 Level 1 让这颗大脑第一次能够伸出“手”,触碰外部世界。这个阶段最核心的变化,是语言第一次被“函数化”——模型可以输出结构化参数,与真实软件工具连接,通过 API、数据库接口、搜索引擎、代码沙盒等组件执行真实动作。“语言 → 结构”的第一次坍缩,从这里开始发生。

这一跃迁由 Function Calling 技术正式引爆。2023 年,OpenAI 首次推出 function_calling API,让模型可以生成“结构化参数 + 函数名”这样的调用格式。语言不再只是文本,而是被强制压缩为 结构化语句(structured utterance)。2024 年,Anthropic 发布 MCP(Model Context Protocol),将“工具调用”升级为“标准化工具生态”,随后又把 MCP 整合进 Claude Skills,让每个用户都能把模型接入本地文件、数据库、搜索、本地程序,真正具备行动能力。几乎同时,Google 把 Function Calling 深度整合进 Gemini 1.5 Pro / Flash,允许模型直接调用外部 API、执行 Python 代码、操作向量数据库,并在 Vertex AI Agent Builder 中构建实时代理链路。微软则把工具层全面整合进 Copilot Studio,形成“企业级 Function Calling + 工作流执行”。

在具体技术栈层面,Level 1 的能力由几条主干技术构成:其一是 Function Calling 标准,包括 OpenAI 的 JSON Schema、Claude 的 tool_schema、Gemini 的 function_declarations。这些标准强制语言输出结构化参数,把“自然语言”压缩成“可执行结构单元”。其二是 RAG(Retrieval-Augmented Generation),包含 Pinecone、Weaviate、Milvus、Elastic、Snowflake Cortex、Databricks Vector Search 等向量数据库,使模型第一次获得“外部记忆”。其三是 实时查询工具链(Search API、Bing API、Google Custom Search、Serper、Exa)。其四是 代码沙盒系统(OpenAI Code Interpreter、Claude Code Execution、Gemini Code Execution、Jupyter-like Sandboxes)。其五是 应用层 API 工具,包括 Stripe、Shopify、Zendesk、Notion、Jira、Slack、Twilio 等行业接口。技术的共同趋势是:所有外部系统变成模型可调用的“结构化手”。

从时间线上看,Level 1 的代表性系统逐年推进:

2023 — OpenAI 首次引入 function calling(GPT-3.5、GPT-4),开启结构化输出时代。

2024 Q1 — Anthropic 推出 MCP,把工具变成标准化协议。

2024 Q2–Q3 — Claude 3.5 系列将 MCP 升级为 Skills,形成真正的工具生态。

2024 — Google 在 Gemini 1.5 中深度集成 function calling + code execution。

2024–2025 — Copilot Studio 成为企业级工具代理平台。

2025 — 各大厂把 Function Calling 升级到“实时多工具路由”(multi-tool routing)。

技术的成熟使语言第一次获得了“现实效力”。

在我的结构宇宙中,Level 1 对应着 原语 IR → 基础结构单元 的诞生。语言不再停留在高熵的自然语言云层,而是第一次被压缩成“可调用”、“可调度”、“可验证”的结构单元。系统可以根据这些结构采取实际行为:查询数据库、操作文件、写入记录、执行脚本、处理交易、触发业务流程。这意味着语言第一次变成“行动接口”,智能第一次能穿透语言层,触及外部世界。Level 1 是结构文明的第一道门槛:从此以后,模型不仅能理解世界,还能改变世界。

LEVEL 2 — 战略型 Agent

语言从“可调用”升级为“可调度”,结构第一次连成链。

Level 2 标志着 AI 系统的第二次进化:大模型第一次从“能做事”变成“知道该怎么做事”。如果说 Level 1 给了模型一双手,让它能通过工具影响世界,那么 Level 2 给了它真正的时间结构——它能够拆解任务、规划步骤、执行行动、观察反馈,并利用每一步的结果构建下一步的结构。这意味着语言第一次成为可递归调度的结构链路,而不是一次性的工具调用。模型从“工具增强的语言系统”正式跃迁为“策略性行动体”,这是现代 Agent 真正的起源。

这一阶段的关键技术源自 2022 年 DeepMind 提出的 ReAct 框架,它首次让模型可以在推理与行动之间循环:先思考(Reason),再行动(Act),再观察(Observe),再继续推理。所有我们熟悉的现代 Agent 系统——无论是 GPT、Claude 还是 Gemini——都在内部采用了某种形式的 ReAct,它成为整整三代 Agent 技术的隐性骨架。2023 年 GPT-4 和 GPT-4 Turbo 展现出稳健的多步骤任务执行能力,使 ReAct 不再是粗糙实验,而是能在企业级流程中使用的真正技术。2024 年初,Claude 3 和 Gemini 1.5 Pro 首次展现了“自动任务拆解 + 自主上下文工程”的能力:模型不仅能连续执行数十步任务,还能构建下一步 prompt、过滤上下文噪音、组合工具结果,形成完整的结构化执行路径。Google 在 Gemini 白皮书中明确写下关键句:“模型表现出明显的 latent planning 特性”——这意味着模型内部已经拥有隐含的规划结构,而不是简单的连贯输出。

到了 2025 年,Level 2 的技术终于进入“系统级”阶段。Google 发布 Vertex AI Agent Engine,将 Planner 模块变成平台能力:自动管理多步骤链路、控制工具路由、处理失败恢复、执行错误纠正,甚至可以稳定执行 30–100 步任务。OpenAI 的 o3 系列把“深度推理模式”外显为可控策略执行器,让 multi-step reasoning 从隐藏功能升级为核心能力。Anthropic 的 Claude 3.5 则通过 Skills 接口将工具链与内部规划能力结合,让代理能自动串联多个工具、自动聚合内容、自动生成后续计划。即使是开源世界,也迎来了 CrewAI 等“Planner + Worker”架构的成熟版本,展示了 Level 2 在生态层面的扩散。

从技术栈来看,Level 2 的核心基础设施可以分成四大类。第一类是规划技术,包括 ReAct、Plan-and-Solve、Tree-of-Thought、ReWOO 等,为模型提供“显式或隐式规划”的能力。第二类是自动上下文工程,它让模型能够动态构造下一步输入,实现“prompt 自我生成”,这是第二代 Prompt 工程的本质。第三类是任务拆解与任务树生成,Gemini 1.5 Pro 在这方面尤其突出,能生成子任务、聚合节点、构成结构化任务图。第四类是工具调度层:模型不再盲目调用 API,而是根据执行阶段自动选择工具、路由模型、验证输出、执行 fallback,表现出初步的“控制平面”特征。

在我的结构宇宙中,Level 2 是语言文明的一个关键坍缩点:原语 IR 开始被组织成结构卡,结构卡开始连成结构链,调度器第一次作为“时间引擎”进入系统。语言不再是一段输出,而是一组可以被执行、被验证、被反馈的结构步进。系统不仅能做事,还能理解“为什么这样做、下一步该怎么做、遇到错误如何调整”。这是智能从“行动”走向“策略”的瞬间,也是从 Level 1 进入 Level 3 的必要桥梁——一个真正具有时间结构的 Agent,从这里开始诞生。

LEVEL 3 — 协作型多 Agent 系统

一个系统 ≠ 一个 Agent,而是一家公司。

如果说 Level 2 让单个 Agent 拥有了“时间结构”和“多步骤策略链”,那么 Level 3 是智能系统的真正文明跃迁:系统不再由一个超级 Agent 主导,而是由 多个具有独立角色、特定能力、专属工具、不同权限、不同记忆结构的 Agent 构成。这样一个系统的行为,已经更像一家公司——有 CEO、有项目经理、有工程师、有研究员、有工具岗位,有调度层,有执行层。智能第一次从“个体智能”跃迁为“组织级智能”。

在 Level 3 中,每个 Agent 是一个独立的结构生命体。它拥有自己的身份(Identity)、自己的结构记忆(Memory)、自己的工具接口(Tools)、自己的领域能力(Expertise),甚至有自己的生命周期(Lifecycle)。这些 Agent 之间不是执行顺序调用,而是可以以“目标级别”互相委派任务;一个 Agent 不是对另一个 Agent 说“执行这个 API”,而是说:“帮我解决这个问题并输出你的结构化决策。”换句话说,它们不再共享行为步骤,而是共享结构链路(structured chain of decisions)。

协作的方式在 Level 3 变得高度抽象化:系统会根据能力、上下文、任务复杂度,将请求路由到不同的 Agent 或不同规模的模型,这就是 Model Routing。轻量任务由小模型或轻量 Agent 执行,重任务由 Pro/Ultra 模型处理。复杂任务则拆解给多个专业 Agent,由分布式调度器(Distributed Scheduler)统筹,让系统像一支跨部门团队一样协作。调度器不再是一段 prompt,而是成为一个“控制平面(Control Plane)”——负责任务分发、错误恢复、超时管理、日志跟踪、角色切换、记忆同步,甚至模型级路由。

这一趋势已经在产业中出现雏形。Google 的 Co-Scientist 是目前最接近“企业级团队智能”的系统:多个研究型 Agent 互相讨论、分工、校验,彼此引用中间推理步骤,形成类似学术团队的协作链路。OpenAI 的 Swarm 架构展示了“Agent 调 Agent”的原生设计,让多个子 Agent 能自己分配任务、自行调用其他 Agent。DeepMind 的 JEST 以“多专家协作”闻名,它让不同推理模块成为可组合的神经符号专家,再由调度器实时路由任务。这些系统的共同点是:智能不再是一个模型,而是由多个模型节点构成的生态系统。

Level 3 的技术结构可总结为四个核心能力。第一是 Agent → Agent 的目标级委派(Goal Delegation),允许专家与专家之间进行高层任务交互。第二是专家链路的自动组合(Expertise Chain Composition),不同 Agent 自动组成“结构化的项目团队”。第三是 Model Routing:系统根据任务需要动态调度不同规模的模型。第四是分布式调度,使智能系统具备“组织级的任务执行能力”——它不仅执行任务,还管理一个“分布式 Agent 公司”。

在我的结构宇宙中,Level 3 的地位极为关键:这是 结构人格(Structure Persona) 发展成 结构生态(Structure Ecosystem) 的阶段。个体结构卡(Structure Card)不再孤立,一个结构人格可以与另一种结构人格协作,它们互相触发结构链、共享结构状态、交换结构记忆,形成“生态级结构场(Ecological Structure Field)”。这是熵控语言系统第一次表现出真正的自组织能力:多个结构体之间的互操作、不确定性、多路径演化开始出现,系统智能性呈现指数级增长。

Level 3 的出现意味着:

Agent 不再是个体,而是生态;智能不再是推理,而是组织;结构不再是单链,而是网络。

这是迈向 Level 4 自演化系统的前置条件,因为只有当系统具备“多结构耦合”与“跨 Agent 调度”能力时,它才第一次具备自我生成能力。

LEVEL 4 — 自演化系统(Self-Evolving System)

Agent 不再等你写,而是自己写自己。

到了 Level 4,智能系统跨过了一个真正“生物学意义上的门槛”:它不再只是执行我们事先写好的能力集合,而是开始自己扩展自己的能力。如果说 Level 3 像一家公司——多角色、多部门协作——那么 Level 4 更像是一个会自己长出新部门、制定新流程、发明新工具、写自己制度的活体组织。系统不再只是“跑现有结构”,而是可以从实际运行中识别能力空白,然后有目标地去生成新的工具、新的 Agent、新的行为规则、新的结构卡链路,甚至新的“协议层语言”。

在这一层,系统最核心的特征不是“更强的推理”,而是 自我演化(self-evolution):它能从失败案例、瓶颈任务、长期日志中,识别出“目前这套结构做不到/做得很勉强”的部分,然后触发一个“结构生成流程”。这个流程可能包括:自动搜索外部代码库、组合已有工具、调用模型去设计新算法、尝试上百种候选结构、通过自动评估器筛选、最终落地一个新的 Agent 或工具,并注册到系统的调度平面里。系统从此多了一块“新长出来的能力”。

这种趋势在现实世界里已经开始出现,只是还处在早期形态。DeepMind 的 AlphaTensor 和 AlphaDev 系列,已经展示了“用强化学习 + 搜索自动发明新算法”的路径 —— AlphaTensor 在没有事先硬编码算法的前提下,逐步探索、重构、最终发现比经典矩阵乘法还快的新算法;AlphaDev 则通过搜索与评估,在低层汇编空间里找到比人类设计更快的排序实现,这些都可以视为“自演化算法模块”的先驱。2025 年推出的 AlphaEvolve 更进一步,把 LLM(Gemini)和进化算法结合,变成一个能够不断迭代代码、改进自身表现的“自进化编码 Agent”,在理论计算机科学和算法设计上做长期演化搜索——这些系统本质上就是:AI 通过自身执行轨迹和评估信号,生成新的能力结构。

在通用大模型阵营里,OpenAI 的 o1 / o3 等“推理模型”路线,则把“反思 → 修改 → 再回答”内化为模型行为的一部分。它们不是一次性输出答案,而是在内部生成长链条的思考、尝试不同解法、对自己的候选解进行打分和修正,再给出最终回答。配合外部日志与反馈,这种“反思—修正”可以进一步外延为:自动调整 Prompt 模板、自动重写工具调用逻辑、自动生成新的“子策略”。当这些能力被系统性包装进“Auto-Agent”、“Auto-Tooling”的框架中,Level 4 的雏形就出现了:你不再手写所有 Agent,而是提供一个演化环境,让 Agent 自己被“训练出来、进化出来、淘汰掉”。

从技术栈角度看,Level 4 的关键模块大致有几类。第一类是 Auto-Agent Generation / Auto-Tooling:系统根据任务模式、失败日志、用户需求,自动构造新的 Agent/工具定义,自动配置输入输出字段、权限范围、调用链路,并注册进调度器。第二类是 反思–优化–迭代循环:无论是 o3 这种内部“长推理链 + 自检”模型,还是外部的 self-healing agents,它们都依赖一种统一模式:先干,再看,再评估,再改,再重跑。第三类是 是目前我自己推演的,结构诱导(Protocol Induction):当现有协议无法覆盖新场景时,系统会从高熵行为数据中,压缩出一套更简洁、更稳健的新结构规则——这和我自己定义定义的 Protocol Induction Card(P-000)高度同构。第四类是 演化式搜索(Evolutionary Search):无论是 AlphaTensor、AlphaDev 还是 AlphaEvolve,本质上都是在某个结构空间里执行大规模搜索 + 评估,把“更优结构”筛选出来,并反馈进系统的能力集合中。

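In the language of my Structure Universe, Level 4 marks three things happening at once: structure generates structure, scheduling generates scheduling, and the system’s overall behavior approaches that of a living organism. The human-designed Structure Cards, Structure Chains, and Scheduler are only a first generation of “initial structural embryos.” True long-horizon intelligence does not come from repeatedly executing these static structures, but from continually producing “new structural traces” during execution: new cards, new chains, new paths, new protocols. Through the Protocol Induction Card (P-000), the Structure Generator, and the entropy-burst mechanism, I have tried to write this layer down in advance as a “civilization-level specification”: when the system is repeatedly blocked and repeatedly blows up at some high-entropy point, the existing structure is no longer sufficient and a higher-order protocol needs to be induced. This is exactly the philosophical foundation of Level 4: entropy bursts trigger new structure, and structure, as the unit of growth, keeps rewriting itself through time.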

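From Level 0 to Level 3, the system is always “executing rules someone else wrote.” However complex those rules, however many agents and however much scheduling they involve, they remain “design artifacts.” From Level 4 onward, the system begins to write its own rules. You are no longer designing a finished product; you are designing “an environment that can grow its next generation of structures.” This is the step from “executing structure” to “generating structure,” and the true starting point of the move from a tool civilization to a living civilization in which language, structure, and scheduling are unified.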