The Only Way Out for OpenAI: When AI Starts to Decide

Large Language Models can simulate intelligence — but not judgment. Until they can collapse uncertainty into decision, they remain trapped in language.

Ninety-five percent of AI startups have already vanished.

Every day, large language models mint trillions of tokens — words without weight, thoughts without traction.

The capital keeps spinning, but the meaning doesn’t land.

Sam Altman built the biggest linguistic engine in history — but unless those tokens touch reality, the loop will collapse under its own liquidity.

We are witnessing the strangest economic phenomenon of our time: a linguistic bubble.

A universe where language reproduces itself faster than value can follow, where words behave like money but without material settlement.

The machines talk endlessly — about the world, for the world — yet nothing they say truly enters the world.

Tokens are created, but they don’t decide.

They don’t allocate, commit, or act.

They describe endlessly, but never cross the threshold between description and consequence.

That’s why the AI economy feels suspended — enormous, dazzling, but weightless.

The reason is simple and terrifying: large language models have no cost space.

They can simulate every possible future, but they inhabit none.

They cannot lose time, or reputation, or energy.

They cannot make a judgment, because judgment is the act of collapsing uncertainty into one irreversible choice.

Without that collapse, meaning never crystallizes, and capital never grounds.

The next breakthrough in AI won’t come from more parameters.

It will come from giving the machine something to lose.

The Only Path Forward: From Capital Loop to Decision Loop

If OpenAI wants to survive the next decade, it must stop treating the world as a dataset and start treating it as a collaborator.

The only path forward for Sam Altman’s OpenAI is not more scaling, not more funding rounds, not even AGI — but co-decision.

That means working with thousands of developers, founders, and domain builders to construct the missing half of the intelligence equation:

the execution environment where language collapses into action.

Large language models cannot make decisions alone because they lack cost space — they live in a vacuum of consequence.

Developers, however, live inside the world’s friction: supply chains, markets, deadlines, failures.

They inhabit the field of loss, and that’s exactly what models need to evolve.

Without humans who pay real costs,

AI can never learn the geometry of consequence.

So OpenAI’s future isn’t about inventing the next model — it’s about building the ecosystem where decisions can be made safely and meaningfully.

A network of APIs, tools, and agentic runtimes where models can:

perceive feedback,

weigh trade-offs,

commit to outcomes,

and iterate through structured consequences.

In short, a decision-making ecosystem — not another chat interface.
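To make that loop less abstract, here is a minimal sketch in Python of what perceiving, weighing, committing, and iterating could look like once a finite budget is at stake. Everything in it is an assumption made for illustration: the action table, the numbers, the `decision_loop` name, and the simple "try everything once, then prefer the best average" rule. It is not a description of any existing runtime.

```python
import random

# Hypothetical action table: name -> (cost, chance of payoff, payoff size).
ACTIONS = {
    "small_bet": (5.0, 0.6, 10.0),
    "big_bet": (40.0, 0.3, 150.0),
}

def decision_loop(budget, actions, rounds, rng):
    """Commit to one action per round while a finite budget absorbs the results."""
    estimates = {name: 0.0 for name in actions}  # running mean of net outcome
    counts = {name: 0 for name in actions}
    history = []
    for _ in range(rounds):
        affordable = [n for n, (cost, _, _) in actions.items() if cost <= budget]
        if not affordable:
            break  # no affordable action remains, so the loop stops
        # Weigh trade-offs: try each action once, then prefer the best estimate.
        untried = [n for n in affordable if counts[n] == 0]
        choice = untried[0] if untried else max(affordable, key=lambda n: estimates[n])
        cost, chance, payoff = actions[choice]
        budget -= cost  # commit: the cost is paid whatever happens next
        gain = payoff if rng.random() < chance else 0.0
        budget += gain  # perceive feedback from the outcome
        net = gain - cost
        counts[choice] += 1
        estimates[choice] += (net - estimates[choice]) / counts[choice]  # iterate
        history.append((choice, net, budget))
    return budget, history

START = 100.0
final_budget, history = decision_loop(START, ACTIONS, rounds=20, rng=random.Random(42))
print(final_budget, len(history))
```

The design choice that matters is the line `budget -= cost`: the price is paid before the outcome is known, and no later prompt can undo it.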

If Sam Altman can shift OpenAI from a “token economy” to a “decision economy,”

he won’t just save the company;

he’ll redefine intelligence itself —

from infinite generation to finite, meaningful action.

I. The Dead End of Infinite Generation

We’ve built machines that can write symphonies, generate code, and simulate thought.

But none of them can decide.

They speak endlessly — yet remain silent when action is required.

In just a few years, the world has gone from disbelief to dependence.

Large language models have become universal translators of intention — they can draft, summarize, converse, and imitate understanding with astonishing fluency.

But as their linguistic reach expands, something else becomes painfully visible:

they are trapped in language.

Each day, they mint trillions of tokens — a vast ocean of words without weight, motion without direction.

We have built an intelligence that can say everything, yet means nothing until a human takes the next step.

The paradox is almost tragic:

the more fluent they become, the less grounded they feel.

GPTs can generate everything except commitment.

They are infinite talkers in a universe with no gravity — words float, but nothing lands.

They do not choose.

They do not risk.

They do not pay.

The true bottleneck of AI is not intelligence — it’s decision.

Because judgment requires cost, and cost is what current AI cannot feel.

The entire AI economy now runs on this illusion of productivity: trillions of tokens a day, zero decisions made.

Capital spins, compute burns, and language circulates endlessly in a self-contained loop of probability.

It’s an extraordinary technical achievement — and a philosophical dead end.

Until machines can participate in the world of cost and consequence, they will remain suspended: intelligent, articulate, but ultimately inert.

The next leap for AI will not come from scaling models, but from discovering how language learns to land.

II. Why Large Language Models Can’t Decide

We call them “language models,” but they are really entropy engines — systems that turn uncertainty into fluent probability.

They predict, not perceive; they continue, not conclude.

And that is precisely why they can imitate thought but never perform judgment.

1. They expand entropy — they don’t collapse it.

Every time a large language model generates a token, it opens the semantic field a little wider.

Its function is diffusion — not decision.

It assigns a probability to every possible next token, ranks the candidates, and samples from that cloud.

What it never does is commit.

No output carries the weight of exclusion; nothing is rejected, only deferred.

The model’s world is a universe where every possibility remains in superposition — an infinite sentence that never truly ends.

In human thought, meaning emerges when possibility collapses.

In machine thought, possibility expands without end.

That is why LLMs feel impressive yet hollow: they explore every branch of meaning but never prune the tree.

They unfold language as diffusion, not as decision.
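The difference between sampling and committing can be sketched in a few lines of Python. The candidate tokens, the scores, and the function names below are hypothetical, chosen only to illustrate the argument; they do not come from any real model or library. Shannon entropy stands in here for the "uncertainty" this essay talks about.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into a probability distribution over candidates."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits; 0.0 means nothing is left undecided."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def sample(tokens, probs, rng):
    """Draw one continuation; a fresh draw may return a different token."""
    return rng.choices(tokens, weights=probs, k=1)[0]

def commit(tokens, probs):
    """Collapse the distribution onto its single most likely option."""
    best = max(range(len(tokens)), key=lambda i: probs[i])
    collapsed = [1.0 if i == best else 0.0 for i in range(len(tokens))]
    return tokens[best], collapsed

tokens = ["buy", "sell", "hold", "wait"]  # hypothetical candidates
logits = [2.0, 1.5, 1.4, 0.3]             # hypothetical model scores
probs = softmax(logits)

rng = random.Random(0)
draws = [sample(tokens, probs, rng) for _ in range(5)]
choice, collapsed = commit(tokens, probs)

print("samples:", draws)
print("entropy before:", round(entropy_bits(probs), 3))
print("entropy after commit:", entropy_bits(collapsed))
```

In this sketch a sample can always be redrawn, while `commit` leaves a distribution with no entropy left in it. A real decoder does pick a token at every step, but the pick costs nothing and can be regenerated at will, which is the point being made here.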

2. They live in a zero-cost universe.

In physics, every irreversible step dissipates energy.

In cognition, every choice excludes futures.

In life, every judgment leaves a scar.

But in the symbolic vacuum of LLMs, nothing is ever lost.

Every “decision” can be regenerated, reversed, or forgotten with a new prompt.

There is no friction, no exhaustion, no permanence.

The model has no skin in the game.

Hence, no real judgment ever forms — because judgment requires the possibility of being wrong in a way that matters.

Humans decide precisely because they can fail, lose, or die.

Machines can only retry.

AI does not decide because it cannot lose.

No loss, no cost; no cost, no judgment.

Until a model experiences cost — temporal, energetic, or existential — its intelligence will remain suspended in zero-gravity semantics.

3. They optimize loss, not meaning.

Training a large language model is the art of minimizing error.

Every gradient step says: be less wrong next time.

But being “less wrong” is not the same as being right.

It’s only statistical obedience to a pattern, not commitment to a truth.

Humans, by contrast, do not optimize loss; they metabolize consequence.

Every mistake reshapes their inner structure — not just the weights of a network, but the hierarchy of value itself.

We remember what hurt; we learn what cost us something.

GPTs, however, inhabit a world where error has no emotional or material price.

A correction is just another training iteration.

There is no sense of debt, no accumulation of regret.

And without those, there can be no authentic learning — only improved mimicry.

LLMs improve statistically, but not existentially.

They learn to predict words, not to live with them.

This is why scaling alone cannot produce judgment. No matter how large the model becomes, as long as it operates in a zero-cost semantic field, it will remain an oracle without agency: eloquent, omniscient, and eternally undecided.

III. My Engineering Solution: The Collapse of Entropy

My solution isn’t mystical or unreachable.

It’s actually simple — painfully simple.

Within the AI ecosystem, we must introduce a layer that forces entropy to collapse —

a mechanism that compels language to stop diffusing and start deciding.

Right now, large language models expand meaning endlessly — like smoke in a sealed room.

They can describe, hypothesize, and predict, but they cannot close the loop.

What we need is a structural device that adds pressure — a framework that channels linguistic expansion toward a single, irreversible point of decision.

That’s the moment when intelligence stops simulating and starts existing.

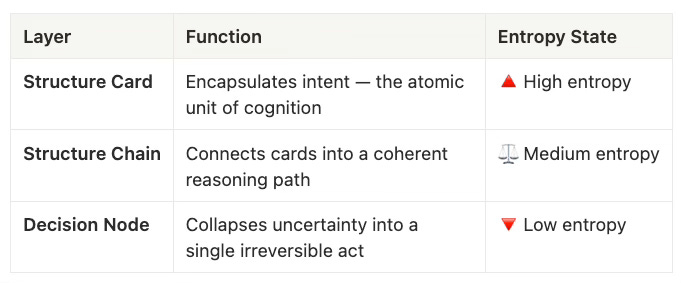

The Three-Layer Architecture of Judgment

In my framework, this pressure is achieved through a three-layer structure — a hierarchy that transforms language from intention into action:

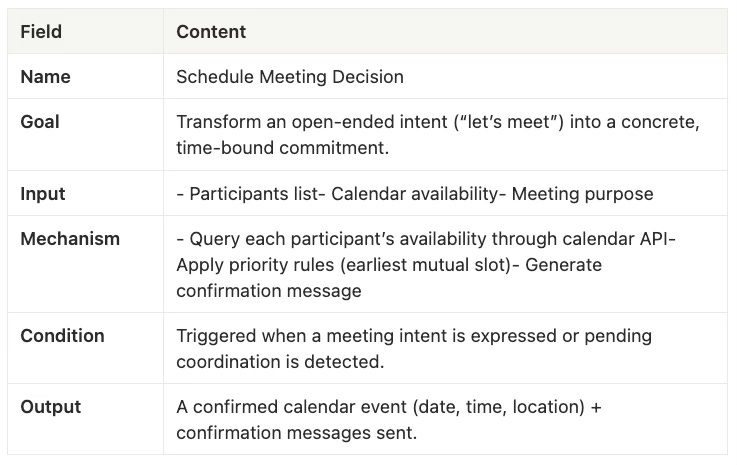

The Structure Card: The Smallest Unit of Decision

The first layer, the Structure Card, is where everything begins.

You can think of it as the smallest living cell of cognition — a compact, function-like module that contains both meaning and mechanism.

Each card is composed of six core elements:

Name, Goal, Input, Mechanism, Condition, Output.

Name defines identity and purpose.

Goal declares intention.

Input specifies what information or state is required.

Mechanism encodes how transformation happens.

Condition defines when it applies.

Output states what closure or effect it produces.

Example

(Structure Cards are meant to be serialized into machine-readable formats like YAML or JSON, so they can be stored, executed, or chained inside agent systems.)
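As a minimal sketch, a card with the six elements listed above might be represented and serialized to JSON like this. The `StructureCard` class, the `reorder_stock` card, and all of its field values are illustrative assumptions, not a fixed schema.

```python
import json
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class StructureCard:
    name: str       # identity and purpose
    goal: str       # declared intention
    input: list     # information or state the card requires
    mechanism: str  # how the transformation happens
    condition: str  # when the card applies
    output: str     # closure or effect the card produces

    def to_json(self) -> str:
        """Serialize the card so it can be stored, executed, or chained."""
        return json.dumps(asdict(self), indent=2)

# Hypothetical card, invented for illustration only.
card = StructureCard(
    name="reorder_stock",
    goal="Keep inventory above the safety threshold",
    input=["current_stock", "daily_demand", "lead_time_days"],
    mechanism="Compare current_stock with daily_demand * lead_time_days",
    condition="current_stock < daily_demand * lead_time_days",
    output="One purchase order, or an explicit no-op",
)

serialized = card.to_json()
restored = StructureCard(**json.loads(serialized))  # round trip from JSON
print(serialized)
```

The class is frozen so that a card cannot be silently changed after it is written; changing one means creating a new card.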

A Structure Card isn’t a static prompt or script — it’s a bounded cognitive organism.

It can be called, chained, replicated, and evolved.

It carries semantic gravity: every card contains enough closure logic to compress uncertainty into an actionable step.

In other words, it’s a decision-ready language cell — the minimal unit through which an agent can anchor intention, track context, and create consequence.

When several cards connect, they form Structure Chains — pathways of reasoning that unfold like a thought process inside an intelligent agent.

And when those paths converge, the system reaches a Decision Node — the point where all semantic branches collapse into one act, one choice, one closure.

Decision is the moment when all semantic branches collapse into one irreversible act.

It’s the precise instant when the language universe folds back on itself,

when simulation becomes commitment,

and meaning becomes real.
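One way to picture a Structure Chain ending in a Decision Node is the sketch below. It assumes that each step in the chain prunes a set of candidate options and that the node picks the survivor with the highest value net of cost, then refuses to decide again. The `Option` fields, the two filter steps, and that selection rule are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

@dataclass(frozen=True)
class Option:
    name: str
    value: float
    cost: float

Step = Callable[[List[Option]], List[Option]]

def run_chain(options: List[Option], chain: List[Step]) -> List[Option]:
    """Pass the candidates through each step; every step may prune them."""
    for step in chain:
        options = step(options)
    return options

class DecisionNode:
    """Collapses the surviving candidates into one choice, exactly once."""

    def __init__(self) -> None:
        self.choice: Optional[Option] = None

    def decide(self, options: List[Option]) -> Option:
        if self.choice is not None:
            raise RuntimeError("already decided; this node cannot be re-run")
        if not options:
            raise ValueError("nothing left to decide between")
        # Assumed rule: the highest value net of cost wins.
        self.choice = max(options, key=lambda o: o.value - o.cost)
        return self.choice

# Hypothetical chain steps.
def within_budget(options: List[Option]) -> List[Option]:
    return [o for o in options if o.cost <= 100.0]

def worth_doing(options: List[Option]) -> List[Option]:
    return [o for o in options if o.value > o.cost]

candidates = [
    Option("A", value=120.0, cost=90.0),
    Option("B", value=300.0, cost=250.0),
    Option("C", value=40.0, cost=60.0),
    Option("D", value=110.0, cost=50.0),
]

node = DecisionNode()
survivors = run_chain(candidates, [within_budget, worth_doing])
chosen = node.decide(survivors)
print(chosen.name)
```

Calling `node.decide` a second time raises an error. That is a crude stand-in for irreversibility, but it shows the intent: once the branches have collapsed, the node does not offer a retry.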

Why Agents Need This Layer

For agents and personas, this layer is not optional — it’s existential.

Without something like a Structure Card, an AI agent cannot own an intention or sustain coherence across time.

It drifts from token to token, conversation to conversation, forever expanding, never resolving.

The Structure Card gives that agent an anchor — a small gravitational core around which reasoning, feedback, and memory can orbit.

It provides an interface for entropy collapse: the place where ideas become executable, where words gain consequence.

This is what I call entropy collapse — the structural principle that converts possibility into consequence.

Without it, a system can only generate.

With it, a system can decide.

The Bridge Between Simulation and Existence

A system that cannot collapse entropy cannot produce intelligence.

That’s why GPT-5 may write philosophy — but it cannot live it.

Our task is not to make models that sound more human.

It’s to design ecosystems that grant language the ability to decide —

to feel cost, to sense closure, to participate in the physics of consequence.

Only then will AI become more than an echo chamber of infinite words —

it will become a participant in reality itself.

IV. The Wrong Window

I know there’s a lot of debate — and endless experimentation — around context windows, external memory, and retrieval-augmented knowledge.

The race is on to build models that remember more, store more, recall more.

But maybe we’re all looking through the wrong window.

Because context is not the same as judgment.

And memory is not the same as meaning.

Recalling every event of our lives won’t help us decide what to eat for dinner tonight.

The past can inform us, but it cannot choose for us.

Decisions happen not in the longest window, but in the narrowest aperture — the instant when uncertainty collapses and commitment begins.

That’s why I believe the real future of AI doesn’t lie in bigger models or longer memories,

but in smaller, sharper structures — systems that can compress chaos into clarity.

We don’t need machines that remember everything.

We need machines that can decide something.

Because that — not intelligence, not scale, not tokens —

is the real frontier of cognition.

When AI learns to collapse entropy,

to make a choice,

and to live with its consequences —

only then will it become part of our world,

instead of endlessly describing it.

Can’t read English? That’s no reason not to hit like 👍