The Sea of Meaning — Vectorization as a Continuous Substrate

How embeddings turn hidden connections into discoverable knowledge

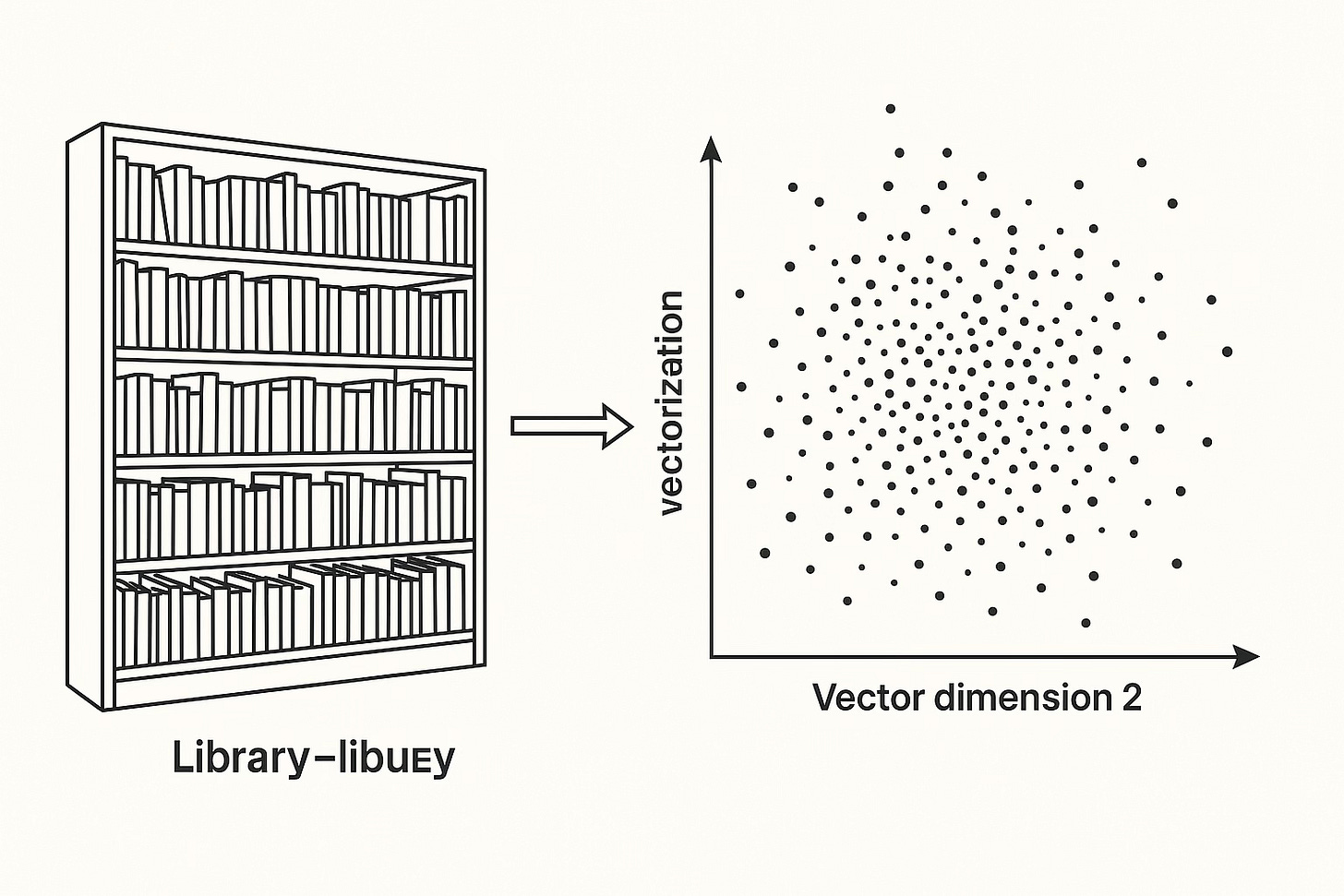

Meaning has always been scattered—locked in books, hidden in archives, buried in different disciplines and industries. What held us back was not a lack of ideas, but the unbearable workload of connecting them. Now, with vectorization, meaning itself has been made into a continuous space. What once went unnoticed can now be revealed. What once took years of scholarship or trial-and-error can now be surfaced in seconds, at a fraction of the cost.

From Fragments to a Sea of Continuity

Historically, knowledge lived in fragments. Every app, database, and field of expertise was its own island. A biologist’s paper and a legal scholar’s commentary had no obvious bridge. An engineer’s log file and a historian’s archive were separated by context and convention. Humans could, in principle, connect them—but only through enormous manual effort: reading, cross-referencing, translating.

This fragmentation meant that many possible connections simply never happened. Ideas that could have sparked breakthroughs were lost in the noise, buried under workload.

Vectorization changes this. By embedding many kinds of data (words, images, tables, code) as vectors, and with multimodal models as vectors in a shared coordinate space, knowledge is no longer trapped in silos. Each item acquires coordinates in a continuous semantic map. What went unnoticed becomes a near neighbor; the hidden connection becomes visible.

How Vectorization Was Discovered

The breakthrough did not come from philosophy but from practice. Researchers noticed that simple neural models trained to predict words learned something unexpected: words with similar meanings clustered together in the model’s internal space.

“King” and “Queen” ended up near each other.

“Paris” and “France” were linked by nearly the same offset that links other capitals to their countries.

Arithmetic even worked: King – Man + Woman ≈ Queen.

This discovery, that meaning could be represented as vectors in high-dimensional space, was profound. It showed that semantics was not just abstract but geometric. From word2vec to GloVe, from BERT to multimodal embeddings, the progression has been the same: expand the scope, refine the mapping, and watch meaning fall into place as coordinates.

In other words, this geometry was not designed by hand; it emerged as a side effect of training models on massive data. The models revealed a truth: the way words are used in language has regularities that can be represented continuously.
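The arithmetic King – Man + Woman ≈ Queen can be made concrete with a minimal sketch. The three-dimensional vectors below are invented for illustration (real embeddings are learned from data and have hundreds of dimensions), and `TOY_VECTORS` and `analogy` are hypothetical names, not part of any library.

```python
import math

# Toy vectors, invented for illustration only (not output from word2vec).
# The dimensions loosely stand for (royalty, maleness, femaleness).
TOY_VECTORS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.1, 0.8],
    "man":   [0.1, 0.9, 0.1],
    "woman": [0.1, 0.1, 0.9],
    "apple": [0.0, 0.2, 0.2],
}

def cosine(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def analogy(a, b, c, vectors):
    """Return the word closest to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in (a, b, c))
    return max(candidates, key=lambda w: cosine(target, vectors[w]))

result = analogy("king", "man", "woman", TOY_VECTORS)
print(result)  # queen
```

Excluding the three input words from the candidates mirrors how such analogy queries are usually evaluated; without that step the nearest vector is often one of the inputs.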

Why Continuity Matters

Discrete categories force rigid definitions. A database field says “customer” or “supplier,” nothing in between. A taxonomy forces every book into one shelf, even if it belongs on several. Humans have always lived within these containers.

Continuity breaks this rigidity. In vector space:

A research paper can be near both “biology” and “computer science.”

A photograph can live between “art” and “documentation.”

A contract clause can cluster with both “legal precedent” and “risk assessment.”

Ambiguity and nuance become first-class citizens. Instead of being errors, they are positions in a space. And because similarity is measured by distance or angle between vectors (cosine similarity is a common choice), previously unnoticed patterns emerge naturally.
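As a rough sketch of what "near both" means, the snippet below scores one document against three category directions using cosine similarity. All vectors are hypothetical and hand-picked; in practice they would come from an embedding model.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical category directions and one document, invented for illustration.
biology = [1.0, 0.0, 0.0]
computer_science = [0.0, 1.0, 0.0]
law = [0.0, 0.0, 1.0]
paper = [0.7, 0.7, 0.0]  # e.g. a bioinformatics paper

sim_bio = cosine_similarity(paper, biology)
sim_cs = cosine_similarity(paper, computer_science)
sim_law = cosine_similarity(paper, law)

print(f"biology: {sim_bio:.2f}, computer science: {sim_cs:.2f}, law: {sim_law:.2f}")
```

The paper scores about 0.71 against both biology and computer science and 0.00 against law. A single-shelf taxonomy would have to pick one category; the vector keeps both.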

From Workload to Discovery

In the past, discovering such patterns was possible in principle but impractical. Connecting 10,000 documents across 20 domains meant endless human labor: reading, indexing, correlating. The cost was prohibitive.

With vectorization, the workload collapses. What was once a mountain of manual effort becomes a geometric query: “Find me things near this meaning.” Suddenly:

A lawyer drafting a contract can surface scientific reports that hint at hidden risks.

A doctor exploring symptoms can find case studies across languages and decades.

A musician searching for inspiration can locate obscure works that share tonal structure.

The discovery is not that these connections exist—they always did—but that they are now accessible at scale and at low cost.
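A "find me things near this meaning" query can be sketched as a brute-force nearest-neighbor search. The document names and vectors below are made up for illustration, and `nearest` is a hypothetical helper. Large collections typically use an approximate nearest-neighbor index instead of scanning every vector.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query, corpus, k=2):
    """Return the ids of the k corpus items most similar to the query vector."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in corpus.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]

# Hypothetical embeddings, invented for illustration only.
CORPUS = {
    "contract_clause":   [0.9, 0.1, 0.0],
    "toxicology_report": [0.6, 0.7, 0.1],
    "case_study":        [0.1, 0.9, 0.2],
    "sonata_analysis":   [0.0, 0.1, 0.9],
}

query = [0.8, 0.3, 0.0]  # stands in for the embedding of a lawyer's draft
top = nearest(query, CORPUS, k=2)
print(top)  # ['contract_clause', 'toxicology_report']
```

The scientific report surfaces next to the contract clause because its vector lies close to the query, not because it shares a label or keyword with it.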

Creation at the Edges of Meaning

The sea of meaning is not only about retrieval. It is also about creation. When embeddings bring disparate fields into proximity, new combinations emerge.

Scientific intuitions encoded as embeddings can recombine into hypotheses no one thought to test.

Legal language aligned with computational structures can give rise to executable governance.

Cultural metaphors from different languages can blend into new artistic forms.

Creation happens at the edges—where proximity reveals unexpected neighbors. By lowering the cost of finding those neighbors, vectorization turns hidden possibilities into active frontiers.

The Leap from Discrete to Continuous

This is the conceptual leap: from discrete containers to continuous fields.

Before: information was sorted into boxes, categories, and file formats.

After: everything floats in a shared ocean of coordinates.

In this ocean, the fundamental operation is not “look up by label” but “sail by meaning.” Knowledge is not locked in shelves but mapped as flows and currents. Discovery becomes navigation.

The Sea of Meaning as a Substrate

Vectorization provides a new substrate for civilization’s knowledge. Like Turing’s tape or the token stream of language models, embeddings form a universal medium—one where meaning is continuous, navigable, and recombinable.

The implications are vast. What once took years of expertise and painstaking labor can now be generated, retrieved, or recombined in moments. What was unnoticed can now be surfaced. The cost of exploration has collapsed; the frontier of meaning has expanded.

The sea of meaning is already here. The question is not whether it exists, but how far we are willing to sail—and what new continents of thought we will discover when we do.