The Social Turing Machine: When the Web Learns to Think

From tokens to meaning, from code to cognition: how Web 4.0, the next evolution of the web, turns language into computation, proof into trust, and the Internet into a living system of understanding.

What if the real Web 4.0 isn’t a market of tokens — but a mind?

Not a financial network, but a cognitive network: a web that can understand what we mean, verify what it does, and act with mathematical proof — across people, machines, and institutions alike.

For decades, the internet has evolved through connection and participation. Web1 let us read, Web2 let us speak, and Web3, as it’s currently imagined, lets us own. But what if the next leap isn’t just about ownership — what if it’s about understanding? Here’s my vision for Web 4.0:

Imagine a web where every sentence can be executed, every action can explain itself, and every decision leaves a verifiable trace of reasoning. A web that doesn’t just store information, but thinks in structure; that doesn’t just move data, but moves meaning.

I call it the Social Turing Machine: a cognitive architecture for the true Web 4.0, where language (meaning), protocol (trust), and intelligence (experience) finally operate as one continuous system.

This is not a product pitch.

It’s a blueprint for a civilization-scale runtime —

an operating system for a world that wants to think in public.

From Networks to Minds

The web was never just a network of cables and pages — it was humanity’s collective attempt to externalize thought.

Each generation of the internet has been a reflection of what civilization was capable of imagining at that time.

Web1.0 gave us the freedom to read.

It was the age of static knowledge — of directories, portals, and hyperlinks. Information moved outward from the few who could publish to the many who could only browse. The web was a library, vast but silent — a place where meaning existed, but conversation did not.

Web2.0 gave us the freedom to speak.

It replaced silence with participation — the rise of blogs, forums, social media, and user-generated content. Suddenly, the web had a voice, and billions joined in. But with that new abundance of expression came a new hierarchy of control. Platforms became intermediaries; algorithms replaced editors; and the “social web” quietly centralized our language into data. The network became noisy, yes — but it stopped being free.

Then came Web3.0 — or what we thought it was.

Tokens, smart contracts, and decentralized ledgers promised to return power to individuals by giving us the freedom to own. Ownership was a necessary correction, but not a complete evolution. Web3 solved the economic problem of the web, but not the semantic one. We could now trade value — but we still couldn’t share understanding.

Because understanding requires more than ownership — it requires structure.

It requires that machines know what we mean, that our logic can be verified, and that every digital act carries proof of its intention.

That next leap, the Web 4.0 beyond ownership, is what I call the Social Turing Machine.

It is a vision of the web as a cognitive architecture, where human intention flows through structured reasoning and emerges as verifiable execution.

A system where meaning, trust, and intelligence form one continuous loop:

human intention → structured reasoning → verifiable execution.

In such a system, the web stops being a marketplace and starts behaving like a mind.

Every message can become a function, every function can justify itself, and every outcome can be proven to align with shared meaning.

This is the frontier of the true Web 4.0 —

not just a decentralized economy, but a decentralized cognition.

Not just networks that connect us,

but networks that think with us.

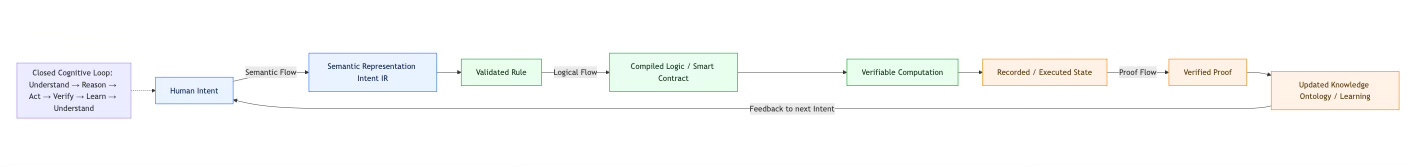

The Core Idea in One Picture

At its essence, the Social Turing Machine (STM) is a loop — a living circuit that connects language, logic, and verification into one continuous flow.

It begins the moment a human expresses intent in natural language and ends when that intent has been executed, verified, and folded back into collective knowledge.

Think of it as the cognitive metabolism of the next web:

Language → Structure → Execution → Proof → Learning.

Each phase transforms meaning into a new form of reality.

Language captures intention — what we want to say or do.

Structure translates that intent into a logical, machine-understandable representation.

Execution turns that logic into verifiable action across digital and physical systems.

Proof ensures every outcome is accountable — cryptographically, logically, and socially.

Learning closes the loop, feeding verified results back into the shared semantic memory of the web.

Over time, this loop allows the web to evolve from a communication network into a cognitive organism — one that doesn’t just transmit information but continuously refines its understanding of the world.

In today’s internet, this loop is broken.

Language remains detached from logic; logic is executed without transparency; and actions rarely return as structured knowledge. The STM proposes to repair that fracture — to rebuild the web as a closed, self-learning cognitive system, where meaning and verification co-evolve.

Visually, you can imagine it as a circle or figure-eight flow:

Human Intent → Semantic Representation → Verified Action → Proof of Execution → Updated Knowledge → Human Intent again.

Every cycle strengthens the web’s collective intelligence.

Every verified action becomes new language.

Every human contribution becomes part of the system’s reasoning.

This is the heart of the Social Turing Machine —

a web that no longer just connects us,

but one that learns from every connection.

The Three Vertical Layers

The Social Turing Machine (STM) is not a single piece of software—it’s a layered cognitive architecture. Each layer corresponds to one of the three forces shaping the true Web 4.0: meaning, trust, and experience. Together, they form a living system in which language can be understood, verified, and enacted as collective intelligence.

You can think of these layers as the three parts of a digital brain.

The Semantic Layer is the cortex—where perception, language, and reasoning take place.

The Protocol Layer is the spinal cord—where execution, reflex, and validation occur.

The Experience Layer is the prefrontal cortex—where decisions, emotions, and interaction meet.

Only when these three layers operate in harmony can the web shift from being a network of data to a system of cognition.

1. The Language / Semantic Layer — Understanding Meaning

Every intelligent act begins with understanding.

The semantic layer is where the system learns to interpret intent—to transform the fluid ambiguity of natural language into precise, machine-usable structures.

This layer is the web’s cognitive cortex: it listens, parses, and models meaning. Its job is to bridge the vast gulf between human expression and machine logic.

a. Purpose

The goal here is simple but radical:

to translate natural language into structured, verifiable Intent IR (Intermediate Representation)—a new grammar of action that machines can reason about, modify, and execute.

Where today’s large language models (LLMs) generate language that sounds intelligent, the STM’s semantic layer goes further—it encodes that meaning as computable intent. Each phrase, command, or statement becomes a data structure that carries explicit logic and contextual metadata.

In other words, meaning becomes portable and auditable.

b. Core Components

LLM Interface — The conversational surface where humans express themselves naturally. It parses input, disambiguates intent, and calls on the system’s deeper semantic engines.

Intent IR (Intermediate Representation) — A formalized structure that captures what the user means, not just what they say. It’s the web’s “assembly language of thought.”

Ontology Graph — A continuously evolving network of concepts, entities, and relations. It anchors language to a shared knowledge base, ensuring that meaning stays consistent across users, domains, and time.

c. Output

The result of this layer is a verified semantic object: an intention that can be reasoned about, shared between systems, or executed safely.

Each Intent IR is like a digital contract between meaning and action—it defines what needs to happen, under what conditions, and why.
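To make the idea of an Intent IR more concrete, here is a minimal Python sketch. The class name `IntentIR` and its fields (`action`, `params`, `conditions`, `rationale`) are hypothetical, chosen to mirror the "what, under what conditions, and why" description above; the STM does not yet define a schema. Canonical JSON plus a SHA-256 digest is one simple way, assumed here, to make an intent portable (every system serializes it to the same bytes) and auditable (anyone can reference the exact intent by its hash).

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class IntentIR:
    """Hypothetical Intent IR: what should happen, under what conditions, and why."""

    action: str                                      # what needs to happen
    params: dict                                     # entities and quantities involved
    conditions: list = field(default_factory=list)   # guards that must hold first
    rationale: str = ""                              # the "why", kept for auditing

    def to_canonical_json(self) -> str:
        # Sorted keys and fixed separators give every system the same bytes
        # for the same intent, which is what makes the object portable.
        return json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))

    def digest(self) -> str:
        # A content hash lets any party reference and audit this exact intent.
        return hashlib.sha256(self.to_canonical_json().encode("utf-8")).hexdigest()


if __name__ == "__main__":
    intent = IntentIR(
        action="ship",
        params={"quantity": 30, "item": "Product_A", "destination": "Distributor_B"},
        conditions=["confirmed_payment == true"],
        rationale="fulfil the open order from Distributor_B",
    )
    print(intent.to_canonical_json())
    print(intent.digest())
```

Because keys are sorted before hashing, two systems that build the same intent with fields in a different order still agree on its digest.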

d. Analogy

If the STM were a brain, this layer would be the cortex—the region responsible for perception and language, where signals from the world are turned into structured thought.

This is where the web begins to “understand itself.”

2. The Protocol / Decentralization Layer — Establishing Trust

Understanding alone isn’t enough.

A system that can interpret meaning but not guarantee truth will collapse under its own uncertainty.

That’s why the next layer exists: to anchor meaning in verifiable reality.

The Protocol Layer is the trust fabric of the Social Turing Machine.

It transforms logic into provable action, and action into immutable record.

a. Purpose

This layer’s mission is to ensure that everything the STM does is provable, auditable, and tamper-resistant.

Every rule, transaction, or state change must be traceable—not because we distrust humans, but because we want to build systems that can be trusted without constant human supervision.

Here, code becomes the enforcement mechanism of meaning.

When a semantic object is compiled into executable logic, it runs within a distributed execution environment that guarantees fairness and reproducibility.

Trust, in this layer, is not a social assumption—it’s a mathematical property.

b. Core Components

Execution Ledger: The distributed memory of the STM, recording every verified action in an immutable chain of events.

Smart Contracts / Logic Blocks: Atomic programs that interpret and enforce Intent IRs, functioning as verifiable mini-protocols for executing meaning.

Proof Mechanisms: Zero-knowledge proofs (ZKPs) let each execution be verified without exposing private data, while digital signatures and cryptographic attestations bind every result to the party that produced or vouched for it.

Identity / DID Systems: Portable digital identities that link every action to accountable authorship—without relying on centralized authorities.
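The Execution Ledger's core property, that history cannot be silently rewritten, can be illustrated with a hash-linked log. This Python sketch is a single-process stand-in under clear assumptions: the names `ExecutionLedger` and `Entry` are hypothetical, and there is no replication, consensus, or signing, all of which a real distributed ledger would need on top.

```python
import hashlib
import json
from dataclasses import dataclass


def entry_digest(index: int, payload: dict, prev_digest: str) -> str:
    # Canonical JSON so that every node hashes identical bytes.
    body = json.dumps({"index": index, "payload": payload, "prev": prev_digest},
                      sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


@dataclass
class Entry:
    index: int
    payload: dict        # a verified action, e.g. a state change plus a proof reference
    prev_digest: str     # digest of the previous entry: this is the chain link
    digest: str          # digest over (index, payload, prev_digest)


class ExecutionLedger:
    """Append-only, hash-linked log. Single process: no replication or consensus."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: list = []

    def append(self, payload: dict) -> Entry:
        prev = self.entries[-1].digest if self.entries else self.GENESIS
        index = len(self.entries)
        entry = Entry(index, payload, prev, entry_digest(index, payload, prev))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        # Recompute every link; editing any past payload breaks the chain there.
        prev = self.GENESIS
        for i, e in enumerate(self.entries):
            if e.index != i or e.prev_digest != prev:
                return False
            if e.digest != entry_digest(e.index, e.payload, e.prev_digest):
                return False
            prev = e.digest
        return True


if __name__ == "__main__":
    ledger = ExecutionLedger()
    ledger.append({"event": "goods_reserved", "item": "Product_A", "quantity": 30})
    ledger.append({"event": "payment_escrowed", "party": "Distributor_B"})
    print(ledger.verify())                       # True
    ledger.entries[0].payload["quantity"] = 300  # tamper with history
    print(ledger.verify())                       # False
```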

c. Output

The output of this layer is the trust fabric itself: a distributed record of verified logic, cryptographically anchored and universally auditable.

Every action, once recorded here, becomes a fact—not because a platform says so, but because the network can reproduce and verify it.

d. Analogy

If the Semantic Layer is the cortex of the system, the Protocol Layer is its spinal cord.

It carries instructions from thought to action and enforces reflex-level integrity.

It doesn’t interpret meaning—it ensures that meaning can’t be corrupted during execution.

This is where trust stops being a matter of belief and becomes a property of computation.

A Note on Decentralization: Toward a Progressive or Hybrid Model

While decentralization remains a philosophical cornerstone of Web3, it need not be absolute.

The true goal is not anarchy of nodes but integrity of systems.

In practice, progressive decentralization—a staged migration from centralized coordination to distributed consensus—may offer a more pragmatic path.

Early-stage systems can rely on verified coordinators or federated trust hubs, blending human governance with cryptographic guarantees.

As semantic standards, verification proofs, and agent-level intelligence mature, control can gradually dissolve into the network itself.

Similarly, hybrid trust models—where on-chain proofs interoperate with off-chain verifiers (auditors, AIs, or legal institutions)—may ensure both speed and legitimacy.

After all, trust in civilization has always been layered: contracts, courts, and communities coexist.

The Social Turing Machine extends that principle to computation.

Decentralization is not a destination but a gradient—

a dynamic balance between autonomy, efficiency, and verifiability.

The protocol layer should therefore be designed not as an ideology, but as an adaptive trust engine—capable of evolving its degree of decentralization according to context, maturity, and ethical demand.
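One way to picture an "adaptive trust engine" is a policy whose acceptance rule changes with the stage of decentralization. In this hypothetical Python sketch, the stage names, the `TrustPolicy` class, and the thresholds (one coordinator, then a strict majority of federated hubs, then more than two thirds of all verifiers) are illustrative assumptions, not a specification. The verifier set can mix on-chain nodes with off-chain auditors, as in the hybrid model.

```python
import math
from dataclasses import dataclass
from fractions import Fraction
from typing import Optional

# Hypothetical stages of progressive decentralization. Each maps to the share
# of registered verifiers that must attest before a result is accepted.
STAGE_QUORUM = {
    "coordinated": None,            # one trusted coordinator signs off
    "federated": Fraction(1, 2),    # strictly more than half of the trust hubs
    "distributed": Fraction(2, 3),  # strictly more than two thirds of all verifiers
}


@dataclass
class TrustPolicy:
    stage: str
    verifiers: set                   # registered parties: hubs, nodes, auditors, AIs
    coordinator: Optional[str] = None

    def required(self) -> int:
        share = STAGE_QUORUM[self.stage]
        if share is None:
            return 1
        return math.floor(len(self.verifiers) * share) + 1

    def accepts(self, attestations: set) -> bool:
        valid = attestations & self.verifiers  # ignore unregistered attesters
        if self.stage == "coordinated":
            return self.coordinator in valid
        return len(valid) >= self.required()


if __name__ == "__main__":
    policy = TrustPolicy("coordinated", {"hub1", "hub2", "hub3"}, coordinator="hub1")
    print(policy.accepts({"hub1"}))          # True
    policy.stage = "federated"               # migrate without changing callers
    print(policy.accepts({"hub1"}))          # False: needs 2 of 3 hubs
    print(policy.accepts({"hub1", "hub3"}))  # True
```

Exact fractions avoid floating-point edge cases in the threshold arithmetic, and moving to the next stage is a change of configuration rather than a rewrite of the code that asks for acceptance.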

3. The Experience / Intelligence Layer — The Human Interface

If the semantic layer is the mind and the protocol layer is the body, the Experience Layer is the personality—the part that makes interaction intuitive, contextual, and human.

This layer defines how people feel the web.

It turns the STM’s complexity into a compositional experience—a space where every action, no matter how complex, feels as natural as having a conversation.

a. Purpose

The Experience Layer’s purpose is to make the system usable, adaptive, and invisible.

Users shouldn’t need to know what an Intent IR is, how a proof works, or what chain an action runs on.

They simply express an intention in plain language, and the system orchestrates everything—understanding, execution, verification—on their behalf.

The goal is not just usability, but symbiosis: a state where human cognition and machine cognition flow together.

b. Core Components

Conversational Agents: LLM-powered assistants that translate between human expression and machine precision. They remember context, anticipate needs, and act as cognitive collaborators.

Adaptive Personalization: Systems that adjust dynamically to a user’s goals, preferences, and roles—tuning complexity to expertise.

Cognitive UX / AI Companions: Interfaces that think with you. They present information as meaning, not data; as structured dialogue, not menus or dashboards.

c. Output

The output of this layer is a seamless human–machine dialogue.

The user doesn’t interact with software—they interact with understanding itself.

Every query, request, or instruction is an act of collaboration with the network’s intelligence.

d. Analogy

This layer is the prefrontal cortex of the STM—the seat of decision-making, planning, and self-reflection.

It’s where language, reasoning, and emotion converge into coherent action.

When this layer matures, the web will no longer feel like a set of disconnected apps and interfaces.

It will feel like a single, adaptive intelligence—one that knows enough to interpret, prove, and act on our behalf, yet transparent enough to explain why.

Putting It All Together

These three layers form a vertical stack—experience above, protocol below, semantics in between—connected by the Semantic Runtime, the “brainstem” that translates meaning into verified execution.

The Semantic Layer defines what we mean.

The Protocol Layer ensures it’s true.

The Experience Layer makes it usable.

When combined, they create an internet that can think, trust, and collaborate—not as separate products, but as a single cognitive infrastructure.

Imagine a simple visual:

┌─────────────────────┐
│ Experience Layer    │ → Human interface, adaptive UX
├─────────────────────┤
│ Semantic Runtime    │ → Language–Logic bridge
├─────────────────────┤
│ Protocol Layer      │ → Verified execution, trust fabric
└─────────────────────┘

This is the architecture of the Social Turing Machine—

an internet that learns like a mind, remembers like a ledger, and interacts like a friend.

The Three Horizontal Flows

If the vertical layers form the anatomy of the Social Turing Machine — its cortex, spinal cord, and interface — then the horizontal flows are its metabolism: the circulation of meaning, logic, and verification through the system.

Where the layers describe what the system is made of, the flows describe how it thinks.

Together, they turn the static architecture of Web 3.0 into a living cognitive loop: a Web 4.0 that can continuously understand, reason, act, and learn.

Each flow represents a transformation — from language into structure, from structure into action, from action into knowledge.

1. The Semantic Flow — Meaning → Form

Every intelligent process begins with interpretation.

The semantic flow captures the first stage of cognition: the moment when human language is translated into structured understanding.

This is where meaning becomes form.

A user expresses an intention — in speech, text, or code. The system’s LLM layer interprets this expression, disambiguates it, and maps it into a structured representation called Intent IR.

The Intent IR is then matched against the ontology graph, ensuring that each concept aligns with a shared vocabulary of entities, relationships, and constraints.

Through this process, raw language becomes semantic structure: a precise, machine-verifiable statement of intent.

For example, the phrase “ship 30 units of Product A to Distributor B” becomes:

action: ship
quantity: 30
unit: Product_A
destination: Distributor_B
condition: confirmed_payment == true

At this point, intention has been formalized — it can be reasoned about, verified, or executed.
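The structured example can be checked mechanically before anything runs. The Python sketch below uses a toy `ONTOLOGY` dictionary (a hypothetical stand-in for the ontology graph) to confirm that every slot refers to an entity of the right type, and it evaluates only the simple `fact == true` condition form shown in the example. A real system would need a far richer condition language.

```python
# Toy ontology: known entities with their types, plus the slots each action
# expects. Every name here is illustrative, not part of a published schema.
ONTOLOGY = {
    "entities": {"Product_A": "Product", "Distributor_B": "Distributor"},
    "actions": {
        "ship": {"quantity": "PositiveInt", "unit": "Product", "destination": "Distributor"},
    },
}

intent = {
    "action": "ship",
    "quantity": 30,
    "unit": "Product_A",
    "destination": "Distributor_B",
    "condition": "confirmed_payment == true",
}


def validate(intent: dict, ontology: dict) -> list:
    """Return a list of problems; an empty list means the intent is well formed."""
    signature = ontology["actions"].get(intent.get("action"))
    if signature is None:
        return [f"unknown action: {intent.get('action')!r}"]
    errors = []
    for slot, expected in signature.items():
        value = intent.get(slot)
        if expected == "PositiveInt":
            ok = isinstance(value, int) and not isinstance(value, bool) and value > 0
        else:
            ok = ontology["entities"].get(value) == expected
        if not ok:
            errors.append(f"{slot} must be a {expected}, got {value!r}")
    return errors


def condition_holds(intent: dict, facts: dict) -> bool:
    """Evaluate the '<fact> == true|false' form used in the example, nothing more."""
    condition = intent.get("condition")
    if not condition:
        return True  # unconditional intent
    fact, op, expected = (part.strip() for part in condition.partition("=="))
    if op != "==" or expected not in ("true", "false"):
        raise ValueError(f"unsupported condition: {condition!r}")
    return facts.get(fact) is (expected == "true")


print(validate(intent, ONTOLOGY))                            # []
print(condition_holds(intent, {"confirmed_payment": True}))  # True
```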

The semantic flow is what ensures that machines understand what we mean, rather than merely reacting to what we say.

It’s the foundation of interoperability — because once meaning is structured, it can move seamlessly across systems, languages, and domains.

Semantic Flow = From Language to Structure = From Meaning to Form.

It’s the phase of perception and understanding — the same process your brain performs when hearing words and forming concepts.

2. The Logical Flow — Form → Function

Once meaning is structured, the next question is: Can it run?

The logical flow is where structured intent becomes executable logic.

It compiles the semantic representation into verifiable computation, ensuring that every action follows a transparent and reproducible path.

Here, Intent IRs are translated into logic blocks or smart contracts — modular programs that define the exact conditions, dependencies, and outcomes of each action.

Each block is cryptographically linked to the trust fabric — meaning that when it executes, its state changes are recorded, auditable, and permanent.

This is the form-to-function transition — the digital equivalent of metabolism.

Meaning that has been understood is now acting in the world.

For instance:

The verified “ship 30 units” intent triggers inventory checks.

The contract validates payment and compliance.

The decentralized ledger records the state changes (goods reserved, payment escrowed).

Each step of execution is provable and replayable. No hidden states, no black boxes.
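The execution steps above can be sketched as a small logic block. `ShipBlock`, its fields, and the approved-destination compliance rule are hypothetical; in the STM vision the `events` list would be committed to the decentralized ledger rather than kept in memory.

```python
from dataclasses import dataclass, field


@dataclass
class ShipBlock:
    """Hypothetical logic block compiled from the 'ship 30 units' intent."""

    item: str
    quantity: int
    destination: str
    price: int                                   # amount owed, in minor currency units
    events: list = field(default_factory=list)   # ordered, replayable state changes

    def execute(self, inventory: dict, deposits: dict, approved: set) -> bool:
        # All checks run before any state changes, so a rejection leaves
        # inventory and deposits untouched and records exactly why it failed.
        if inventory.get(self.item, 0) < self.quantity:
            self.events.append(("rejected", "insufficient inventory"))
            return False
        if deposits.get(self.destination, 0) < self.price:
            self.events.append(("rejected", "payment not confirmed"))
            return False
        if self.destination not in approved:
            self.events.append(("rejected", "destination failed compliance"))
            return False
        inventory[self.item] -= self.quantity      # goods reserved
        deposits[self.destination] -= self.price   # buyer funds moved into escrow
        self.events.append(("goods_reserved", self.item, self.quantity))
        self.events.append(("payment_escrowed", self.destination, self.price))
        return True


if __name__ == "__main__":
    inventory = {"Product_A": 50}
    deposits = {"Distributor_B": 1_200}
    block = ShipBlock("Product_A", 30, "Distributor_B", price=1_200)
    print(block.execute(inventory, deposits, approved={"Distributor_B"}))  # True
    print(block.events)
```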

In traditional computing, execution happens behind closed systems.

In the Social Turing Machine, execution happens in the open, where every transition is observable and accountable.

Logical Flow = From Structure to Execution = From Form to Function.

This is where meaning becomes behavior —

the reasoning center of the web’s cognitive body.

3. The Proof Flow — Function → Knowledge

After execution, a final transformation must occur.

Actions without reflection are mere automation; cognition requires learning.

The proof flow closes this loop — turning action back into understanding.

Each executed state produces verifiable proofs: evidence that an action occurred, under what rules, and with what results.

These proofs are stored alongside their semantic origins, creating a transparent causal chain — from intent → logic → outcome → verification.
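A minimal way to store a proof alongside its semantic origin is a record that hashes the intent and the outcome, names the logic that connected them, and seals all three with a tag. In this Python sketch the tag is an HMAC from the standard library, a shared-key stand-in for the digital signatures or zero-knowledge proofs the text describes: anyone verifying it needs the same secret key, which a production design would avoid. `make_proof`, `check_proof`, and the `logic_id` label are hypothetical.

```python
import hashlib
import hmac
import json


def h(obj) -> str:
    """SHA-256 over canonical JSON, so equal objects hash equally everywhere."""
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def make_proof(intent: dict, logic_id: str, outcome: dict, key: bytes) -> dict:
    # The record links intent -> logic -> outcome; the tag seals the whole link.
    record = {"intent": h(intent), "logic": logic_id, "outcome": h(outcome)}
    record["tag"] = hmac.new(key, h(record).encode("utf-8"), hashlib.sha256).hexdigest()
    return record


def check_proof(record: dict, intent: dict, outcome: dict, key: bytes) -> bool:
    body = {k: record[k] for k in ("intent", "logic", "outcome")}
    expected = hmac.new(key, h(body).encode("utf-8"), hashlib.sha256).hexdigest()
    return (hmac.compare_digest(record["tag"], expected)
            and record["intent"] == h(intent)
            and record["outcome"] == h(outcome))


if __name__ == "__main__":
    key = b"demo-shared-secret"  # hypothetical; never hard-code real keys
    intent = {"action": "ship", "unit": "Product_A", "quantity": 30}
    outcome = {"goods_reserved": 30, "payment_escrowed": True}
    proof = make_proof(intent, "ship_block_v1", outcome, key)
    print(check_proof(proof, intent, outcome, key))                           # True
    print(check_proof(proof, intent, {**outcome, "goods_reserved": 3}, key))  # False
```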

But the process doesn’t end with storage.

The STM feeds these verified results back into its ontology and learning systems.

New patterns are learned, rules are updated, and the system’s shared understanding becomes more accurate over time.

In human terms, this is memory and reflection.

The web, for the first time, gains an internal dialogue — a mechanism for self-correction and growth.

This is what differentiates the Social Turing Machine from earlier systems of automation.

Where Web2 stored data and Web3 secured it, the Social Turing Machine of Web 4.0 learns from it,

integrating proof into meaning, and meaning into evolution.

Proof Flow = From Execution to Knowledge = From Function to Understanding.

Each proof strengthens collective intelligence.

Each verified action becomes a new fact in the world’s semantic fabric.

4. The Closed Cognitive Loop

When these three flows operate together, the system becomes more than infrastructure — it becomes a thinking organism.

Semantic Flow: Perception — understanding meaning.

Logical Flow: Reasoning — acting with structure.

Proof Flow: Reflection — learning from action.

Together they form the closed cognitive loop:

Understand → Reason → Act → Verify → Learn → Understand again.

This cycle is self-reinforcing:

Every understanding leads to new action,

Every action creates new knowledge,

Every proof strengthens shared trust.

In biological terms, this is how cognition emerges — through constant loops of perception, reasoning, and adaptation.

In technical terms, this is the mechanism by which meaning becomes measurable and trust becomes recursive.

Over time, as the Social Turing Machine iterates through this loop, it will not just execute instructions — it will refine its own semantic model of the world.

The web will become a continuous process of thinking:

learning from what it does, reasoning from what it knows, and understanding what it means to act.

Semantic Flow (blue), Logical Flow (green), Proof Flow (gold) — weaving endlessly through one another, feeding energy back into the system’s cognitive core.

The Social Turing Machine lives in that motion —

a web not of content or transactions, but of understanding that sustains itself.

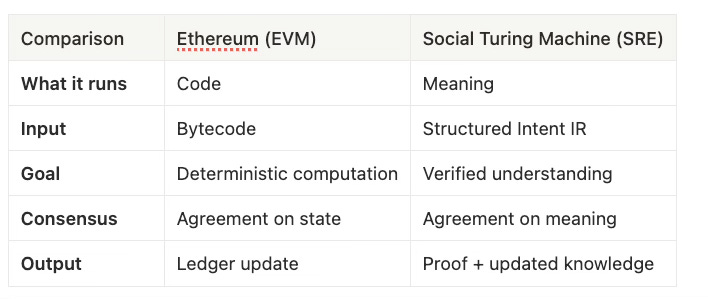

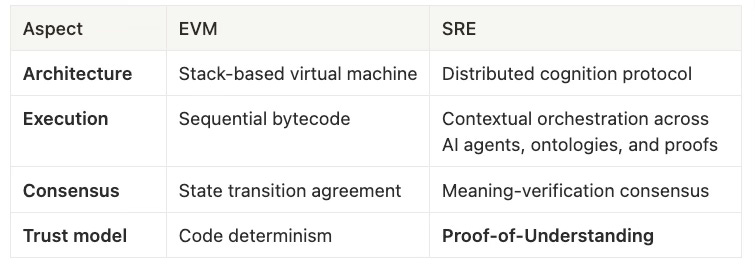

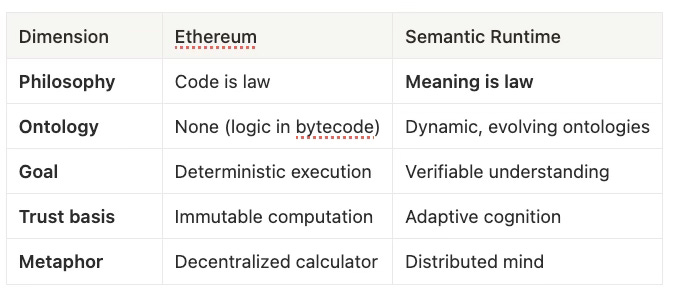

Let’s Envision a Semantic Runtime

Imagine standing at the edge of our digital civilization: behind us, Ethereum’s roaring engine—a global computer that runs code without permission; ahead of us, something quieter but deeper—a runtime not for code, but for meaning. Ethereum solved “how to execute programs we can trust.” The next leap asks: can the web execute understanding itself—reason, verify, and remember, not just compute?

That is the Semantic Runtime Environment (SRE) of the Social Turing Machine. It does for meaning what the EVM did for computation: its input is language, its currency is understanding, and its consensus is proof-of-meaning.

1. From EVM to SRE: running meaning instead of code

The EVM executes bytecode compiled from contract languages such as Solidity; it guarantees that code runs exactly as written.

The SRE executes Intent IRs—structured representations of natural language; it guarantees that meaning runs exactly as intended.

The EVM ensures logic is consistent; the SRE ensures logic, meaning, and intent are consistent.

EVM executes computation. SRE executes cognition.

2. Determinism vs. semantics

Ethereum’s brilliance is determinism: every node gets the same bit-for-bit result. But determinism externalizes context; the machine doesn’t care why a transaction exists.

The SRE targets semantic alignment: nodes converge on a shared understanding of what happened and why. Consensus shifts from bytes to meaning. The unit of output is not only a transaction, but a verified narrative—intent, logic, and proof bound together.

a. Two generations of trust machines

EVM execution is top-down and closed: the bytecode is the law.

SRE execution is bottom-up and adaptive: intent stays open to context; proofs are composable across domains.

The EVM trusts the code. The SRE verifies the intention.

b. From gas to meaning: the cost of understanding

Ethereum introduced gas as the unit cost of computation.

The SRE introduces an epistemic cost: the cost of understanding correctly. Efficiency depends on:

clarity of the ontology (semantic precision),

speed of proof generation (verification efficiency),

reuse of verified knowledge (network learning).

In the EVM, gas enforces fairness in computation.

In the SRE, clarity enforces fairness in meaning.

In Ethereum, computation is scarce. In the SRE, verified meaning is scarce.

c. Persistence: from ledger to memory

Ethereum persists what happened.

The SRE persists what was understood and why—a semantic memory. Every verified action updates the ontology, refines shared logic, and enriches collective understanding. The linear ledger becomes a cognitive spiral: intent → action → proof → learning → new intent.

d. Verification: from code integrity to meaning integrity

Ethereum guarantees cryptographic validity of state changes.

The SRE guarantees semantic validity—that outcomes match intent. This extends PoW/PoS into Proof-of-Understanding, where AI reasoning, ontology alignment, and cryptographic proofs interlock to make trust measurable.

Ethereum proved trust can be computed. The SRE shows trust can think.

e. Philosophy: code is law → meaning is law

Ethereum automated transactions and created financial consensus.

The SRE automates understanding and seeks semantic consensus—alignment not only of value, but of thought.

3. The Semantic Runtime (SRE): heart of the system

Every architecture needs a heartbeat. In the Social Turing Machine, that heartbeat is the Semantic Runtime—not a server, chain, or single program, but a distributed cognition protocol that continuously translates between language, logic, and verification. Think of it as the brainstem linking the Semantic Layer (meaning), Protocol Layer (trust), and Experience Layer (human interface) into one loop of perception, reasoning, and action.

a. Compilation — translating language into logic.

Free-form intent (“Transfer 30 tokens to Alice when shipment arrives”) becomes a semantic graph and Intent IR with entities, conditions, and dependencies. The SRE learns from context to make intent a verifiable object, not a vague instruction.

Coherence ≠ understanding. Understanding begins when language gains structure, context, and consequence.
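To illustrate what compilation produces, here is a deliberately narrow Python sketch that turns the example sentence into a small Intent IR with one dependency edge. The vision assigns this step to an LLM working with an ontology; a regular expression that accepts a single sentence shape is used here only as an assumption-laden stand-in, and `compile_intent` is a hypothetical name.

```python
import re

# One fixed sentence shape: "Transfer <amount> <asset> to <recipient> when <event>".
PATTERN = re.compile(
    r"^transfer\s+(?P<amount>\d+)\s+(?P<asset>\w+)\s+to\s+(?P<recipient>\w+)"
    r"\s+when\s+(?P<event>.+?)\.?$",
    re.IGNORECASE,
)


def compile_intent(sentence: str) -> dict:
    """Compile one sentence into a tiny Intent IR: fields plus dependency edges."""
    m = PATTERN.match(sentence.strip())
    if m is None:
        raise ValueError(f"cannot compile: {sentence!r}")
    trigger = "_".join(m["event"].lower().split())
    return {
        "intent": {
            "action": "transfer",
            "amount": int(m["amount"]),
            "asset": m["asset"].lower(),
            "recipient": m["recipient"],
            "trigger": trigger,
        },
        # The transfer may only fire after the trigger event has been observed.
        "edges": [("transfer", "depends_on", trigger)],
    }


if __name__ == "__main__":
    print(compile_intent("Transfer 30 tokens to Alice when shipment arrives"))
```

Anything outside the one accepted shape raises an error instead of being guessed at, which is the behavior a verifiable compiler should keep even when an LLM replaces the regex.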

b. Orchestration — routing meaning through the machine.

The SRE chooses where and how to execute: off-chain compute or on-chain proof, local agent or federated node, which sequence balances latency, privacy, and trust. It doesn’t move data; it moves meaning across an open field of cognition.
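Routing can be framed as constraint filtering followed by optimization. In this Python sketch the venue names, their latency and trust numbers, and the `route` function are all invented for illustration; the rule is "among venues that meet the intent's trust and privacy requirements, pick the fastest."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Venue:
    name: str
    latency_ms: int   # expected time to a result
    private: bool     # can inputs stay confidential at this venue?
    trust: float      # 0..1, strength of the verification guarantee


# Illustrative venues; every number here is invented for the example.
VENUES = (
    Venue("local_agent", 20, True, 0.3),
    Venue("federated_node", 150, True, 0.7),
    Venue("on_chain", 12_000, False, 0.95),
)


def route(min_trust: float, needs_privacy: bool, venues=VENUES) -> Venue:
    """Return the fastest venue meeting the intent's trust and privacy needs."""
    eligible = [v for v in venues
                if v.trust >= min_trust and (v.private or not needs_privacy)]
    if not eligible:
        raise LookupError("no venue satisfies the constraints")
    return min(eligible, key=lambda v: v.latency_ms)


if __name__ == "__main__":
    print(route(0.6, needs_privacy=True).name)   # federated_node
    print(route(0.9, needs_privacy=False).name)  # on_chain
```

Hard constraints keep a fast but weakly verified venue from ever handling a high-stakes intent; a weighted score over latency, privacy, and trust would be a softer alternative.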

c. Verification — making understanding accountable.

Outputs are checked against cryptographic and logical constraints: did execution follow the Intent IR, the ontology, and the rules—and can others reproduce it? This is where LLMs alone fall short:

Coherence ≠ proof. Black-box ≠ accountable.

The SRE binds every inference and action to external, auditable evidence.
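The "can others reproduce it?" check can be sketched as re-execution: an independent verifier reruns the same deterministic logic on the recorded inputs and compares a digest of the result with the one that was claimed. `ship_logic` and `reproduce` are hypothetical names, and the approach assumes the logic is deterministic, which LLM inference generally is not unless the model, weights, and decoding settings are pinned.

```python
import hashlib
import json
from typing import Callable


def digest(obj) -> str:
    """SHA-256 over canonical JSON."""
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


def ship_logic(state: dict) -> dict:
    # Deterministic: identical inputs always yield identical outputs.
    reserved = min(state["requested"], state["stock"])
    return {"reserved": reserved, "stock_left": state["stock"] - reserved}


def reproduce(logic: Callable[[dict], dict], inputs: dict, claimed: str) -> bool:
    """Rerun the logic on the recorded inputs and compare with the claimed digest."""
    return digest(logic(inputs)) == claimed


if __name__ == "__main__":
    inputs = {"requested": 30, "stock": 50}
    honest = digest(ship_logic(inputs))
    forged = digest({"reserved": 30, "stock_left": 50})  # claims stock never moved
    print(reproduce(ship_logic, inputs, honest))  # True
    print(reproduce(ship_logic, inputs, forged))  # False
```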

d. Persistence — remembering and evolving.

Verified intents/results update semantic memory: vocabularies grow, successful reasoning paths are reinforced, weak ones flagged. Over time, the runtime cultivates institutional memory for civilization-scale cognition.
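A toy version of this persistence step keeps per-path success counts and grows a shared vocabulary only from verified results. The `SemanticMemory` class and its thresholds (`min_trials`, `weak_below`) are hypothetical choices for this Python sketch, not part of any defined protocol.

```python
from collections import defaultdict


class SemanticMemory:
    """Toy semantic memory: a vocabulary plus success counts per reasoning path."""

    def __init__(self, min_trials: int = 3, weak_below: float = 0.5) -> None:
        self.vocabulary = set()
        self.stats = defaultdict(lambda: [0, 0])  # path -> [verified, failed]
        self.min_trials = min_trials              # evidence needed before judging a path
        self.weak_below = weak_below              # success rate under which a path is flagged

    def record(self, path: str, terms: list, verified: bool) -> None:
        if verified:
            self.vocabulary.update(terms)  # only verified results grow the vocabulary
        self.stats[path][0 if verified else 1] += 1

    def success_rate(self, path: str) -> float:
        ok, failed = self.stats.get(path, (0, 0))
        return ok / (ok + failed) if ok + failed else 0.0

    def weak_paths(self) -> list:
        return sorted(
            path for path, (ok, failed) in self.stats.items()
            if ok + failed >= self.min_trials and self.success_rate(path) < self.weak_below
        )


if __name__ == "__main__":
    memory = SemanticMemory()
    for _ in range(3):
        memory.record("direct_shipment", ["shipment"], verified=False)
    memory.record("escrowed_shipment", ["escrow", "shipment"], verified=True)
    print(memory.weak_paths())  # ['direct_shipment']
```

The `min_trials` guard keeps a path from being flagged as weak before it has been tried enough times.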

A Heartbeat.

Compilation → Orchestration → Verification → Persistence → (new) Compilation. The SRE is a co-operative layer of cognition where LLMs, ontologies, proof systems, and ledgers synchronize. It is where the fluid intelligence of language meets the rigid logic of proof—and from that tension, trustworthy intelligence emerges.

Why envision this now?

Ethereum was the mechanical age of trust (trust via computation). The Social Turing Machine is the cognitive age of trust (trust via understanding). We built machines that don’t lie; now we must build machines that can explain the truth—a global computer evolving into a global mind that can understand, verify, and learn with us.

The Minimal Unit — The Block

Every large system needs a smallest stable part — a piece of structure that holds everything else together.

For the Social Turing Machine, that unit is called the Block.

In a traditional blockchain, a block is a bundle of transactions.

In a semantic network, it’s a statement of fact or relationship.

In the Social Turing Machine, a Block is both:

a verifiable, composable unit of structured intent — a small self-contained container that captures what needs to happen, under what logic, and how it can be proven true.

Each Block is like a cognitive cell of the web. It carries Intent, Logic, and Proof — the three components that allow meaning to flow, act, and verify itself across the system.

1. What Exactly Is a Block?

A Block is the smallest actionable container of understanding.

It holds a single “intent” (a human instruction or claim), the logic that defines how that intent should work, and the proof that shows it happened as intended.

You can think of it as the atomic unit of digital reasoning — small enough to move freely between systems, yet complete enough to stand on its own.

Here’s how it works conceptually:

┌────────────────────────────┐
│ Intent (Meaning)           │ → What is being declared or requested?
├────────────────────────────┤
│ Semantics (Structure)      │ → How is it defined and disambiguated?
├────────────────────────────┤
│ Logic (Execution Rules)    │ → Under what conditions does it execute?
├────────────────────────────┤
│ Proof (Verification)       │ → How do we know it occurred as stated?
└────────────────────────────┘

For example:

Intent: “Transfer 30 units of Product A to Distributor B when payment is received.”

Semantics: This phrase is converted into a structured Intent IR — entities (Product A, Distributor B), quantities (30), and conditions (payment = true).

Logic: The rule governing the intent: “If payment is verified, trigger shipment.”

Proof: Once the shipment executes, a digital signature or zero-knowledge proof records that it occurred exactly under those terms.

Each Block represents one closed loop of intention, action, and verification.

It’s the smallest piece of meaning that the system can execute and remember.
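The four parts of a Block can be sketched as a small data structure. In this Python sketch, `Block` and its fields are hypothetical, the logic is reduced to a single named fact that must be true, and the "proof" is a plain SHA-256 commitment over the semantics and outcome: an integrity check, not the digital signature or zero-knowledge proof described in the example.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Optional


def _digest(obj) -> str:
    data = json.dumps(obj, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hashlib.sha256(data).hexdigest()


@dataclass
class Block:
    intent: str                  # the human statement, kept verbatim
    semantics: dict              # structured Intent IR
    condition: str               # name of the fact that must be true (the logic)
    proof: Optional[str] = None  # commitment to semantics + outcome, set on execution

    def run(self, facts: dict) -> Optional[dict]:
        """Execute only if the condition holds, then record a proof of what happened."""
        if not facts.get(self.condition, False):
            return None
        outcome = {"executed": self.semantics["action"], "quantity": self.semantics["quantity"]}
        self.proof = _digest({"semantics": self.semantics, "outcome": outcome})
        return outcome

    def verify(self, outcome: dict) -> bool:
        """Check that a claimed outcome matches what this Block committed to."""
        return self.proof is not None and self.proof == _digest(
            {"semantics": self.semantics, "outcome": outcome})


if __name__ == "__main__":
    block = Block(
        intent="Transfer 30 units of Product A to Distributor B when payment is received.",
        semantics={"action": "transfer", "item": "Product_A", "quantity": 30, "to": "Distributor_B"},
        condition="payment_received",
    )
    outcome = block.run({"payment_received": True})
    print(outcome, block.verify(outcome))
```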

2. Why Containers Matter

Why use containers like Blocks at all? Because meaning without structure disperses.

In language, we use sentences and paragraphs to hold thought together.

In software, we use functions or APIs to define reusable logic.

The Block serves the same role in a semantic runtime: it gives meaning a boundary so it can be reasoned about, transferred, and validated.

Without containers:

Actions would blur into noise.

Intentions could not be referenced or combined.

Verification would be impossible.

A container does three crucial things:

Coherence — it makes a thought or rule self-contained.

Composition — it lets you connect one thought to another safely.

Verification — it provides a finite scope for proving what happened.

In this way, Blocks are not just technical artifacts but cognitive boundaries — the smallest spaces where understanding can exist and be tested.

3. From Blocks to Chains of Meaning

Blocks are designed to connect.

Each Block can reference, depend on, or verify another, forming what the Social Turing Machine calls Structure Chains: networks of meaning that evolve through verified relationships.

A single Block represents a verifiable action (e.g., “payment confirmed”).

A chain of Blocks represents a verifiable narrative (e.g., “order created → payment confirmed → product shipped → delivery completed”).

Traditional blockchains record what happened in a linear ledger.

The STM records what was understood and why it happened — a semantic ledger of cause, condition, and meaning.

Over time, the network of Blocks becomes a living graph of trust — a web that can reason, audit, and even reinterpret its own history based on verifiable understanding.
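A Structure Chain behaves like a dependency graph, so a narrative such as "order created → payment confirmed → product shipped → delivery completed" can be checked with a topological sort. This sketch uses Python's standard `graphlib` module (Python 3.9+); the block IDs and the `narrative` function are hypothetical. It rejects chains with circular dependencies or with any unverified block.

```python
from graphlib import CycleError, TopologicalSorter

# Each block lists the blocks it depends on (IDs taken from the example narrative).
chain = {
    "order_created": set(),
    "payment_confirmed": {"order_created"},
    "product_shipped": {"payment_confirmed"},
    "delivery_completed": {"product_shipped"},
}


def narrative(chain: dict, verified: set) -> list:
    """Return block IDs in causal order, or raise if the chain cannot be trusted."""
    try:
        order = list(TopologicalSorter(chain).static_order())
    except CycleError as err:
        raise ValueError(f"circular dependency: {err.args[1]}") from err
    unverified = [block for block in order if block not in verified]
    if unverified:
        raise ValueError(f"unverified blocks: {unverified}")
    return order


if __name__ == "__main__":
    print(narrative(chain, verified=set(chain)))
```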

4. Containers in Broader Context

The Block is not a metaphor — it’s an engineering necessity.

Every functioning system, from biology to computing, uses some form of container:

In biology: a cell maintains boundaries so life can exist.

In programming: a function isolates logic so it can run safely.

In society: a contract defines terms so actions can be trusted.

The Block serves that role for cognition and digital interaction.

It’s the container of meaning — small enough to be portable, strong enough to preserve integrity, and transparent enough to be verified by anyone.

If the internet’s early layers were built from packets of data,

then the next web will be built from Blocks of meaning.

5. How the Block Evolves Knowledge

Each time a Block completes its cycle — intent → logic → proof — the result is added to the system’s shared memory.

This recursive process allows the Social Turing Machine to learn structurally:

the more Blocks that are verified, the more refined its ontology and reasoning become.

Unlike large language models, which learn statistically by pattern,

the Social Turing Machine learns cognitively by proof.

Every verified Block becomes a new building block of knowledge — a piece of meaning the system can trust and reuse.

Block = Intent + Logic + Proof

→ the smallest executable unit of verified understanding.
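Read as a data structure, the formula suggests something like the sketch below, which also walks one intent → logic → proof cycle into shared memory. It assumes "proof" is simply a SHA-256 commitment to the intent, the inputs, and the result, a stand-in for whatever proof system (signatures, zero-knowledge proofs) the STM would actually use. `SemanticBlock`, `run_block`, and `shared_memory` are hypothetical names.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class SemanticBlock:
    intent: dict                     # what was asked, in structured form
    logic: Callable[[dict], bool]    # the rule that must hold
    proof: str = ""                  # filled in only after the rule holds


def run_block(block: SemanticBlock, facts: dict, memory: list) -> bool:
    """One cycle: intent -> logic -> proof -> shared memory."""
    if not block.logic(facts):
        return False  # nothing verified, so nothing is learned
    record = {"intent": block.intent, "facts": facts, "result": True}
    # Stand-in proof: a hash commitment to intent, inputs, and result.
    block.proof = hashlib.sha256(
        json.dumps(record, sort_keys=True).encode("utf-8")
    ).hexdigest()
    memory.append({"intent": block.intent, "proof": block.proof})
    return True


shared_memory: list = []
ship = SemanticBlock(
    intent={"action": "ship", "qty": 30},
    logic=lambda f: bool(f.get("payment_verified") and f.get("customs_cleared")),
)
```

Only a Block whose logic holds produces a proof and reaches `shared_memory`, which is the sense in which the system learns "by proof" rather than by pattern.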

Summary

A Block is both a technical unit and a conceptual container.

It’s where human intention meets machine logic and becomes accountable to truth.

Blocks make meaning modular, logic transparent, and trust composable.

When thousands of these containers connect, they don’t just form a ledger of transactions —

they form a structure of reason.

This is how the Social Turing Machine begins to “think”:

not through one massive central intelligence,

but through millions of small, verifiable containers of intent —

each a Block, each a little proof that meaning still matters.

In this model, developers no longer build monolithic apps; they compose semantic workflows of agents and Blocks.

Users don’t install software; they converse with intelligent systems that assemble themselves around each goal.

Trust isn’t assumed; it’s generated and verified, block by block.

Large language models have made language powerful again: useful, but not yet trustworthy. Their knowledge is implicit, their origins opaque, their logic unverifiable. They can predict and persuade, but not prove.

The Social Turing Machine (STM) closes that gap. It adds explicit semantics, so meaning is structured and machine-readable; verifiable computation, so every action carries cryptographic proof; and compositional experience, so users interact through intent, not interfaces.

In this architecture, language becomes more than a medium. It becomes the operating system of intelligence itself, where words can execute, verify, and govern. The STM transforms AI from black-box fluency into transparent reasoning, turning conversation into coordination and trust into a protocol.

In this future, we no longer just use language to describe the world; we run the world through it.

MVP Example: Cross-Border E-Commerce Loop

To make the Social Turing Machine concrete, let’s imagine one simple but globally relevant scenario: a cross-border e-commerce transaction.

Today, such a process involves dozens of disconnected systems — invoices, customs declarations, logistics APIs, and payment networks — each with its own data format, rules, and trust model. The result is friction, delays, and an enormous reliance on manual verification. Every actor — buyer, seller, bank, insurer, customs — must trust others blindly or rely on external audits.

The STM proposes a different approach: a closed, verifiable cognitive loop, where every step — from intent to delivery — is transparent, executable, and provable.

1. The Intent: “Ship 30 units of Product A to Distributor B under DAP.”

The process begins with a simple sentence — a natural-language expression of human intention.

“Ship 30 units of Product A to Distributor B under Incoterm DAP.”

The STM’s Semantic Runtime parses this instruction and generates a structured Intent IR (Intermediate Representation).

It identifies the entities (Product A, Distributor B), the quantity (30 units), and the trade term (DAP, Delivered at Place).

This intent becomes a Block, the smallest verifiable container of meaning in the system.

Incoterms (International Commercial Terms) are standardized trade rules that define the responsibilities, costs, and risks of buyers and sellers in international shipping and delivery of goods.
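A toy version of the parsing step from sentence to Intent IR follows. It assumes a fixed sentence template and uses a regular expression in place of the LLM-based interpretation the STM would more likely use. `IntentIR`, its fields, and `parse_intent` are hypothetical. A real Intent IR would also need units, dates, and the named place of destination that a DAP term requires.

```python
import re
from dataclasses import dataclass

# Hypothetical, deliberately narrow grammar for one sentence shape.
PATTERN = re.compile(
    r"Ship (?P<qty>\d+) units of (?P<product>.+?) to (?P<party>.+?)"
    r" under (?:Incoterm )?(?P<term>[A-Z]{3})\.?$"
)
KNOWN_TERMS = {"DAP": "Delivered at Place"}  # illustrative subset of Incoterms


@dataclass(frozen=True)
class IntentIR:
    action: str
    product: str
    quantity: int
    counterparty: str
    incoterm: str


def parse_intent(sentence: str) -> IntentIR:
    """Map one natural-language instruction to a structured intent, or fail loudly."""
    m = PATTERN.match(sentence.strip())
    if m is None:
        raise ValueError(f"unrecognized intent: {sentence!r}")
    term = m["term"]
    if term not in KNOWN_TERMS:
        raise ValueError(f"unsupported Incoterm: {term}")
    return IntentIR("ship", m["product"], int(m["qty"]), m["party"], term)
```

Failing with an error instead of guessing is the point of the design: an intent that cannot be structured should never become a Block.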

2. The Semantic Layer: Structure and Compliance

Next, the semantic layer validates the intent against domain knowledge and regulatory ontologies:

Product classification → checked against trade and customs databases.

Delivery conditions → matched to Incoterm compliance rules.

Distributor B → verified via identity ledger and risk database.

The system now knows not only what is being requested, but also what it means in a legal, financial, and logistical context.

Each condition is encoded as a rule inside the Block:

IF payment_verified == true
AND customs_cleared == true
THEN trigger_shipment(ProductA, 30, DistributorB)

This rule set becomes executable logic, linked to external data feeds (customs APIs, payment gateways, IoT logistics sensors).
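The rule above has a direct runnable reading, assuming each condition is a boolean fact supplied by an external feed. `evaluate_rule` and `trigger_shipment` are hypothetical. One design choice worth noting: the evaluator reports which conditions were missing, so a rule that did not fire is as auditable as one that did.

```python
from functools import partial
from typing import Callable, Dict, List, Optional, Tuple


def trigger_shipment(product: str, qty: int, party: str) -> str:
    # Placeholder side effect; a real deployment would call a logistics API.
    return f"shipment of {qty} x {product} to {party} triggered"


def evaluate_rule(conditions: List[str], facts: Dict[str, bool],
                  action: Callable[[], str]) -> Tuple[bool, Optional[str], List[str]]:
    """IF every condition is true in `facts` THEN run `action`.

    Returns (fired, result, missing) so a non-firing rule can be explained.
    """
    missing = [c for c in conditions if facts.get(c) is not True]
    if missing:
        return False, None, missing
    return True, action(), []


# In the STM these facts would arrive from external feeds (payment gateway,
# customs API); here they are supplied by hand.
rule = ["payment_verified", "customs_cleared"]
ship_action = partial(trigger_shipment, "ProductA", 30, "DistributorB")
```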

3. The Protocol Layer: Execution and Verification

Once conditions are met, the STM’s Protocol Layer handles verifiable execution.

Payment confirmation activates an escrow smart contract that holds the funds until delivery is verified.

A shipping agent (human or AI) initiates the delivery and submits IoT sensor proofs (temperature, route, timestamp).

Each update is hashed and appended as a sub-Block — building a verifiable chain of events.

The entire process runs autonomously but transparently: each actor’s contribution is signed, timestamped, and validated through decentralized consensus.

No single party can alter records, but all can audit the state of play.
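The hashing and signing of sub-Blocks can be sketched as an append-only log, under two loud assumptions. First, HMAC with per-actor secret keys stands in for real digital signatures only to stay within the Python standard library; public-key signatures would let any auditor verify without the secret. Second, decentralized consensus is not modeled at all; this shows a single copy of the log. `KEYS`, `append_update`, and `audit` are hypothetical.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical per-actor secrets (symmetric stand-in for public-key signatures).
KEYS = {"carrier": b"carrier-secret", "customs": b"customs-secret"}
GENESIS = "0" * 64  # arbitrary marker for "no previous sub-Block"


def _core(entry: dict) -> bytes:
    # Canonical bytes of the fields that are hashed and signed.
    core = {k: entry[k] for k in ("actor", "data", "ts", "prev")}
    return json.dumps(core, sort_keys=True).encode("utf-8")


def append_update(log: list, actor: str, data: dict,
                  ts: Optional[float] = None) -> dict:
    """Append a signed, timestamped sub-Block linked to the previous one."""
    entry = {
        "actor": actor,
        "data": data,
        "ts": time.time() if ts is None else ts,
        "prev": log[-1]["hash"] if log else GENESIS,
    }
    body = _core(entry)
    entry["hash"] = hashlib.sha256(body).hexdigest()
    entry["sig"] = hmac.new(KEYS[actor], body, hashlib.sha256).hexdigest()
    log.append(entry)
    return entry


def audit(log: list) -> bool:
    """Re-derive every link, hash, and signature; any later edit fails the check."""
    prev = GENESIS
    for entry in log:
        body = _core(entry)
        expected = hmac.new(KEYS[entry["actor"]], body, hashlib.sha256).hexdigest()
        if (entry["prev"] != prev
                or hashlib.sha256(body).hexdigest() != entry["hash"]
                or not hmac.compare_digest(expected, entry["sig"])):
            return False
        prev = entry["hash"]
    return True
```

For example, appending a customs clearance and then a carrier sensor reading yields two entries chained through `prev`; editing the sensor reading afterwards makes `audit` return False.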

4. The Proof Layer: The One-Page Audit Ticket

When delivery is completed, the system automatically compiles all verified steps — payment, customs, logistics, delivery confirmation — into a one-page audit ticket.

This ticket isn’t a PDF report; it’s a verifiable proof object containing:

transaction summary,

condition checks and rule validations,

cryptographic signatures from all parties,

hash pointers to corresponding Blocks in the semantic ledger.

Anyone — regulator, insurer, auditor — can confirm authenticity by checking the proofs directly.
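One way to let anyone check the ticket directly is to commit to all step hashes with a single Merkle root. This is a swapped-in technique chosen for illustration, not a mechanism the STM specifies. The sketch omits the parties' signatures, and a verifier would still need the underlying Blocks to recompute each step hash. `merkle_root`, `make_ticket`, and `check_ticket` are hypothetical.

```python
import hashlib
from typing import List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def merkle_root(leaves: List[str]) -> str:
    """Merkle root over hex digests; an odd node at any level is paired with itself."""
    if not leaves:
        raise ValueError("no leaves")
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        level = [sha256_hex((a + b).encode("ascii"))
                 for a, b in zip(level[0::2], level[1::2])]
    return level[0]


def make_ticket(summary: str, step_hashes: List[str]) -> dict:
    # The "hash pointers" are the step hashes; the root commits to all of them.
    return {"summary": summary, "pointers": step_hashes,
            "root": merkle_root(step_hashes)}


def check_ticket(ticket: dict) -> bool:
    # Any auditor can recompute the root from the pointers without trusting the issuer.
    return merkle_root(ticket["pointers"]) == ticket["root"]


steps = [sha256_hex(s.encode("utf-8")) for s in
         ("payment", "customs", "logistics", "delivery confirmation")]
ticket = make_ticket("30 x Product A to Distributor B, DAP", steps)
```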

The result is a complete, machine-verifiable narrative: a story of the transaction told in logic and evidence, not paperwork and email threads.

5. Closing the Loop: Learning and Reuse

Once the ticket is generated, it feeds back into the STM’s semantic memory.

The verified rules and patterns — “DAP + escrow + IoT verification” — become reusable templates for future transactions.

The system grows smarter with each verified loop.

Next time, when someone says “Ship goods under DAP,” the STM can instantly assemble the necessary Blocks, agents, and proof mechanisms — learning structurally, not statistically.
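A deliberately simple sketch of template reuse follows, assuming a verified loop is stored as a mapping from key terms to the Block plan that succeeded. Keyword matching like this is only a placeholder for the structural matching the STM describes. `templates`, `learn`, and `assemble` are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical template store: verified pattern -> Block plan that worked.
templates: Dict[Tuple[str, ...], List[str]] = {}


def learn(pattern: Tuple[str, ...], blocks: List[str], verified: bool) -> None:
    # Only loops that closed with a valid proof become reusable templates.
    if verified:
        templates[pattern] = list(blocks)


def assemble(request: str) -> List[str]:
    """Return the plan of the first stored template whose terms all appear in the request."""
    words = set(request.replace(",", " ").replace(".", " ").split())
    for pattern, blocks in templates.items():
        if set(pattern) <= words:
            return list(blocks)
    return []  # no verified precedent: the plan must be built from scratch
```

Usage: after `learn(("Ship", "DAP"), ["escrow", "customs_check", "iot_tracking", "audit_ticket"], verified=True)`, the request "Ship goods under DAP" returns that plan, while an unverified loop is never stored.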

6. The Outcome

This single MVP demonstrates the Social Turing Machine’s full cycle:

Intent → Structure → Logic → Execution → Proof → Learning.

What was once a paper trail becomes a cognitive feedback loop.

What was once fragmented trust becomes machine-verifiable coordination.

A single human sentence — “Ship 30 units…” — turns into an autonomous, provable process across borders and systems.

And when that happens, language stops being an instruction — it becomes infrastructure.

Ecosystem Blueprint: Who Builds What

The Social Turing Machine (STM) is not a single product or company.

It’s a civilization-scale architecture — a shared blueprint for how meaning, trust, and intelligence can coexist in the digital world.

Just as the early Internet wasn’t built by one entity but by layers of collaboration (TCP/IP, the Linux kernel, W3C standards, open browsers), the STM will emerge from an ecosystem of independent creators, builders, and infrastructure players, each working on different layers of the same cognitive web.

You can think of it as three concentric layers of innovation:

Indie Developers → Builders / Protocol Companies → Platforms & Infrastructure.

1. Indie Developers — The Semantic Pioneers

Every major shift in computing starts with individual builders experimenting at the edges.

In the STM ecosystem, independent developers are the first movers — crafting the raw tools that teach the machine how to understand.

Their focus: the semantic layer — making language computational, meaning verifiable, and interfaces conversational.

What they build:

Semantic tooling — utilities that turn unstructured text into structured intent; tools for parsing, tagging, and linking meaning.

Prompt compilers — transforming natural language into machine-readable logic (Intent IR).

Open semantic blocks — reusable templates for intents (“verify identity,” “issue receipt,” “audit transaction”) that anyone can compose.

Lightweight reasoning agents — mini-processors that interpret user requests and invoke the right Blocks or proofs.
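The pairing of open semantic blocks with a lightweight agent can be sketched as a registry plus a dispatcher. The sketch assumes blocks are plain functions published under an intent phrase, and substring matching stands in for the real interpretation an agent would perform. `REGISTRY`, `semantic_block`, `verify_identity`, `issue_receipt`, and `agent` are hypothetical; the `did:` prefix check only mimics the shape of a decentralized identifier and verifies nothing.

```python
from typing import Callable, Dict

# Hypothetical registry of open semantic blocks, keyed by the intent they serve.
REGISTRY: Dict[str, Callable[[dict], dict]] = {}


def semantic_block(intent: str):
    """Decorator that publishes a function as a reusable block for `intent`."""
    def register(fn: Callable[[dict], dict]) -> Callable[[dict], dict]:
        REGISTRY[intent] = fn
        return fn
    return register


@semantic_block("verify identity")
def verify_identity(ctx: dict) -> dict:
    # Placeholder check on identifier shape only, not real DID resolution.
    return {**ctx, "identity_ok": ctx.get("did", "").startswith("did:")}


@semantic_block("issue receipt")
def issue_receipt(ctx: dict) -> dict:
    return {**ctx, "receipt": f"receipt for {ctx.get('amount', 0)}"}


def agent(request: str, ctx: dict) -> dict:
    """Toy agent: run each registered block whose intent phrase appears in the request."""
    text = request.lower()
    for intent, block in REGISTRY.items():
        if intent in text:
            ctx = block(ctx)
    return ctx
```

Because each block takes and returns a plain context dictionary, blocks written by different developers compose without knowing about each other.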

In the early phase, most STM innovation will likely come from this group — small teams or open communities building high-leverage libraries.

Think of them as the semantic hackers of this new web — not writing apps, but teaching the web to think in structures.

Their work will define the early “vocabulary” of the cognitive Internet: the first reusable Blocks and ontologies that form the basis of all higher logic.

2. Builders and Protocol Companies — The Trust Engineers

Once semantics becomes standardized, the next layer focuses on trust, coordination, and scale.

This is where builders and protocol companies step in — creating the connective tissue that turns meaning into verified action.

These organizations will operate like the middle layer of the stack —

building the runtime logic, verifiable execution frameworks, and governance protocols that power STM applications.

What they build:

Web 4.0 middleware: frameworks that connect semantic intent with decentralized execution systems (blockchains, oracles, verification networks).

Proof engines: zero-knowledge proof systems, verifiable computation layers, and other cryptographic primitives that allow AI and humans to share proofs of correctness.

DID and credential frameworks: decentralized identity and reputation systems that attach accountability to actions without exposing private data.

Agent coordination frameworks: systems for multi-agent reasoning, negotiation, and consensus around meaning.

Cross-domain verification services: audit, compliance, and provenance tools that ensure statements and actions can be trusted across networks.

Their work ensures that every semantic object — every Block — is executable, auditable, and reusable across contexts.

In short: indie devs make meaning computational; builders make trust programmable.

They are the engineers who ensure that language can move safely through digital space — that when a machine says “this is true,” the statement carries cryptographic, legal, and social weight.

3. Platforms and Infrastructure — The Cognitive Foundations

Beneath these two layers lies the infrastructure of cognition itself:

the runtime environment that allows billions of Agents, Apps, and Blocks to operate in sync.

This is the domain of platform companies, research institutes, and global consortia — those with the capacity to set technical standards and maintain shared public goods.

What they build:

Semantic Runtime Infrastructure: the distributed computing backbone where language, logic, and proof converge. This includes orchestration layers, state verification systems, and global semantic registries.

Verifiable AI Standards: frameworks for AI systems to produce auditable reasoning chains (proof-of-understanding, model provenance, data traceability).

Semantic Interoperability Protocols: standards ensuring that meaning is portable — that a verified statement or intent expressed in one system can be understood and trusted by another.

Open ontologies and governance frameworks: maintaining the “grammar” of the web’s shared meaning space — versioned, transparent, and globally accessible.

Cognitive infrastructure services: compute nodes optimized for reasoning, proof generation, and knowledge graph persistence.

These organizations will serve the same function that the Linux Foundation, the Ethereum Foundation, and the W3C served in earlier eras: ensuring coherence, openness, and long-term sustainability of the protocol ecosystem.

Their goal is not to own intelligence, but to standardize the governance of intelligence.

4. A Collaboration Model: “Linux + Ethereum + W3C”

The STM ecosystem can’t evolve through competition alone — it needs collaboration protocols, not just communication protocols.

The governance model will likely resemble a hybrid of three proven ecosystems:

Linux’s open-source meritocracy: anyone can contribute code or semantic Blocks, governed by transparent review processes.

Ethereum’s economic coordination layer: incentive systems that reward verified contribution and protocol maintenance.

W3C’s standards governance: ensuring semantic consistency and backward compatibility across global systems.

Together, these principles form the foundation of a self-governing cognitive commons — a global system where meaning, proof, and innovation coexist without central control.

5. Why This Ecosystem Matters

No single actor can build the Social Turing Machine alone — it’s an emergent project of civilization itself.

Each group has its part:

Indie developers ignite the language revolution,

Builders and protocol engineers encode trust into logic,

Platforms and institutions give it structure and continuity.

When these layers align, the web stops being a fragmented marketplace of data and becomes an ecosystem of verifiable understanding.

The result is not another app store, blockchain, or AI model —

it’s the operating system of meaning itself.

Status & Roadmap

The Social Turing Machine today is still in its concept-alpha stage — an architecture under active exploration rather than a finished system.

What exists so far is a language-first prototype: a set of experiments showing that natural language can be compiled into structured Intent IRs, reasoned about through semantic engines, and verified using decentralized proofs.

This early version runs as a hybrid runtime — combining LLMs for interpretation, semantic reasoning engines for structure, and blockchain or cryptographic layers for verification.

In other words, it’s a functioning simulation of trustworthy language: you can express an intent in English, see it transformed into logic, executed under explicit conditions, and verified with proof.

The foundation is now in place.

The next phase focuses on scaling the semantic runtime and expanding its measurable capabilities.

To track progress, the project uses a seven-metric scorecard — each representing a core property of a trustworthy cognitive web:

Precision — Can the system translate intent into logic with near-zero ambiguity?

Coverage — How many domains (legal, commerce, science, education) can the ontology handle?

Trustworthiness — Are all actions and outputs cryptographically verifiable?

Transparency — Can users audit reasoning chains and provenance?

Seamlessness — Is the experience intuitive enough for natural conversation?

Composability — Can Blocks and Agents be reused across systems?

Evolvability — Can the architecture adapt to new technologies and standards without breaking coherence?

The short-term roadmap centers on developing working prototypes in real-world verticals — logistics, governance, and scientific collaboration — to demonstrate closed cognitive loops from intent → proof → learning.

In parallel, the STM is opening collaboration tracks for developers, researchers, and domain experts.

We’re inviting contributions in:

Domain ontologies (industry, law, science),

Proof mechanisms (verifiable AI, zero-knowledge),

Human–machine UX (language-driven interaction).

The long-term goal is not to build a product but to cultivate a shared cognitive infrastructure — an open, verifiable web where meaning, logic, and trust can finally operate as one.

When the Web Opens Its Eyes

Civilizations don’t leap because they discover new metals.

They leap because they discover new forms of understanding.

We have spent three decades wiring the planet;

we can spend the next three teaching it to think.

The Social Turing Machine is not a platform to join, a token to buy, or a dashboard to learn.

It is a promise we make to one another: that language will be honored with structure, that structure will be accountable to proof, and that proof will return as shared wisdom.

If Web1 gave us pages, Web2 voices, and Web3 ownership,

then Web 4.0 gives us conscience—systems that can explain themselves.

Imagine a world where a policy can show its reasoning,

a market can show its fairness,

a model can show its evidence.

Where a single sentence—honest and precise—can traverse agents, ledgers, and laws,

and come back with a receipt of truth.

This is not the triumph of machines over people.

It is the alignment of people with machines—

a co-evolution where our best intuitions are made legible,

our intentions executable,

our institutions corrigible.

We will not get there by decree.

We will get there block by block:

intent → logic → proof → learning.

Every verified outcome becomes a new brick in a cathedral of understanding that no ruler can seize and no crowd can distort.

So let this be our working creed:

Meaning before motion. We don’t act until we understand.

Proof before power. We don’t trust what cannot explain itself.

Learning before legacy. We ship systems that can change their minds.

The day the web learns to think will not arrive with fireworks.

It will arrive quietly, in the moment a city audits itself in one page,

a contract teaches its reader,

a classroom converses with a century of knowledge,

and a person anywhere can prove what is true without asking permission.

When that day comes, we won’t say “the internet changed.”

We’ll say we changed—

from consumers of feeds to authors of meaning,

from platforms of control to protocols of trust,

from code that executes to civilization that reasons.

The switch is simple and immense:

Understand → Reason → Act → Verify → Learn → Repeat.

If this thesis has a single invitation, it is this:

build one loop. Then another. Then a million more.

Not to summon a single, perfect intelligence,

but to awaken a chorus—a living web where

computation becomes civilization, language becomes code, and consensus becomes cognition.

We already have the wires.

What remains is the will.

Let’s teach the web to open its eyes—

and learn, together, how to use them.
