Electricity wasn’t just another invention—it became universal because one principle, voltage, could power lamps, radios, computers, and cities. The internet wasn’t just a network—it became universal because TCP/IP could carry any kind of data: text, images, video, even money. AI feels magical today, but what we are really witnessing is the search for another universal principle. Each leap in technology begins when we discover universality: a minimal set of primitives that can simulate everything else.

And in every field of social and technical progress, whether programming languages, global trade, or governance, we see the same gravitational pull. Systems start fragmented, full of incompatible parts, but over time they compress into a shared foundation, a common substrate that unlocks scale. Universality is not about doing more; it is about doing anything with less.

This series is about tracing that trajectory. From Turing’s universal machine to today’s large language models, and forward to what I call the “social Turing machine,” we’ll explore the breakthroughs that universality has already delivered, and the ones that may still be ahead. The goal is not prediction for its own sake, but orientation: to know what kind of shifts to expect, and where it might be worth placing our collective bets.

What Universality Means

When I say universality, I don’t mean “widespread adoption” or “something everyone uses.” I mean something much more precise: the ability to reduce infinite tasks to a finite set of primitives, paired with a universal substrate that can carry them out. Once that substrate exists, it can simulate anything that falls within its domain.

Think about mathematics. At first glance, the infinite world of equations, curves, and transformations seems impossibly diverse. Yet every calculation can ultimately be expressed in a handful of primitive operations: addition, multiplication, and a small set of axioms. Entire branches of math, from calculus to number theory, are just elaborate recombinations of those primitives.
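To make the compression concrete, here is a minimal Python sketch that follows the standard recursive definitions used in Peano arithmetic. It starts from only zero and a successor operation. Addition is then defined by repeated succession, and multiplication by repeated addition. The tuple encoding and the helper names (`succ`, `add`, `mul`, `from_int`, `to_int`) are illustrative assumptions, not a standard library.

```python
# Peano-style naturals: ZERO is the empty tuple, and succ(n) wraps n in a tuple.
# (An illustrative encoding; any representation of zero and successor would do.)
ZERO: tuple = ()


def succ(n: tuple) -> tuple:
    """Successor: the only way to build a new number."""
    return (n,)


def pred(n: tuple) -> tuple:
    """Predecessor of a nonzero number (undoes one succ)."""
    return n[0]


def add(a: tuple, b: tuple) -> tuple:
    """a + 0 = a;  a + succ(b) = succ(a + b)."""
    return a if b == ZERO else succ(add(a, pred(b)))


def mul(a: tuple, b: tuple) -> tuple:
    """a * 0 = 0;  a * succ(b) = (a * b) + a."""
    return ZERO if b == ZERO else add(mul(a, pred(b)), a)


def from_int(k: int) -> tuple:
    """Build the Peano numeral for a Python int by applying succ k times."""
    n = ZERO
    for _ in range(k):
        n = succ(n)
    return n


def to_int(n: tuple) -> int:
    """Count how many succ layers wrap ZERO."""
    count = 0
    while n != ZERO:
        n = pred(n)
        count += 1
    return count


print(to_int(mul(from_int(6), from_int(7))))  # 42
```

The recursion makes this hopeless for large numbers. That is the point: the primitives are minimal, not efficient.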

Physics works the same way. Newton’s three laws of motion, together with his law of universal gravitation, compressed the unruly motion of apples and planets into a handful of simple principles. With those rules in hand, the universe became predictable: you could simulate the arc of a cannonball or the orbit of the moon. Later, Einstein’s equations compressed an even wider range of phenomena into the geometry of spacetime. These were universality moments in science: finite rules, infinite reach.
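As a sketch of what “simulate the arc of a cannonball” means in practice, the Python below steps Newton’s second law forward in small time increments. It assumes gravity is the only force (no air resistance) and the ground is flat. The function name and parameters are illustrative. For this idealized case, the simulated range can be checked against the closed-form result R = v² · sin(2θ) / g.

```python
import math

G = 9.81  # gravitational acceleration near Earth's surface, m/s^2


def cannonball_range(speed: float, angle_deg: float, dt: float = 1e-4) -> float:
    """Horizontal distance travelled before the ball returns to launch height.

    Assumes gravity is the only force, so by F = m*a the acceleration is
    (0, -G) regardless of mass. Integrates with semi-implicit Euler steps.
    """
    theta = math.radians(angle_deg)
    vx, vy = speed * math.cos(theta), speed * math.sin(theta)
    x = y = 0.0
    while True:
        vy -= G * dt   # velocity changes by acceleration * dt
        x += vx * dt   # position changes by velocity * dt
        y += vy * dt
        if y < 0.0:    # the ball has come back down
            return x


simulated = cannonball_range(50.0, 45.0)
analytic = 50.0**2 * math.sin(math.radians(90.0)) / G
print(f"simulated {simulated:.2f} m, closed form {analytic:.2f} m")
```

The same three update lines would trace the moon’s orbit if the constant acceleration were replaced with an inverse-square pull toward Earth. The rules stay the same; only the inputs change.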

Biology offers an even clearer example. DNA is written in just four bases: A, T, C, and G. Read three at a time, these bases form codons that specify amino acids. This genetic code is shared, with only minor variations, by nearly all known life. Out of this tiny alphabet, nature recombines sequences to produce the staggering diversity of living organisms. A fern, a frog, and a human are all variations on the same set of primitives.
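The recombination step can be sketched in Python. The four bases are read three at a time, giving 4³ = 64 codons, and the standard genetic code maps those codons onto 20 amino acids plus a stop signal. The table below is the standard code in the usual T, C, A, G ordering; some organisms and organelles use slight variants. `translate` is an illustrative helper, not a bioinformatics library API.

```python
from itertools import product

BASES = "TCAG"
# Standard genetic code, one letter per codon in TTT, TTC, TTA, TTG, TCT, ... order.
# '*' marks a stop codon.
AMINO_ACIDS = "FFLLSSSSYY**CC*WLLLLPPPPHHQQRRRRIIIMTTTTNNKKSSRRVVVVAAAADDEEGGGG"
CODON_TABLE = {
    "".join(codon): aa for codon, aa in zip(product(BASES, repeat=3), AMINO_ACIDS)
}


def translate(dna: str) -> str:
    """Read codons from the start of `dna` until a stop codon or the end."""
    protein = []
    for i in range(0, len(dna) - 2, 3):
        amino_acid = CODON_TABLE[dna[i:i + 3]]
        if amino_acid == "*":
            break
        protein.append(amino_acid)
    return "".join(protein)


print(len(CODON_TABLE))              # 64 codons
print(translate("ATGTTTGGCTGGTAA"))  # MFGW: Met, Phe, Gly, Trp, then stop
```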

The pattern is unmistakable. Universality always looks like this:

Compression — distilling a chaotic, infinite landscape into a small toolkit of primitives.

Recombination — building endless complexity by rearranging those primitives in new ways.

This is why universality defines breakthroughs. It is not about solving every problem separately; it is about discovering the meta-solution, the framework that makes every problem look like a variation of the same theme.

The First Leap: Computation Universality

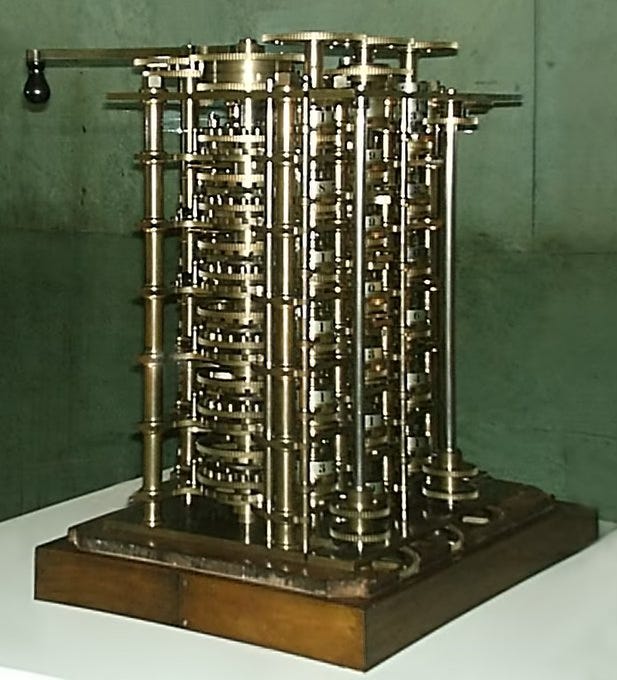

Before 1936, machines could calculate, but they could not generalize. The abacus was reliable, but it only mapped numbers to beads. Babbage’s Difference Engine, designed a century earlier, was more ambitious: a vast tangle of gears and shafts that could crank out polynomial values. Later, electromechanical calculators could add, subtract, multiply, even handle square roots.
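The “polynomial values” point is worth making concrete, because it shows how narrow such machines were. Babbage’s design rested on the method of finite differences. For a polynomial of degree n, the n-th differences between successive values are constant, so once the starting values are set, every later value follows from additions alone. The Python below is a simplified sketch of that method, not a model of the engine’s actual mechanism. The example polynomial 2x² + 3x + 1 and the function names are arbitrary choices.

```python
def initial_differences(poly, degree: int) -> list[int]:
    """Starting register values: p(0) and its forward differences at x = 0.

    (On the real engine these were set by hand; here we compute them once.)
    """
    values = [poly(x) for x in range(degree + 1)]
    registers = []
    while values:
        registers.append(values[0])
        values = [b - a for a, b in zip(values, values[1:])]
    return registers


def difference_engine(registers: list[int], steps: int) -> list[int]:
    """Tabulate successive polynomial values using only addition."""
    regs = list(registers)
    table = []
    for _ in range(steps):
        table.append(regs[0])
        # Each register absorbs the one below it; the last (constant) one never changes.
        for i in range(len(regs) - 1):
            regs[i] += regs[i + 1]
    return table


def p(x):
    return 2 * x**2 + 3 * x + 1


print(difference_engine(initial_differences(p, 2), 5))  # [1, 6, 15, 28, 45]
```

Switching to another polynomial only means resetting the registers. Anything other than tabulating a polynomial, however, is outside what this arrangement can express.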

But all of these were specialized calculators. Each one embodied a single function. If you wanted to add, you built an adding machine. If you wanted logarithms, you designed a new contraption. Complexity was handled through multiplication of hardware, not through abstraction. Civilization was living in a “mapping age,” where hardware = algorithm.

Alan Turing’s breakthrough was to step outside this paradigm. In his 1936 paper, he described an imaginary device with only four primitive actions:

Read a symbol on a tape

Write a symbol on the tape

Move the tape left or right

Change state based on simple rules

That was it: no gears, no special circuits, no pre-built functions. Turing argued that this minimal toolkit could carry out any procedure that can be written down as explicit step-by-step rules, a claim now known as the Church–Turing thesis. He then proved that a single machine could stand in for all the others. By encoding the “instructions” of a specialized machine onto the tape, his universal machine, what we now call the Universal Turing Machine, could imitate any other machine.
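The four primitives are small enough to write down directly. The Python sketch below runs any machine given as a transition table: a dictionary from (state, symbol read) to (symbol to write, direction to move, next state). It then feeds that runner two different tables as data. The table format, the state names, and the `_` blank symbol are illustrative assumptions. Here a Python interpreter plays the role of the universal machine; Turing’s own construction went further and encoded the table as symbols on the tape itself.

```python
from collections import defaultdict

BLANK = "_"
Rules = dict[tuple[str, str], tuple[str, str, str]]


def run(rules: Rules, tape: str, max_steps: int = 10_000) -> str:
    """Run a machine from state 'start' at the leftmost cell until it reaches 'halt'."""
    cells = defaultdict(lambda: BLANK, enumerate(tape))
    state, head, steps = "start", 0, 0
    while state != "halt":
        if steps == max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells[head]                              # read
        write, move, next_state = rules[(state, symbol)]
        cells[head] = write                               # write
        head += 1 if move == "R" else -1                  # move left or right
        state = next_state                                # change state
        steps += 1
    used = range(min(cells), max(cells) + 1)
    return "".join(cells[i] for i in used).strip(BLANK)


# Binary increment: walk right to the end, then carry 1s leftward.
INCREMENT: Rules = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", BLANK): (BLANK, "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", BLANK): ("1", "L", "halt"),
}

# Unary addition: turn "111+11" into "11111" by filling the '+' and erasing one '1'.
UNARY_ADD: Rules = {
    ("start", "1"): ("1", "R", "start"),
    ("start", "+"): ("1", "R", "start"),
    ("start", BLANK): (BLANK, "L", "erase"),
    ("erase", "1"): (BLANK, "L", "halt"),
}

print(run(INCREMENT, "1011"))    # 1100
print(run(UNARY_ADD, "111+11"))  # 11111
```

Nothing in `run` knows about binary numbers or addition. The specialized behavior lives entirely in the table passed in as data.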

This was a profound shift: from a world of many machines, each bound to one task, to a world of one machine that could be reprogrammed endlessly. For the first time, hardware and function were separated. Software was born.

The impact cannot be overstated. Every computer you use today—laptops, smartphones, cloud servers—rests on Turing’s logic. A single physical device can run a word processor in the morning, simulate a weather system in the afternoon, and render a movie at night. All of these are just sequences of read–write–move–state instructions flowing through a universal substrate.

This was the first leap in universality: the compression of infinite tasks into four primitives, plus a universal tape to carry them. The consequences were not just technical, but philosophical. It gave humanity a precise definition of “computable”: anything that can be carried out by these four actions.

(My son’s abacus, a mapping machine for addition and subtraction.)

The Second Leap: Language Universality

If Turing unified the world of computation, the next great unification came in the world of language. For decades, natural language processing (NLP) was a patchwork of specialized tools. You built one algorithm for machine translation, another for sentiment analysis, another for question answering, and still others for summarization, dialogue, or topic modeling. Each task had its own architecture, its own training set, its own evaluation metrics.

The result was a kind of digital Tower of Babel. Every system spoke its own dialect. Progress in one corner of NLP rarely transferred to another. A machine that could translate French to English was useless for detecting sarcasm, and a sentiment classifier had nothing to say about document retrieval. It was the same pre-1936 problem all over again: many “machines,” each confined to a narrow function.

The breakthrough came with large language models. Instead of building a bespoke solution for every task, researchers found that a remarkably wide range of language tasks could be reduced to a single primitive:

Predict the next token.

That’s it. The model doesn’t need a different engine for translation, summarization, or reasoning. It simply keeps predicting a probable next unit of text (a word, subword, or character) based on all the text that precedes it. Out of this humble mechanism, the full spectrum of linguistic intelligence emerges.
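This predict-append-repeat loop can be sketched with a toy. The Python below counts which word follows which in a tiny corpus. It then generates text by repeatedly appending the most frequent next token. This is only an illustration under simplifying assumptions. Real LLMs use neural networks over subword tokens, condition on the whole context rather than only the previous token, and usually sample from the predicted distribution instead of always taking the top choice. The corpus and function names are made up for the example.

```python
from collections import Counter, defaultdict

START, END = "<s>", "</s>"


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every token, which tokens follow it."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = [START] + sentence.split() + [END]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], prompt: str, max_tokens: int = 20) -> str:
    """Autoregressive loop: predict one token, append it, repeat."""
    tokens = prompt.split()
    for _ in range(max_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break
        next_token = followers.most_common(1)[0][0]  # greedy: most frequent follower
        if next_token == END:
            break
        tokens.append(next_token)
    return " ".join(tokens)


corpus = ["language models predict the next token", "models learn from text"]
model = train_bigrams(corpus)
print(generate(model, "language"))  # language models predict the next token
```

The prompt is the only thing that changes between runs. Starting from “models learn” instead steers the same loop toward a different continuation, which is the toy version of prompts acting as programs.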

Once you have a system trained to predict tokens across billions of examples, prompts become the new “programs.” Write “Translate this to German” and the model predicts tokens that correspond to German text. Ask “Summarize this article” and the model predicts a condensed version. Query “What is 37 × 41?” and a well-trained model predicts the tokens of the answer, 1517. Just as the Universal Turing Machine encoded the logic of any specialized calculator onto its tape, the LLM encodes the logic of any specialized language task into the probability of the next token.

The impact has been staggering. What was once a fragmented set of siloed models has collapsed into a single universal substrate. One model, infinite tasks. Translation, reasoning, summarization, dialogue—these are no longer separate engines but different expressions of the same primitive.

This was the second leap in universality. If Turing defined “what is computable,” then LLMs are beginning to define “what is language-modelable.” The boundary of intelligence itself is being redrawn, not as a collection of tasks, but as a single act of prediction unfolding on an infinite tape of tokens.

The Coming Leap: Civilization Universality

If computation was the first leap, and language the second, then what comes next? Look around: society today is a patchwork of silos. Finance, healthcare, education, logistics, governance—each has its own standards, its own data formats, its own institutions. Even inside a single organization, the fragmentation is obvious: the CRM doesn’t talk to the ERP, the accounting software doesn’t align with the HR platform, and government records are locked in formats from half a century ago. We’ve learned to live with these disconnects as if they were natural, but they are symptoms of a deeper problem: we lack a universal substrate for civilization itself.

What might such a substrate look like? I believe it begins with a cycle of four primitives:

Consensus — deciding what matters.

Protocol — encoding that decision into rules.

Structure — crystallizing those rules into roles, institutions, or processes.

Narrative — weaving a story that legitimizes and sustains the structure.

This cycle repeats endlessly. Constitutions are written, companies are founded, movements are born. Each time, the pattern is the same: consensus → protocol → structure → narrative. Yet today this cycle is still slow, brittle, and mostly manual. Every institution reinvents the wheel; every industry defines its own silo.

The coming leap would be to treat this cycle not as a sociological curiosity but as a universal primitive set, the way read/write/move/state defined computation, or token prediction defined language. Imagine a Social Turing Machine, where consensus can be captured digitally, protocols encoded transparently, structures instantiated as executable processes, and narratives reinforced through shared symbolic layers. In such a world, rules and institutions themselves could become executable code—not just written in paper constitutions or buried in bureaucracy, but running on a shared substrate of natural language, APIs, and vector spaces.
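To make the cycle a little more concrete, here is a deliberately toy Python sketch in which each of the four primitives is a function whose output feeds the next. A majority vote (consensus) selects an option. That option becomes an executable rule (protocol). A small process applies the rule and keeps a record (structure). Finally, a summary is generated from the record (narrative). Everything here, from the option names to the spending-cap scenario, is a hypothetical illustration of the idea, not a design for a real Social Turing Machine.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable

Rule = Callable[[int], bool]

# Hypothetical options a group might vote on, each already expressible as a rule.
OPTIONS: dict[str, Rule] = {
    "cap_100": lambda amount: amount <= 100,
    "cap_500": lambda amount: amount <= 500,
}


def consensus(votes: list[str]) -> str:
    """Consensus: decide what matters (here, a simple majority vote)."""
    return Counter(votes).most_common(1)[0][0]


def protocol(decision: str) -> Rule:
    """Protocol: encode the decision as an executable rule."""
    return OPTIONS[decision]


@dataclass
class Structure:
    """Structure: a standing process that applies the rule and keeps a record."""
    rule: Rule
    record: list[tuple[int, bool]] = field(default_factory=list)

    def handle(self, amount: int) -> bool:
        approved = self.rule(amount)
        self.record.append((amount, approved))
        return approved


def narrative(decision: str, structure: Structure) -> str:
    """Narrative: a shared account of what the structure did under the decision."""
    approved = sum(ok for _, ok in structure.record)
    return f"Under {decision}, {approved} of {len(structure.record)} requests were approved."


decision = consensus(["cap_100", "cap_100", "cap_500"])
office = Structure(protocol(decision))
for request in (50, 150, 80):
    office.handle(request)
print(narrative(decision, office))  # Under cap_100, 2 of 3 requests were approved.
```

Changing the votes changes the rule, the process’s behavior, and the resulting story, without rewriting any of the machinery.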

This is, of course, speculation. But then again, so was Turing’s universal machine in 1936, and so were the first neural probabilistic language models in the early 2000s. What matters is the direction of compression: an infinite diversity of social tasks, reduced to a finite cycle of primitives. From there, recombination does the rest.

If the first leap defined what is computable, and the second what is language-modelable, then the third may define what is governable and sharable at the scale of civilization.

Why Universality Defines Breakthroughs

When people talk about breakthroughs, they often imagine more power—faster processors, bigger datasets, stronger engines. But history shows that raw scale is not what truly changes the game. What defines a breakthrough is not the ability to do more of the same, but the ability to do anything new with less. That is the essence of universality.

Universality is about compression. A messy, fragmented field suddenly collapses into a handful of primitives and a universal substrate that can recombine them. The abacus, the adding machine, and the polynomial calculator were all swept into a single framework of read, write, move, and state. Translation, summarization, and reasoning dissolved into the single act of predicting the next token. In each case, the endless complexity of tasks was compressed into a smaller and smaller toolkit.

But compression alone is not enough. The real magic comes from recombination. Once you’ve identified the minimal building blocks, you can shuffle and stack them into countless new configurations. With just four DNA bases, life built whales and willows. With just two kinds of electric charge, engineers built circuits, radios, and supercomputers. With four computational primitives, programmers built every software system we know today. Universality means that a finite toolbox can be endlessly repurposed.
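Digital logic offers a compact illustration of this recombination. A single two-input gate, NAND, is functionally complete: every Boolean function can be built from it. The Python sketch below composes NAND into NOT, AND, OR, and XOR, then into a full adder, and finally into an 8-bit ripple-carry adder. It models the logic only, not physical circuits. The function names and the 8-bit width are choices made for the example.

```python
def nand(a: bool, b: bool) -> bool:
    """The only primitive."""
    return not (a and b)


def not_(a: bool) -> bool:
    return nand(a, a)


def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))


def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))


def xor(a: bool, b: bool) -> bool:
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))


def full_adder(a: bool, b: bool, carry_in: bool) -> tuple[bool, bool]:
    """Add three bits; return (sum bit, carry out)."""
    partial = xor(a, b)
    return xor(partial, carry_in), or_(and_(a, b), and_(partial, carry_in))


def add(x: int, y: int, width: int = 8) -> int:
    """Add two unsigned integers bit by bit; overflow beyond `width` bits is dropped."""
    carry, result = False, 0
    for i in range(width):
        bit, carry = full_adder(bool(x >> i & 1), bool(y >> i & 1), carry)
        result |= int(bit) << i
    return result


print(add(37, 41))  # 78
```

Nothing new enters between `nand` and `add`. Each layer is a recombination of the one below it.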

That is why universality defines breakthroughs. It does not merely speed up existing processes—it rewrites the limits of what is possible. The arrival of software meant we no longer needed a new machine for each task; one machine could simulate all of them. The arrival of LLMs means we no longer need a separate model for each linguistic task; one model can fluidly perform all of them. And if a Social Turing Machine emerges, we may no longer need separate institutions for each domain; one substrate could coordinate many forms of governance, commerce, and collective action.

Every leap in history follows this pattern: find the smallest set of building blocks, discover the universal substrate, and unleash infinite recombination. Breakthroughs are not about adding horsepower—they are about discovering universality.

The Road Ahead

When we zoom out, the pattern is unmistakable. Each era-defining breakthrough—whether in science, engineering, or society—has come from a moment of universality. We discover a set of minimal primitives, we build a universal substrate to carry them, and suddenly infinite possibilities open up.

In computation, it was Turing’s four actions and a universal tape.

In language, it became token prediction on a universal corpus.

And in civilization, we may soon see consensus, protocol, structure, and narrative woven into a new universal cycle.

Universality doesn’t just make things faster. It changes the horizon of what is possible. It tells us that instead of multiplying silos—more machines, more models, more institutions—we can compress them into a common base and let recombination do the rest. That is how breakthroughs scale.

This is why universality matters. It is not a curiosity of computer science, nor a quirk of AI research. It is the hidden law of progress: breakthroughs happen when we collapse chaos into clarity, when we discover the few rules that can simulate everything else.
