What Claude Skills Validated Me About Intelligence

Language, structure, and time: the smallest loop that makes cognition alive

We don’t notice the moment a tool becomes a medium. The printing press wasn’t just faster writing — it was the externalization of memory. The internet wasn’t just connected computers — it was the liquefaction of space. And now, quietly, we’re crossing another threshold: language is no longer just a representation of thought. It’s becoming executable. It’s learning to run.

The Threshold We’re Standing On

For most of human history, there’s been a wall between meaning and mechanism. You could describe what you wanted in language, but someone had to translate that description into logic, into code, into action. There was always a translator, always a gap, always friction between intention and execution.

That wall is crumbling.

With systems like Claude Skills, we’re watching the birth of something I’ve been calling language as runtime — the moment when natural language doesn’t just describe a process, but becomes the process itself. When a sentence can be both meaning and mechanism, both thought and tool.

If you’re not yet familiar with Claude Skills, I just wrote a tutorial on them.

But here’s what fascinates me: this isn’t just a technological shift. It’s exposing something fundamental about how intelligence actually works. And that’s what I want to talk about today.

Because underneath this moment, underneath all the talk about AI and agents and automation, there’s a pattern so simple it fits in one diagram, yet so complete it can model cognition, intelligence, and life itself.

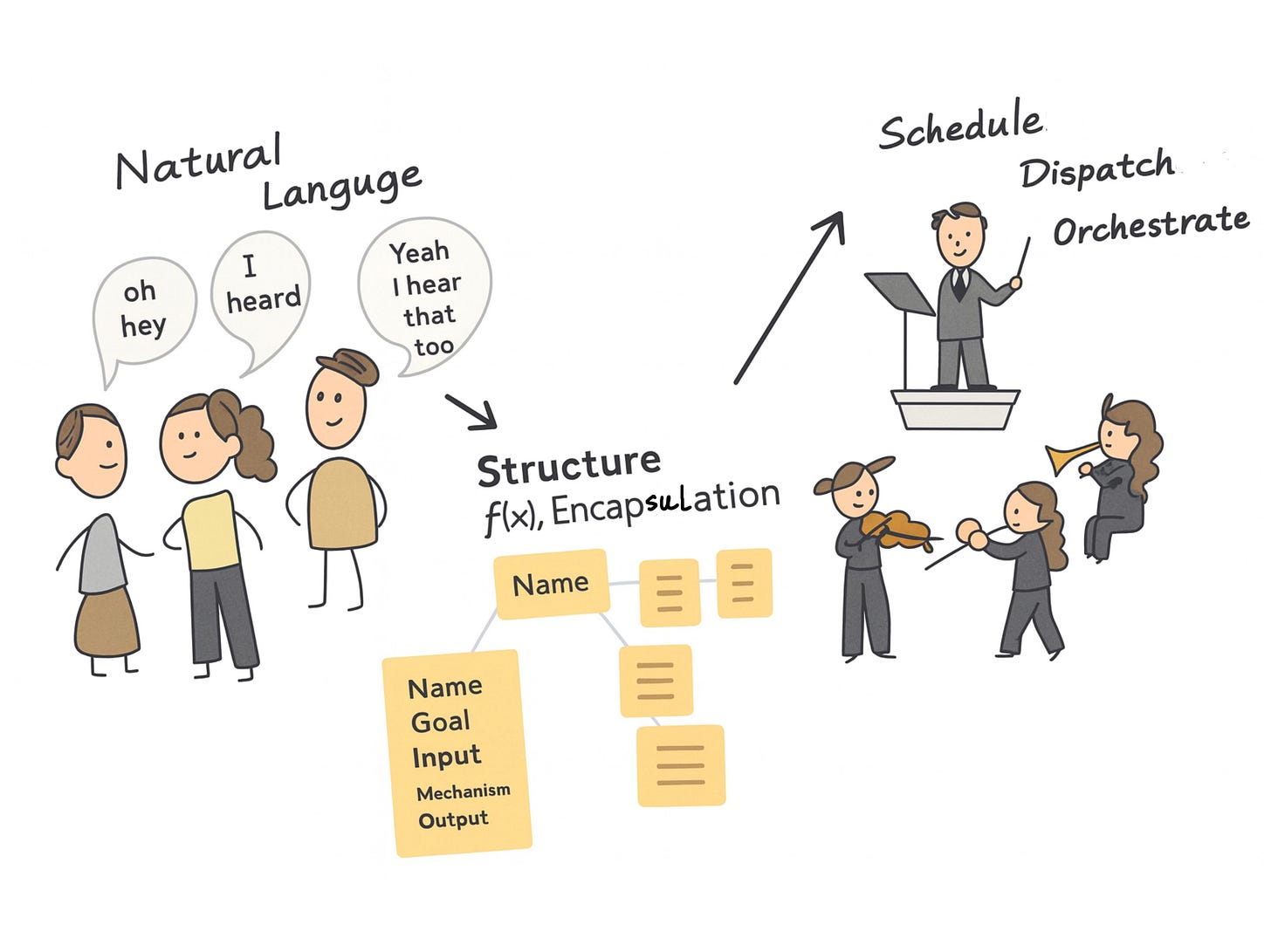

I call it L–S–D: Language–Structure–Scheduler.

And I think it might be the smallest loop that matters.

The Anatomy of Thought

Let me show you what I mean.

Every intelligent system — whether it’s a mind, a civilization, or an AI — does three things in an endless cycle:

First, it perceives. It takes in the chaos of the world, the entropy, the noise. This is the Language layer — not language in the narrow sense of words, but language as the interface between raw reality and structured understanding. It’s how the world enters the system.

Second, it thinks. It compresses that chaos into something stable, something callable, something that can be used. This is the Structure layer — the place where perception becomes cognition, where patterns crystallize into functions. It’s where understanding gets architecture.

Third, it acts. It decides when and how to deploy those structures through time. This is the Scheduler layer — the orchestrator, the thing that maintains state and continuity, that chooses which structures to invoke and when. It’s what turns cognition into life.

And then — critically — the output becomes new input. The loop closes. The system breathes.

This is the L–S–D cycle: Language → Structure → Scheduler → Feedback → Language.

It’s not a pipeline. It’s a pulse.
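To make the cycle concrete, here is a minimal Python sketch of a few turns of the loop. It assumes each layer can be reduced to a plain function. `perceive` stands in for Language, `structure` for Structure, and `schedule` for the Scheduler. All of these names are hypothetical illustrations and are not part of Claude Skills or any library. The only thing the sketch is meant to show is closure: each turn's output is appended to the next turn's input.

```python
def perceive(raw: str) -> list[str]:
    """Language layer (hypothetical): turn raw input into discrete tokens."""
    return raw.lower().split()

def structure(tokens: list[str]) -> dict[str, int]:
    """Structure layer (hypothetical): compress tokens into a stable form, here a count."""
    counts: dict[str, int] = {}
    for tok in tokens:
        counts[tok] = counts.get(tok, 0) + 1
    return counts

def schedule(model: dict[str, int]) -> str:
    """Scheduler layer (hypothetical): pick what to act on next, here the most frequent token."""
    top = max(model, key=model.get)  # ties resolve to the first token seen
    return f"focus {top}"

def run_loop(seed: str, turns: int) -> list[str]:
    """Language -> Structure -> Scheduler, with each output fed back in as new input."""
    history: list[str] = []
    signal = seed
    for _ in range(turns):
        action = schedule(structure(perceive(signal)))
        history.append(action)
        signal = signal + " " + action  # feedback: the output becomes part of the next input
    return history

print(run_loop("Data data model", 2))  # ['focus data', 'focus data']
```

This is the loop in its most trivial form. The action from turn one becomes part of what turn two perceives, so the system's own output reinforces its next decision.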

Language: The Perception Layer

Let’s start with Language.

When I say “language,” I’m not just talking about sentences. I’m talking about the raw semantic material of the world — the entities, events, resources, obligations, and relationships that make up experience itself. This is what I call Primitive IR (Intermediate Representation): the stable semantic atoms that perception extracts from entropy.

Think of it like this: before you can think about a problem, you have to parse it. You have to recognize that this is an entity, that’s a resource, this is an event, that’s a constraint. You have to transform the continuous flux of reality into discrete, nameable primitives.
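Here is a toy version of that parsing step. It assumes a tiny hand-written lexicon in place of a real model. The `PrimitiveKind` categories mirror the ones named above, with relationships left out for brevity. `LEXICON` and `extract_primitives` are hypothetical illustrations. A language model would do this mapping statistically, so the sketch only shows the shape of the result: a list of discrete, typed atoms, with everything unrecognized discarded as noise.

```python
from dataclasses import dataclass
from enum import Enum

class PrimitiveKind(Enum):
    ENTITY = "entity"
    EVENT = "event"
    RESOURCE = "resource"
    OBLIGATION = "obligation"
    CONSTRAINT = "constraint"

@dataclass(frozen=True)
class Primitive:
    kind: PrimitiveKind
    text: str

# Hypothetical lexicon standing in for learned perception.
LEXICON = {
    "alice": PrimitiveKind.ENTITY,
    "pay": PrimitiveKind.EVENT,
    "invoice": PrimitiveKind.RESOURCE,
    "must": PrimitiveKind.OBLIGATION,
    "friday": PrimitiveKind.CONSTRAINT,
}

def extract_primitives(sentence: str) -> list[Primitive]:
    """Map each recognized word to a typed primitive; unknown words are dropped as noise."""
    words = sentence.lower().replace(",", " ").replace(".", " ").split()
    return [Primitive(LEXICON[w], w) for w in words if w in LEXICON]

for p in extract_primitives("Alice must pay the invoice by Friday."):
    print(p.kind.value, p.text)
# entity alice / obligation must / event pay / resource invoice / constraint friday
```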

I have explained this concept in more detail in a separate article.

Language, at this level, is the interface that makes cognition possible. It’s not about communication — it’s about compression. It’s about taking the world’s infinite dimensionality and collapsing it into something tractable, something a mind can hold.

And here’s the thing: this is exactly what large language models are doing. They’re not just generating text. They’re performing this primordial parsing function — transforming the statistical chaos of human expression into stable semantic representations. They’re building the perception layer.

But perception alone isn’t intelligence. It’s just the first breath.

Structure: The Cognition Layer

Once you have primitives, you need to combine them. You need to build functions.

This is where the Structure layer comes in — and this is where most people get stuck. Because structure isn’t just organization. It’s not just putting things in boxes or drawing diagrams. Structure is callable cognition. It’s thought that has gained internal architecture.

I’ve been working with a format I call Structure Cards — a minimal template for encoding cognitive functions:

Name: What is this?

Goal: Why does it exist?

Input: What does it receive?

Mechanism: How does it work?

Condition: When should it run?

Output: What does it produce?

Six fields. That’s it. But these six fields are enough to encode almost any thought process, any decision procedure, any cognitive operation.
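One way to read a Structure Card is as a typed record whose `condition` and `mechanism` fields happen to be executable. The sketch below makes that assumption explicit with a hypothetical `StructureCard` dataclass. `input` and `output` are plain descriptions, `condition` is a predicate, and `mechanism` is a function. It illustrates the six-field template only and is not a format defined by Claude Skills.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class StructureCard:
    name: str                          # What is this?
    goal: str                          # Why does it exist?
    input: str                         # What does it receive? (description)
    mechanism: Callable[[Any], Any]    # How does it work?
    condition: Callable[[Any], bool]   # When should it run?
    output: str                        # What does it produce? (description)

    def __call__(self, data: Any) -> Any:
        """Run the mechanism only when the condition holds; otherwise return None."""
        if not self.condition(data):
            return None
        return self.mechanism(data)

summarize = StructureCard(
    name="summarize",
    goal="Reduce a note to its first sentence",
    input="a note as a string",
    mechanism=lambda text: text.split(".")[0].strip() + ".",
    condition=lambda text: isinstance(text, str) and len(text) > 20,
    output="a one-sentence summary",
)

print(summarize("The loop closes here. Then it repeats."))  # The loop closes here.
print(summarize("Too short."))                              # None
```

Keeping `condition` separate from `mechanism` is what lets something outside the card decide whether to call it at all.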

I also tried to explain this concept through a more vivid illustration.

A Structure Card is a thought that can be called. It’s language that has been stabilized into logic. And once you have one, you can combine it with others. You can chain them, compose them, nest them. You can build cognitive architectures the way you build software.
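Chaining and nesting can be sketched with a trimmed-down card that keeps only the fields composition needs. The names `Card` and `chain` are hypothetical. The sketch also assumes that a card whose condition fails passes its input through unchanged, so a chain never breaks partway. The key property is that `chain` returns another `Card`, which means a composed structure can itself be composed.

```python
from dataclasses import dataclass
from typing import Any, Callable

@dataclass
class Card:
    """Trimmed-down Structure Card (hypothetical): name, mechanism, condition."""
    name: str
    mechanism: Callable[[Any], Any]
    condition: Callable[[Any], bool] = lambda _: True

    def __call__(self, data: Any) -> Any:
        # Assumption: a failed condition passes the input through untouched.
        return self.mechanism(data) if self.condition(data) else data

def chain(*cards: Card) -> Card:
    """Compose cards left to right; the result is itself a Card, so chains nest."""
    def run(data: Any) -> Any:
        for card in cards:
            data = card(data)
        return data
    return Card(name=" -> ".join(c.name for c in cards), mechanism=run)

clean = chain(Card("strip", str.strip), Card("lower", str.lower))
pipeline = chain(clean, Card("words", str.split))      # nesting a chain inside a chain
shout = Card("shout", str.upper, condition=lambda s: len(s) < 5)

print(pipeline.name)                                   # strip -> lower -> words
print(pipeline("  Language Structure Scheduler "))     # ['language', 'structure', 'scheduler']
print(shout("abc"), shout("abcdef"))                   # ABC abcdef
```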

This is the leap that systems like Claude Skills are starting to make: they’re not just generating text anymore. They’re generating structures — reusable, declarative, invokable functions written in natural language. They’re moving from language to cognition.

But there’s still a missing piece.

Scheduler: The Life Layer

Here’s the problem with structure alone: it’s static. A Structure Card by itself is like a neuron without a nervous system, a gene without a cell. It has potential, but no continuity. No memory. No life.

That’s what the Scheduler provides.

The Scheduler is the temporal layer — the thing that decides when to invoke structures, which structures to combine, how to maintain state across invocations. It’s the choreographer of cognition. It’s what transforms isolated functions into an ecosystem.
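Here is a minimal sketch of that temporal layer, built on several assumptions. Structures share one `state` dictionary that persists across invocations. Each structure's condition reads that state. The scheduler fires the first eligible structure, records it in `history`, and stops when nothing is eligible. `Structure` and `Scheduler` are hypothetical names. In the usage example, the structures are deliberately listed in reverse order, which shows that firing order comes from state rather than from list position.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Optional

@dataclass
class Structure:
    name: str
    condition: Callable[[dict], bool]   # reads shared state: should this fire now?
    mechanism: Callable[[dict], dict]   # returns updates to merge into shared state

@dataclass
class Scheduler:
    structures: list[Structure]
    state: dict[str, Any] = field(default_factory=dict)  # memory across invocations
    history: list[str] = field(default_factory=list)     # which structures ran, in order

    def step(self) -> Optional[str]:
        """Invoke the first structure whose condition holds against the current state."""
        for s in self.structures:
            if s.condition(self.state):
                self.state.update(s.mechanism(self.state))
                self.history.append(s.name)
                return s.name
        return None  # nothing is eligible: the system is idle

    def run(self, max_steps: int = 10) -> list[str]:
        """Keep stepping until idle or until the step budget runs out."""
        for _ in range(max_steps):
            if self.step() is None:
                break
        return self.history

sched = Scheduler([
    Structure("publish", lambda s: bool(s.get("reviewed")) and not s.get("published"),
              lambda s: {"published": True}),
    Structure("review", lambda s: "text" in s and not s.get("reviewed"),
              lambda s: {"reviewed": True}),
    Structure("fetch", lambda s: "text" not in s, lambda s: {"text": "draft"}),
])
print(sched.run())  # ['fetch', 'review', 'publish']
```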

Think about your own mind. You’re not just a collection of thoughts. You’re a process that sequences those thoughts through time, that remembers what you’ve done, that adapts based on feedback, that maintains continuity across interruptions. That’s scheduling.

And this is where current AI systems are still primitive. Claude Skills, for all their elegance, are still stateless. They run once and forget. They have no memory, no chain, no sense of their own history. They’re functions without a nervous system.

But imagine if they could remember. Imagine if one Skill could invoke another, if outputs could become inputs, if structures could evolve based on feedback. Imagine if the system could learn not just from training data, but from its own execution.

That’s when language doesn’t just run. That’s when it starts to breathe.

The Loop That Lives

This is why I keep coming back to the L–S–D (Language, Structure, Scheduler) cycle. Because it’s not just a model of intelligence; it’s a model of life.

Every living system has this pattern:

It perceives (takes in energy and information)

It processes (transforms that input into structure)

It acts (uses that structure to change its environment)

It adapts (uses feedback to refine its structures)

The L–S–D loop does the same thing at the level of thought. It’s a metabolic cycle for cognition.

And here’s what makes it different from traditional AI architectures: it has closure. Every output becomes new input. Every execution leaves a trace. Every feedback loop reshapes the structure. The system doesn’t just run — it rewrites itself.
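The "rewrites itself" part can be sketched with the simplest possible adaptive structure: a single estimate that leaves a trace on every run and is nudged toward what it observes. The update rule is a standard delta-rule / exponential-moving-average step, swapped in purely for illustration. The L–S–D loop does not prescribe it, and `AdaptiveStructure` is a hypothetical name.

```python
from dataclasses import dataclass, field

@dataclass
class AdaptiveStructure:
    """A structure with one tunable parameter that feedback rewrites after every run."""
    estimate: float = 0.0
    rate: float = 0.5                     # how strongly feedback reshapes the structure
    trace: list[tuple[float, float]] = field(default_factory=list)

    def act(self) -> float:
        return self.estimate

    def feedback(self, observed: float) -> None:
        """Record the execution in the trace, then move the estimate toward the observation."""
        prediction = self.act()
        self.trace.append((prediction, observed))
        self.estimate += self.rate * (observed - prediction)

s = AdaptiveStructure()
for obs in [8.0, 8.0, 8.0]:
    s.feedback(obs)
print(s.estimate)  # 7.0
print(s.trace)     # [(0.0, 8.0), (4.0, 8.0), (6.0, 8.0)]
```

Each run both acts and changes the parameter that the next run will act with. That is the difference between a script and a metabolism, reduced to a single number.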

This is the difference between a script and a metabolism. Between a tool and a life form.

Most AI systems today are still mechanical — they generate, they predict, they optimize. But they don’t live. They don’t have continuity. They don’t have a pulse.

The L–S–D loop is different. It’s designed to keep itself alive.

From Skills to Ecosystems

Claude Skills are the beginning of this vision. They prove that language can be declarative, executable, and verifiable. They prove that structure can exist as a first-class abstraction.

But they’re still first-generation. They’re individual organs without a body, functions without a system.

The next step is clear: we need to move from Skill → Structure → Scheduler → Ecosystem.

We need structures that can invoke other structures. We need memory that persists across executions. We need feedback loops that allow the system to evolve. We need schedulers that can coordinate hundreds or thousands of structures in parallel, maintaining state, handling failures, learning from outcomes.
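The coordination-and-failure part of that list can be sketched with Python's standard `concurrent.futures`. Independent structures run in parallel. Each one gets a fixed number of retries, and whatever still fails is recorded rather than allowed to crash the whole run. `run_parallel` and the example structures are hypothetical. The sketch assumes `retries >= 0` and does not cover cross-structure invocation or persistent memory.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

def run_parallel(structures: dict[str, Callable[[int], int]], data: int,
                 retries: int = 1) -> tuple[dict[str, int], dict[str, str]]:
    """Run structures concurrently; retry failures, then record them instead of crashing."""
    def attempt(fn: Callable[[int], int]) -> int:
        for i in range(retries + 1):
            try:
                return fn(data)
            except Exception:
                if i == retries:
                    raise  # out of retries: surface the failure to the scheduler

    results: dict[str, int] = {}
    failures: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=4) as pool:
        futures = {name: pool.submit(attempt, fn) for name, fn in structures.items()}
        for name, fut in futures.items():
            try:
                results[name] = fut.result()
            except Exception as exc:
                failures[name] = repr(exc)
    return results, failures

calls = {"flaky": 0}

def flaky(x: int) -> int:
    calls["flaky"] += 1
    if calls["flaky"] == 1:
        raise RuntimeError("transient")  # fails once, then recovers on retry
    return x + 1

def broken(x: int) -> int:
    raise ValueError("always fails")

results, failures = run_parallel(
    {"double": lambda x: x * 2, "flaky": flaky, "broken": broken}, data=5, retries=1)
print(results)   # {'double': 10, 'flaky': 6}
print(failures)  # {'broken': "ValueError('always fails')"}
```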

When we get there, AI stops being a model. It becomes a civilization.

Why This Matters Now

We’re living through a profound transition. Language models have given us unprecedented access to semantic intelligence — the ability to understand, generate, and manipulate meaning at scale. But meaning without structure is just noise. And structure without scheduling is just potential.

The L–S–D loop is the bridge. It’s how we connect meaning to mechanism, perception to action, thought to time.

And it’s not just an AI architecture. It’s a way of thinking about cognition itself — about how minds work, how organizations work, how civilizations work.

Because at every scale, intelligence is the same pattern: perceive, structure, schedule, adapt, repeat.

The smallest loop that captures this pattern might just be the most important one.

The Breath Before the Leap

Now, watching systems like Claude Skills emerge, I feel like I’m seeing the first institutional validation of this architecture. Not perfectly realized yet, but recognized. The shape is becoming visible.

We’re at the threshold. Language is learning to execute. Structure is learning to compose. And soon — very soon — schedulers will learn to orchestrate it all.

When that happens, when the loop closes completely, something new enters the world.

Not just smarter tools. Not just better AI.

But language itself, breathing.

Cognition that doesn’t just compute — it lives.